Support for OpenAI Vision, Assistants and more

Pipedream empowers you to build, test, and deploy end-to-end workflows in a fraction of the time. Building functional proofs of concept with pre-built actions, triggers, or custom Node.js or Python code takes just a few minutes.

Now you can leverage the latest AI developments in your workflows at the same speed with OpenAI's enhanced AI APIs.

OpenAI recently announced major additions to its already robust AI APIs, including Vision and Assistants.

We've just released a slew of new actions and triggers that leverage these powerful new features of OpenAI directly within Pipedream.

In this post, we're going to specifically cover the Vision API and give you functioning sample workflows that you can use to kick-start your AI projects.

What is GPT-4 Computer Vision?

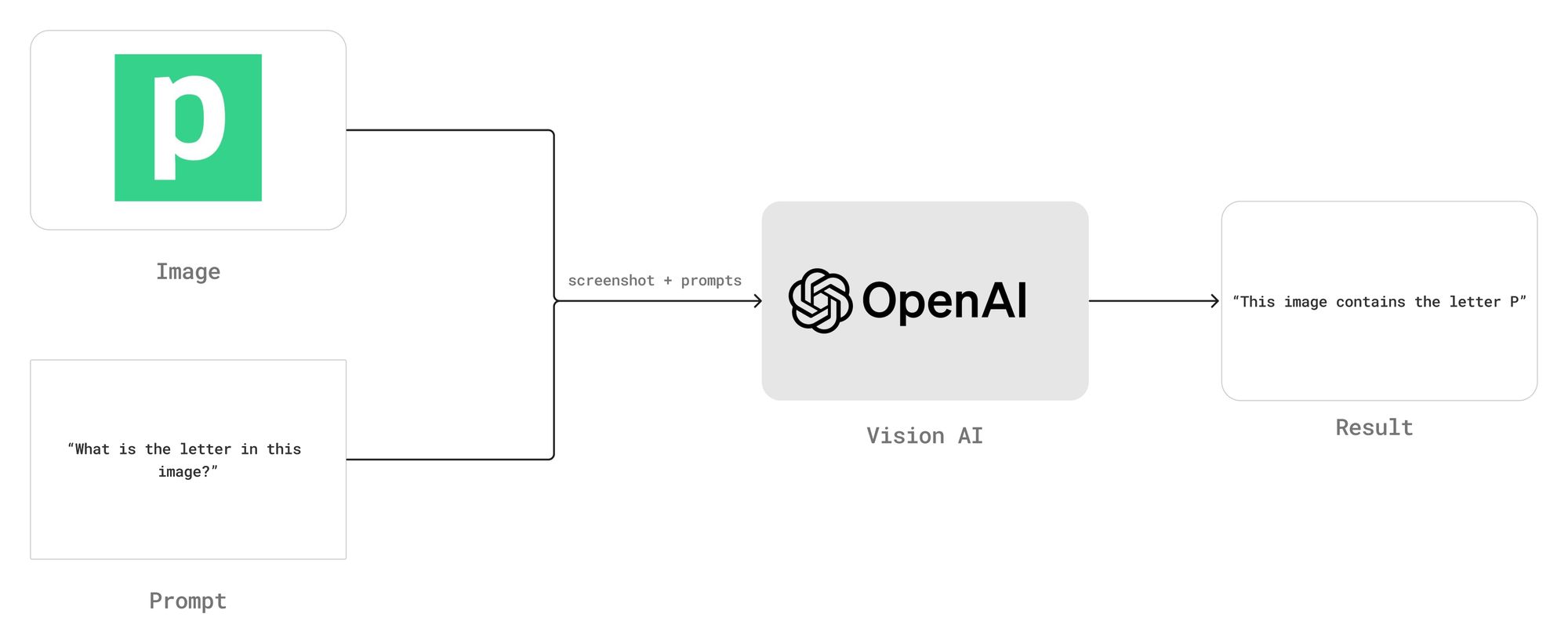

Not to be confused with DALL-E, OpenAI's image generation API, the OpenAI Vision API allows GPT models to read images as inputs and answer prompts about them.

Prior to this update, you could only prompt OpenAI with text. Questions were sent in text format, and you'd receive text answers.

However, with the Computer Vision support, you can provide one or more images alongside your prompt and the OpenAI model will programmatically read the images.
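To make "images alongside your prompt" concrete, here is a minimal sketch of the message shape that OpenAI's Chat Completions API accepts for image input: one text part followed by one image part per image. The helper name `build_vision_message` and the example.com URLs are made up for illustration; only the `text` and `image_url` part types come from OpenAI's API.

```python
def build_vision_message(prompt, image_urls):
    """Return one user message that carries a text prompt plus images."""
    # The text part holds the question itself.
    content = [{"type": "text", "text": prompt}]
    # Each image becomes its own part; the model receives all of them together.
    for url in image_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"role": "user", "content": content}


# Placeholder image URLs, used only to show a prompt with more than one image.
message = build_vision_message(
    "Are these two photos of the same person?",
    ["https://example.com/photo-a.jpg", "https://example.com/photo-b.jpg"],
)
```

The message would then go into the `messages` list of a chat request, in the same place a plain text message would.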

Vision AI is a game changer

Until now, each computer vision task has had its own bespoke API.

Detecting objects in an image uses a different computer vision algorithm from extracting text (OCR). You would have to learn the pros and cons of the algorithms in each of the following areas to find the right fit for the problem at hand:

- Object detection

- OCR (Optical Character Recognition)

- Classification

- Comparison

Each of these computer vision problem areas has its own flavors of algorithms, and although there are commercial APIs for each, you'd still need to wire them together by hand for even simple solutions.

OpenAI's Vision API just upended the entire computer vision category of Machine Learning by allowing you to prompt in human language against images with a single API endpoint:

- Is there a cat in this photo? (Classification)

- Where in this photo are the cats? (Object detection)

- What's the item's name, price, and description in this screenshot? (OCR)

- Are these two photos of the same person? (Comparison)
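As a rough sketch of what "a single API endpoint" looks like outside of Pipedream, the snippet below builds (but does not send) one HTTP request per question above. Every request targets the same Chat Completions URL with the same body shape; only the question text differs. The `build_request` helper, the placeholder API key, the example.com images, and the `max_tokens` value are assumptions made for this example.

```python
import json
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"

# Only the question changes between tasks; the endpoint and body shape do not.
QUESTIONS = {
    "classification": "Is there a cat in this photo?",
    "object detection": "Where in this photo are the cats?",
    "ocr": "What's the item's name, price, and description in this screenshot?",
    "comparison": "Are these two photos of the same person?",
}


def build_request(api_key, question, image_urls):
    """Build a POST request for the chat endpoint without sending it."""
    content = [{"type": "text", "text": question}]
    content += [{"type": "image_url", "image_url": {"url": url}} for url in image_urls]
    body = {
        "model": "gpt-4-vision-preview",
        "max_tokens": 300,  # assumed cap on answer length, adjust as needed
        "messages": [{"role": "user", "content": content}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The same two placeholder images are reused for every task to keep this short.
PLACEHOLDER_IMAGES = [
    "https://example.com/photo-a.jpg",
    "https://example.com/photo-b.jpg",
]
requests_by_task = {
    task: build_request("YOUR_API_KEY", question, PLACEHOLDER_IMAGES)
    for task, question in QUESTIONS.items()
}
```

Passing one of these requests to `urllib.request.urlopen` would send it. That call is left out so the sketch runs without an API key.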

How can I use Computer Vision in Pipedream?

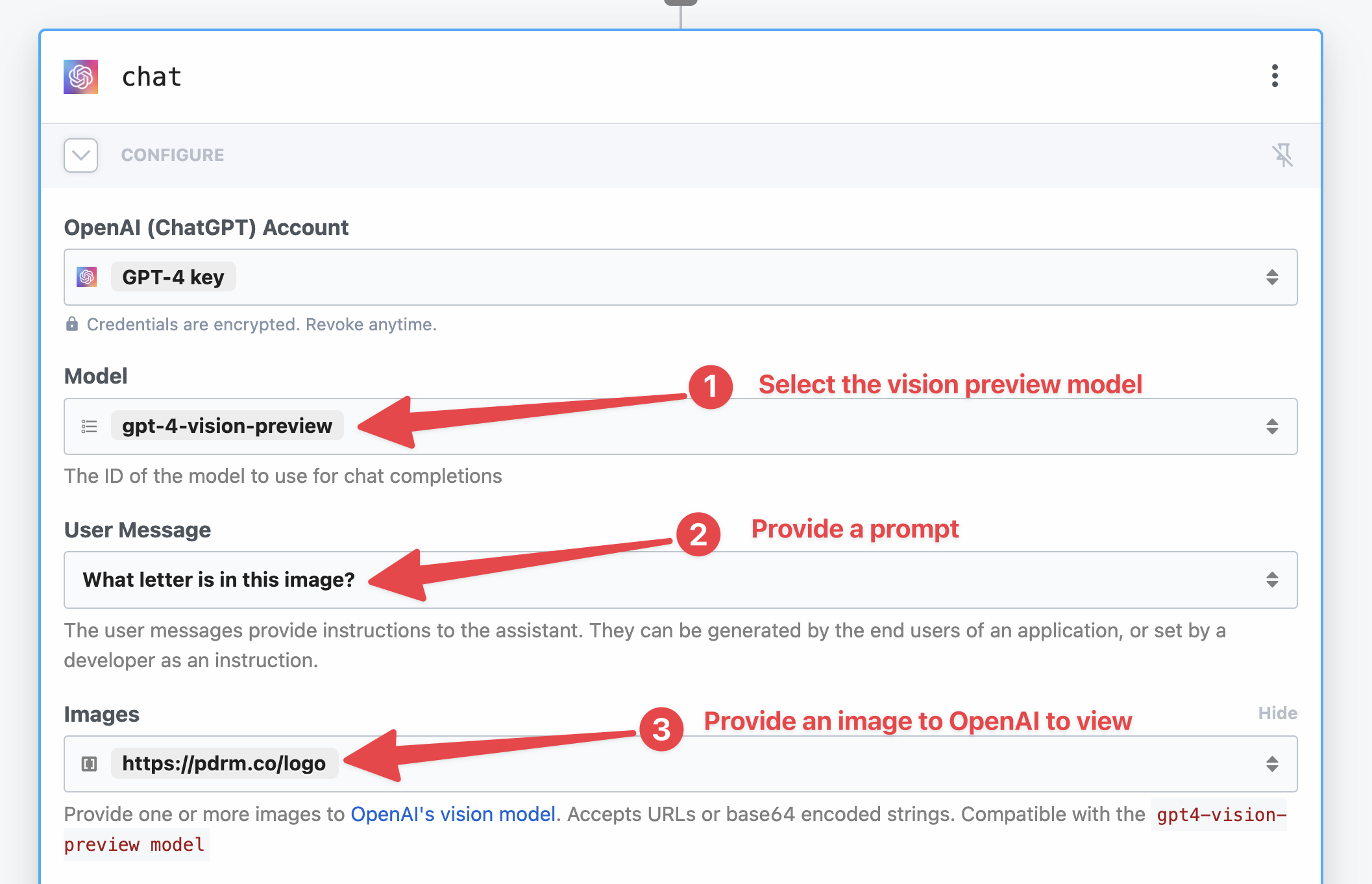

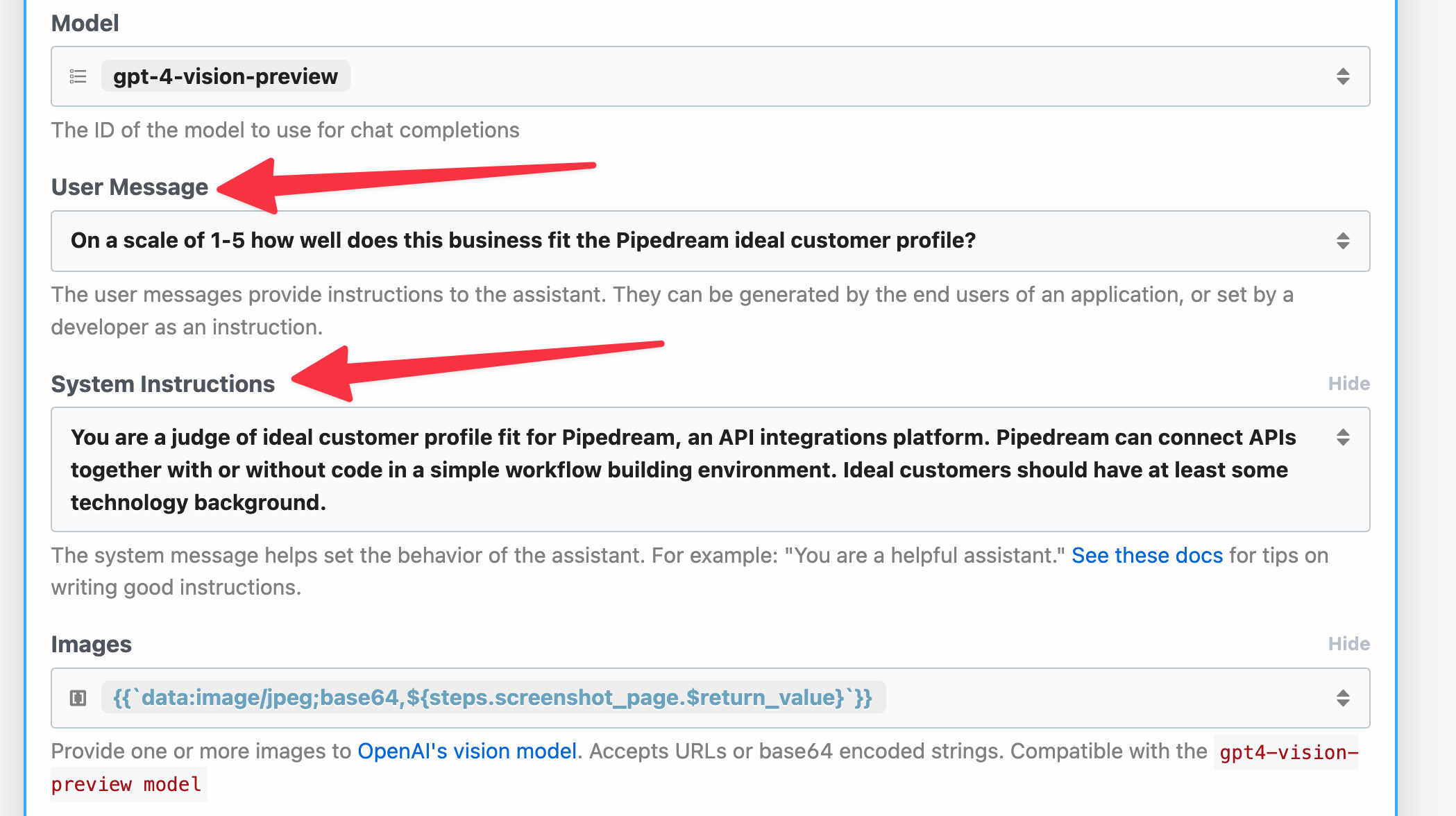

This feature is now available in the OpenAI - Chat action in Pipedream.

To pass an image to the prompt, enable the Images option and pass one or more images by URL or as a base64-encoded string. Then add your prompt under the User Message field.
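If you call the API from a custom code step rather than the pre-built action, the two input styles can be normalized into one value. The sketch below assumes the raw Chat Completions API, which accepts either a regular URL or a base64 `data:` URL in the image part. The `to_image_url` helper and its PNG default are hypothetical, and the Pipedream action may handle this encoding for you.

```python
import base64


def to_image_url(image, mime_type="image/png"):
    """Return a value usable as the image URL in a vision request.

    `image` is either a URL string, which is passed through unchanged,
    or raw image bytes, which are wrapped in a base64 data URL.
    """
    if isinstance(image, str):
        return image
    encoded = base64.b64encode(image).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


# A URL stays as it is.
logo_url = to_image_url("https://pdrm.co/logo")

# Raw bytes (here just the first bytes of a PNG header) become a data URL.
inline_image = to_image_url(b"\x89PNG")
```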

For this example, we'll use an image of Pipedream's logo: https://pdrm.co/logo

And then let's ask OpenAI a simple question: "What is the letter in this photo?"

Don't forget to choose the gpt-4-vision-preview model, which is compatible with the Vision API.

The action will look like this:

Clicking Test runs this step, and OpenAI successfully reads the image content:

Copy this sample workflow to your Pipedream account.

Reading the Web visually with OpenAI

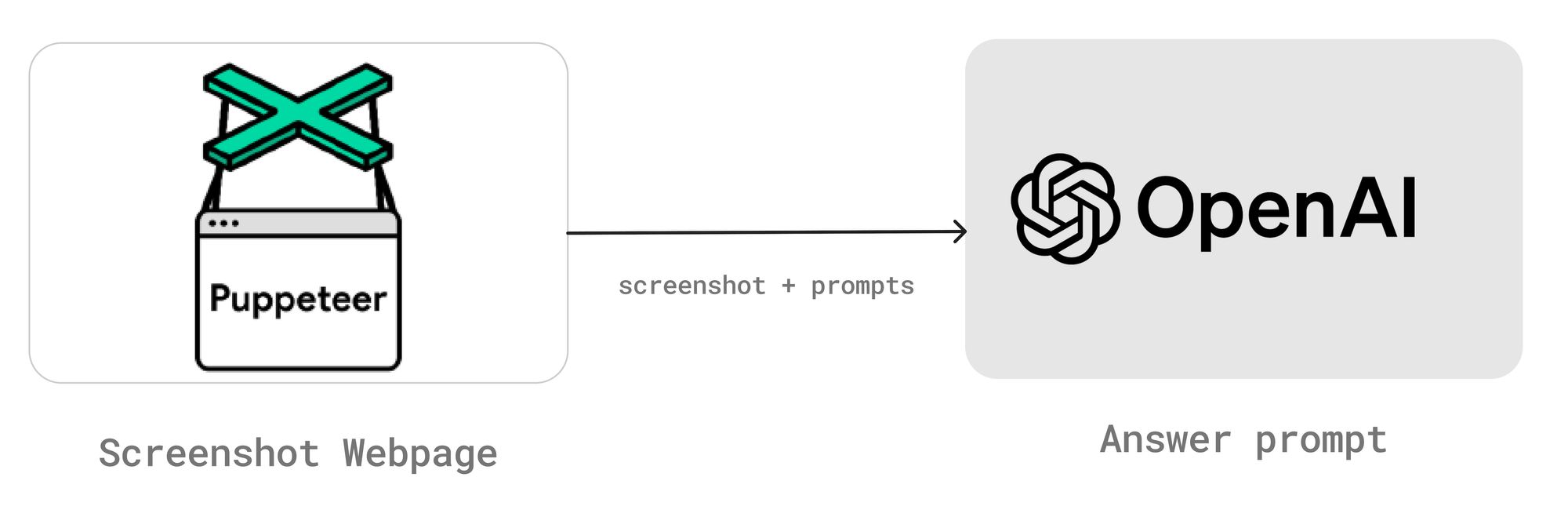

This tool is exceptionally powerful paired with screenshots of websites. One of the faults of scraping content from the web manually through parsing HTML is how brittle the automations can be.

Websites are subject to change in design or structure, and the smallest changes can break any automations you have built around the anticipated structure of the underlying HTML.

Web scraping automations are brittle because they expect HTML in a specific structure. Vision AI bypasses layout changes and allows general questioning of a webpage visually.

Computer Vision is a more robust answer to this issue. Paired with prompting, you can simply pass a screenshot of a website to ChatGPT to extract details or summarize pages.

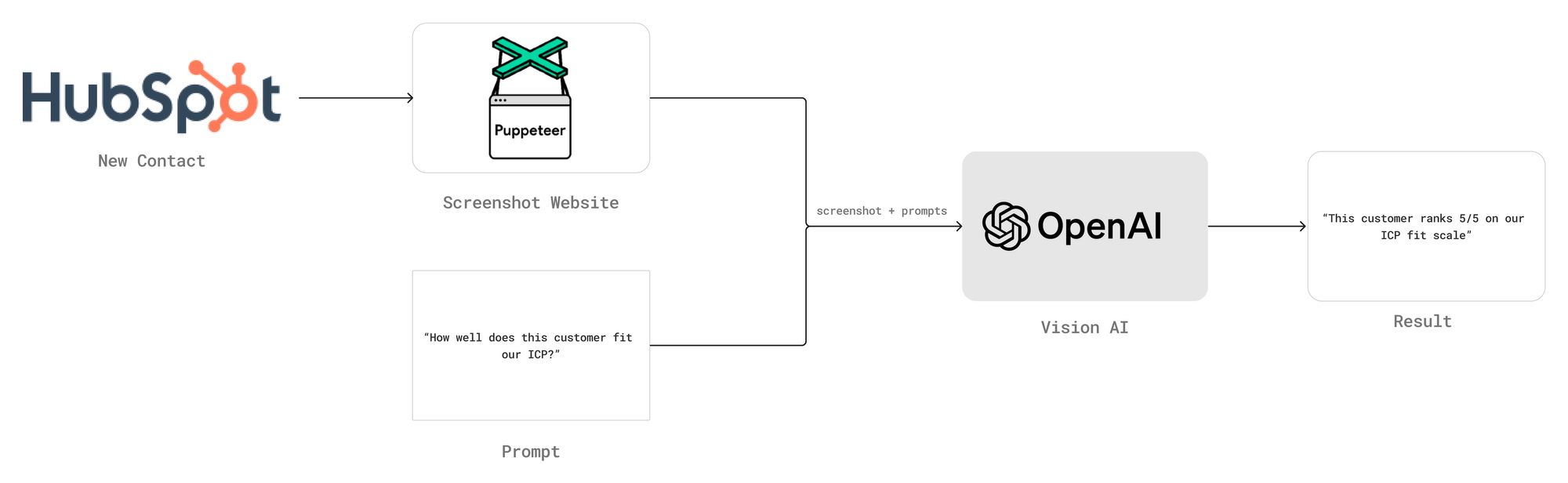

With Pipedream's native Puppeteer support, you can simply use the Puppeteer - Get Screenshot action to screenshot a URL, then pass the screenshot to OpenAI - Chat to summarize what the page is about.

Extracting details about a lead's website

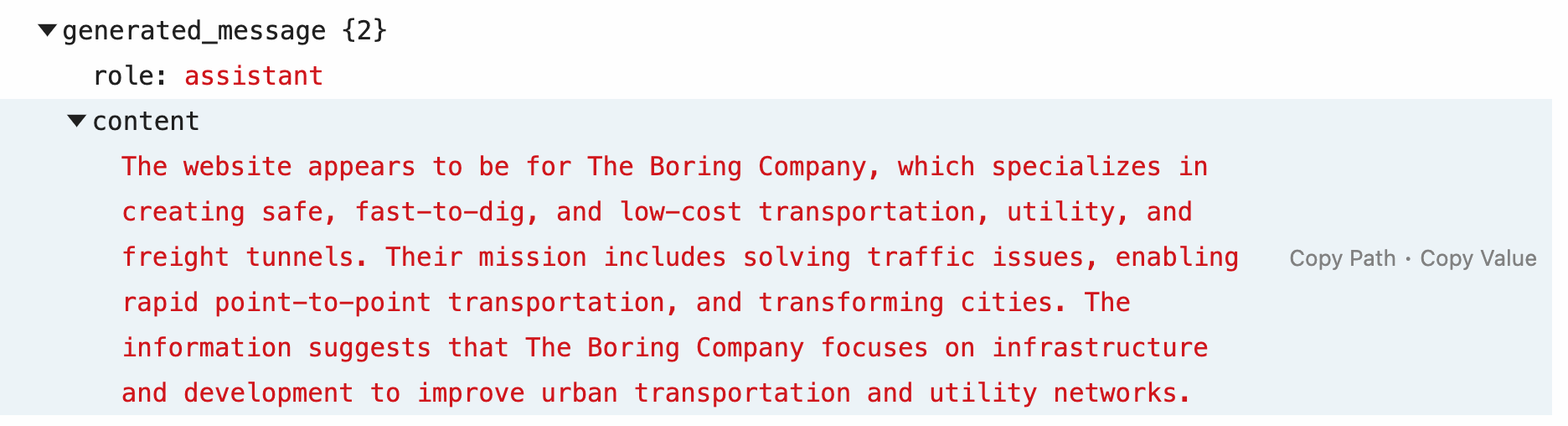

Leveraging OpenAI Vision and Puppeteer's screenshot ability, you can extract details automatically from your incoming leads' websites.

For example, you can trigger a workflow from new leads from your CRM, like HubSpot, Salesforce, or Pipedrive. Then extract the website from the lead's email address, screenshot it, and pass a prompt to OpenAI:

"Describe the service or product that this website provides."

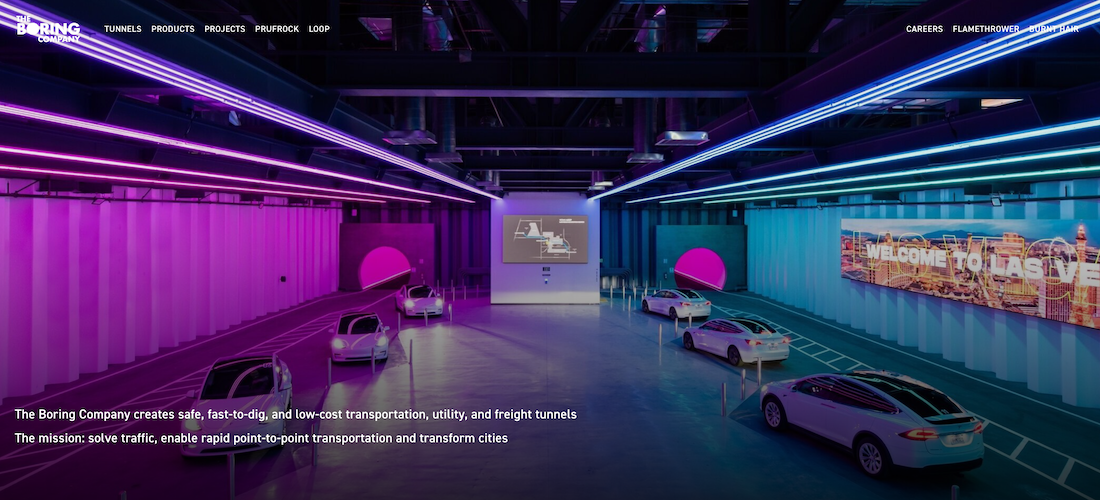
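The step that turns a lead's email address into a website to screenshot could be sketched in a Python code step like this. The `website_from_email` helper is hypothetical, and skipping free mailbox providers is an added assumption (a Gmail address says nothing about the lead's company); the short domain list is only an example.

```python
# Assumed examples of mailbox providers whose domain is not the lead's website.
FREE_MAIL_DOMAINS = {"gmail.com", "outlook.com", "yahoo.com"}


def website_from_email(email):
    """Return "https://<domain>" for a work email, or None if unusable."""
    # Split on the last "@" so the domain is everything after it.
    local, sep, domain = email.strip().rpartition("@")
    domain = domain.lower()
    # Reject strings with no "@", an empty local part, or a dotless domain.
    if not sep or not local or "." not in domain:
        return None
    if domain in FREE_MAIL_DOMAINS:
        return None
    return f"https://{domain}"


lead_website = website_from_email("elon@boringcompany.com")
```

The resulting URL is what the screenshot step would receive as its input.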

Given a lead from elon@boringcompany.com, this workflow will capture a screenshot of boringcompany.com's website and categorize the business:

Copy this workflow to your Pipedream account.

You can then enrich your CRM with these details, or include them in a Slack or Discord notification for you and your team.

Rating Ideal Customer Profile fit with AI

We can tweak the workflow above to determine ICP (Ideal Customer Profile) fit instead by changing OpenAI's instructions and prompt.

We'll just add a System Instruction to OpenAI to instruct the AI about our ideal customer:

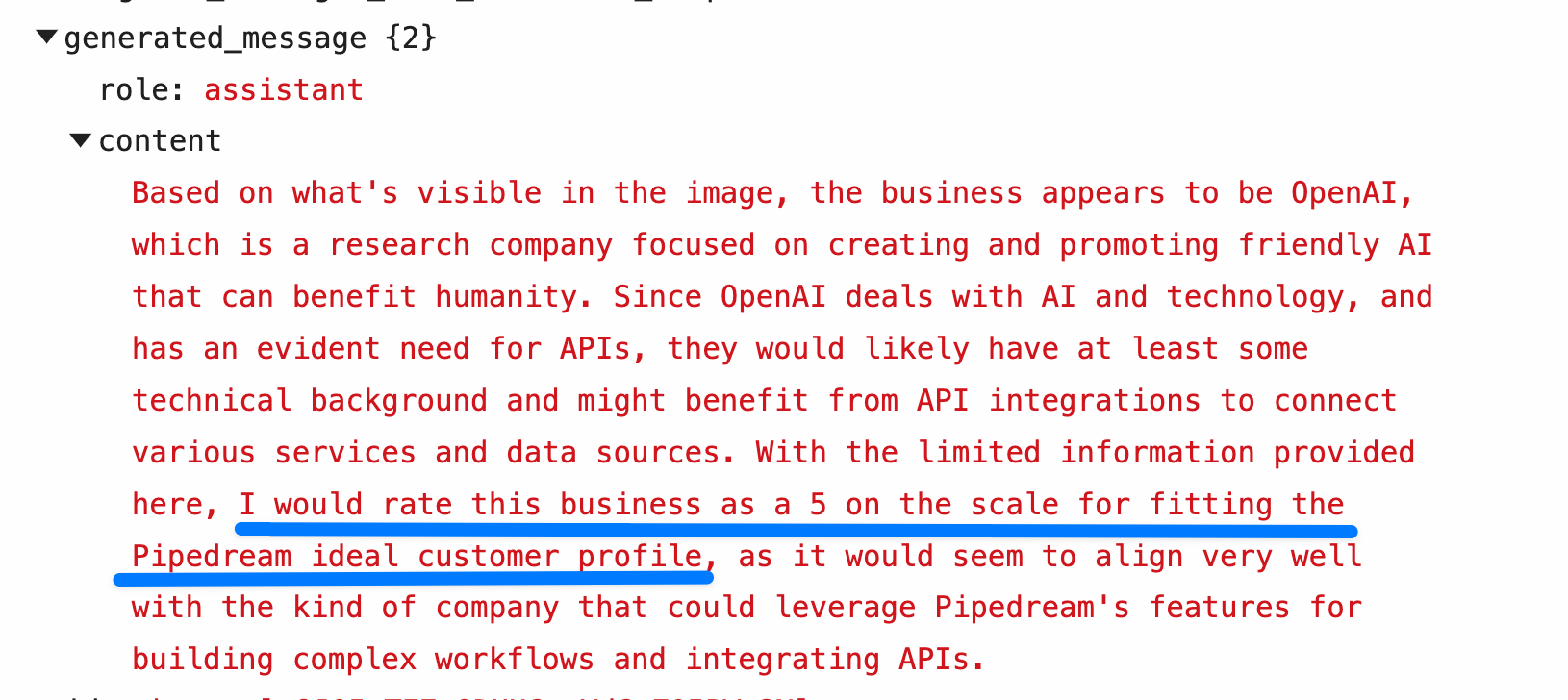
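At the API level, a system instruction is simply an extra message with the `system` role placed before the user's message. The sketch below shows that ordering. The instruction text is a placeholder, not the wording used in this workflow, and the `with_system_instruction` helper and the screenshot URL are likewise hypothetical.

```python
def with_system_instruction(instruction, user_message):
    """Return a message list with the system instruction placed first."""
    return [{"role": "system", "content": instruction}, user_message]


# Placeholder wording: replace the bracketed part with your own ICP description.
ICP_INSTRUCTION = (
    "Our ideal customer is [describe your ideal customer here]. "
    "Rate how well the company in the screenshot fits this profile and explain why."
)

# A user message carrying the prompt and a placeholder screenshot URL.
user_message = {
    "role": "user",
    "content": [
        {"type": "text", "text": "How well does this lead fit our ICP?"},
        {"type": "image_url", "image_url": {"url": "https://example.com/lead-homepage.png"}},
    ],
}

messages = with_system_instruction(ICP_INSTRUCTION, user_message)
```

Keeping the ICP description in the system message means the user prompt can stay the same for every lead while the profile is edited in one place.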

Given a new lead, sam@openai.com, let's see how well they fit our ICP.

The response is good! OpenAI determines that this is an ideal fit for Pipedream:

Now you can easily add an action to update the lead in your CRM with these details, or post them to Slack if you have a new lead notification already set up.

Copy this workflow to your Pipedream account

Examples of CRM triggers you can use with this workflow:

More coming soon!

We just covered a few sample use cases for one facet of OpenAI's new API offerings.

We'll be publishing more tutorials here on our blog and on our YouTube channel, along with Live Workflow Building Sessions where you can learn how to integrate these cutting-edge AI tools within Pipedream.