* 🗎 README.md
* 🗎 airtable.app.mjs
* 🗎 package.json
* 🗁 actions
  * 🗁 get-record
    * 🗎 get-record.mjs
* 🗁 node\_modules
  * 🗎 ...here be dragons
* 🗁 sources
  * 🗁 new-records
    * 🗎 new-records.mjs

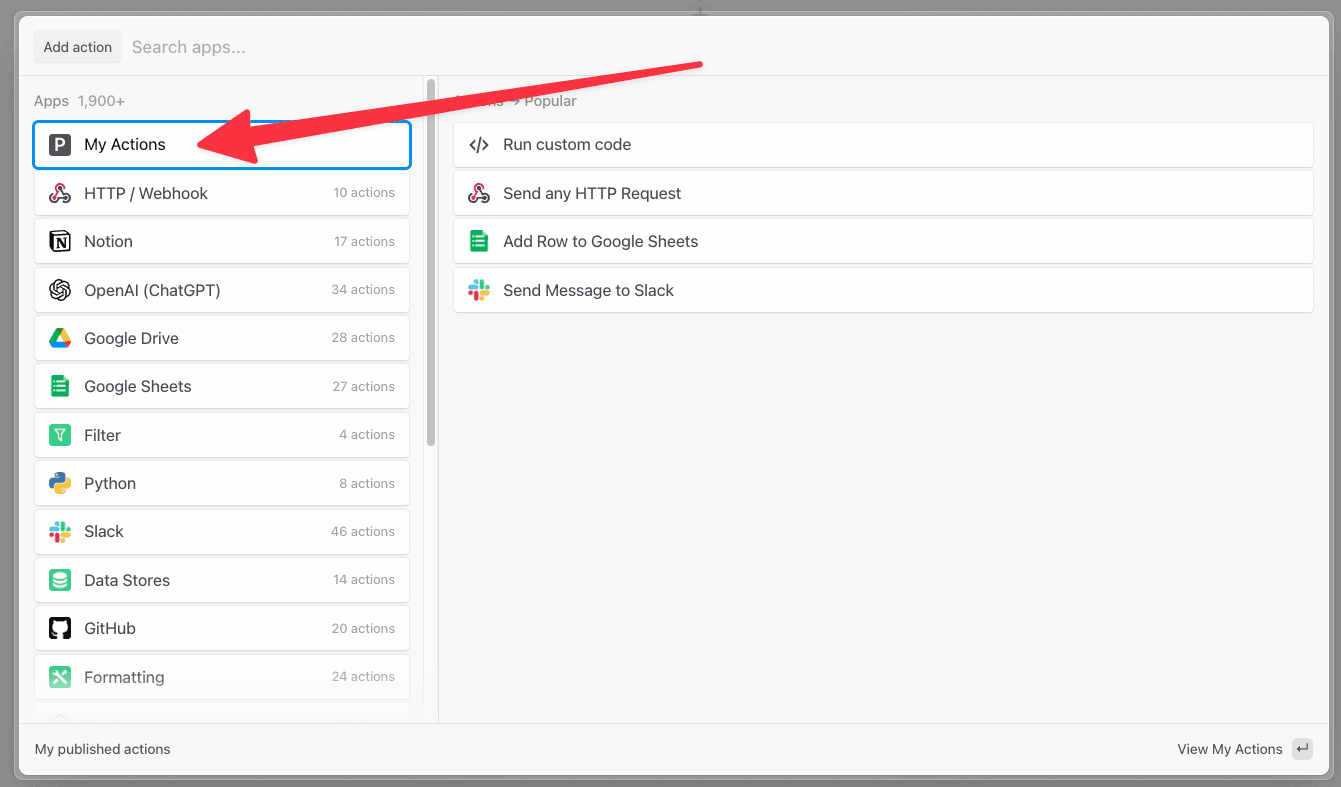

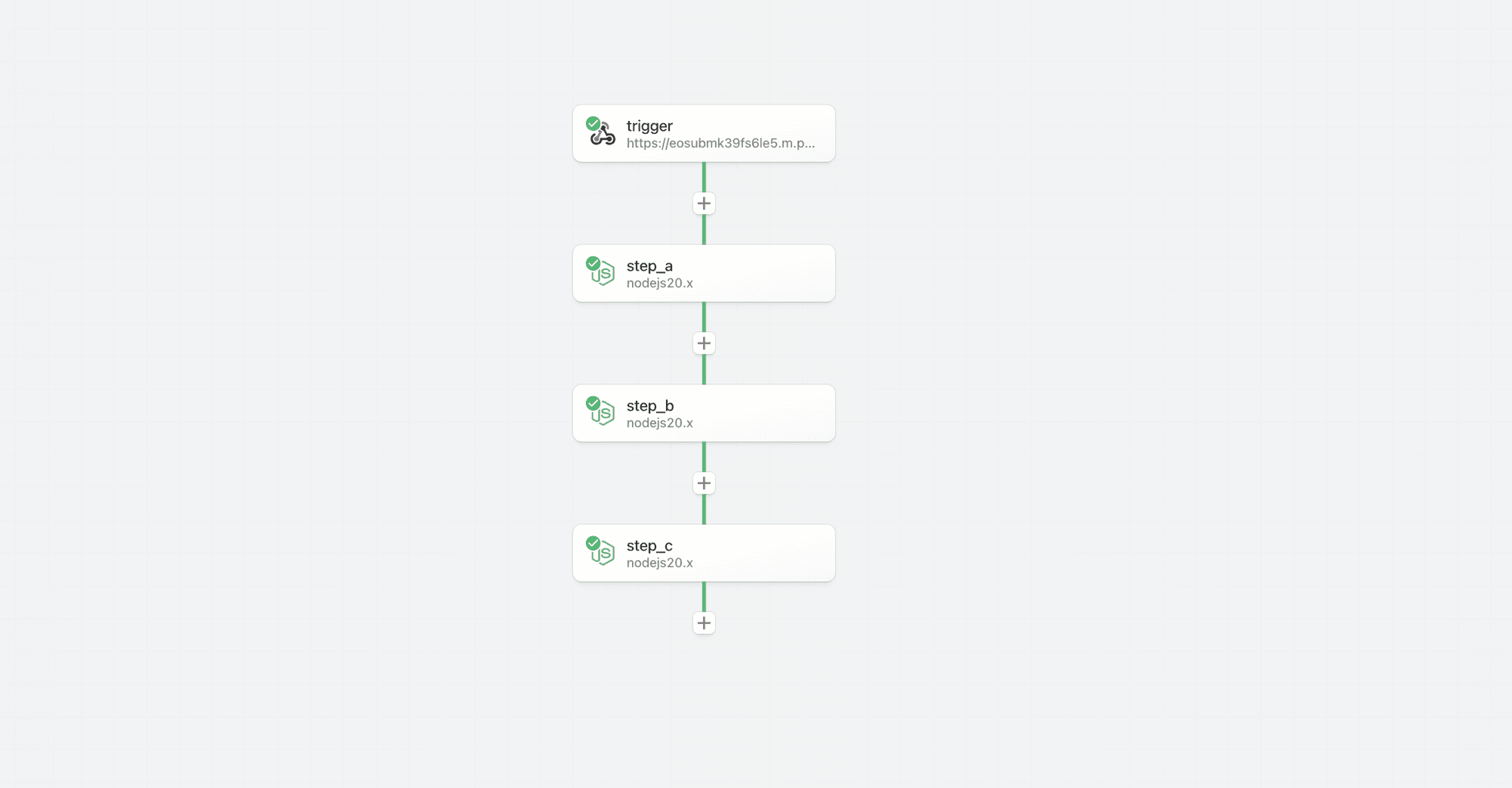

In the example above, the `components/airtable/actions/get-record/get-record.mjs` component is published as the **Get Record** action under the **Airtable** app within the workflow builder in Pipedream.

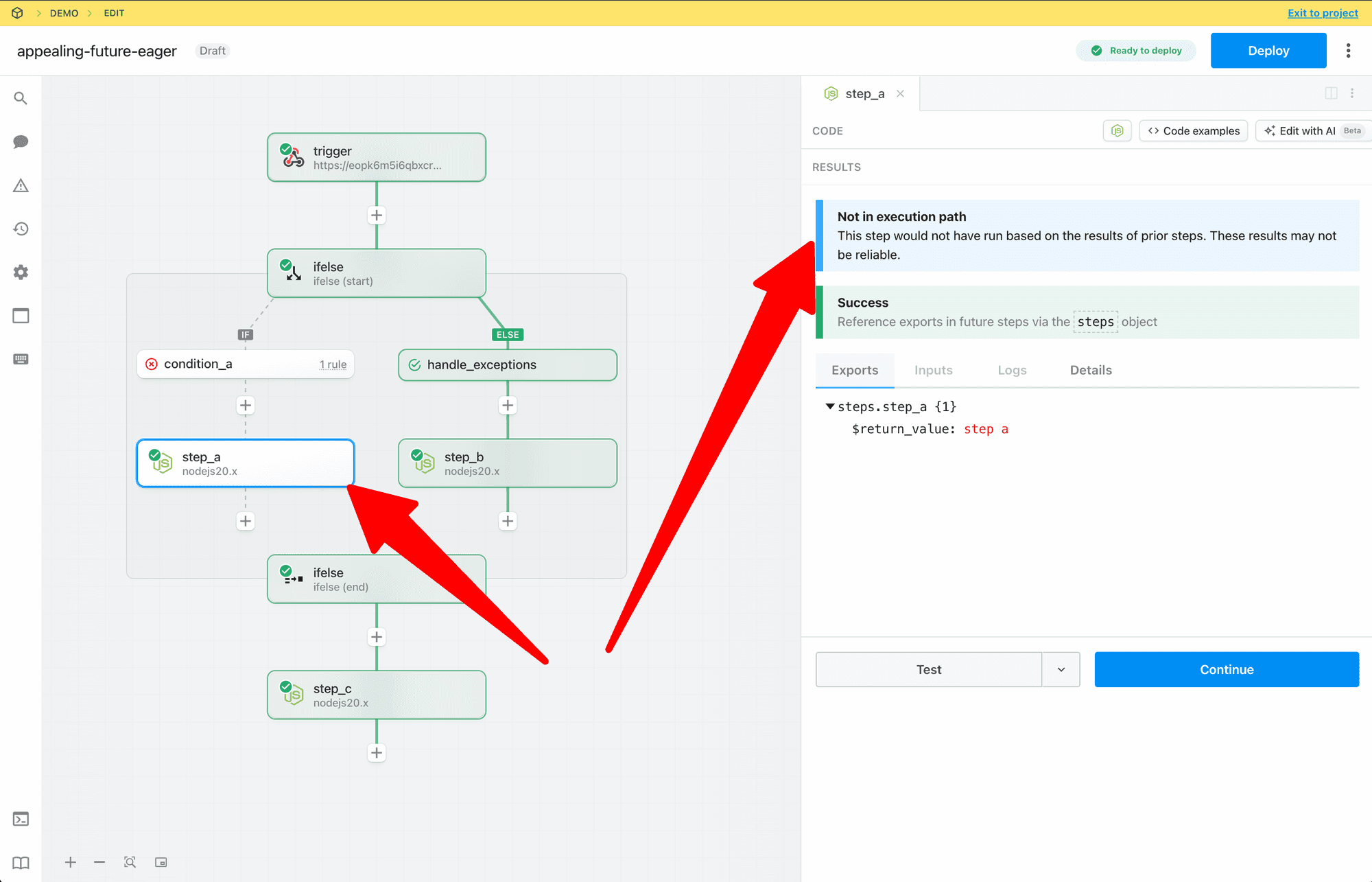

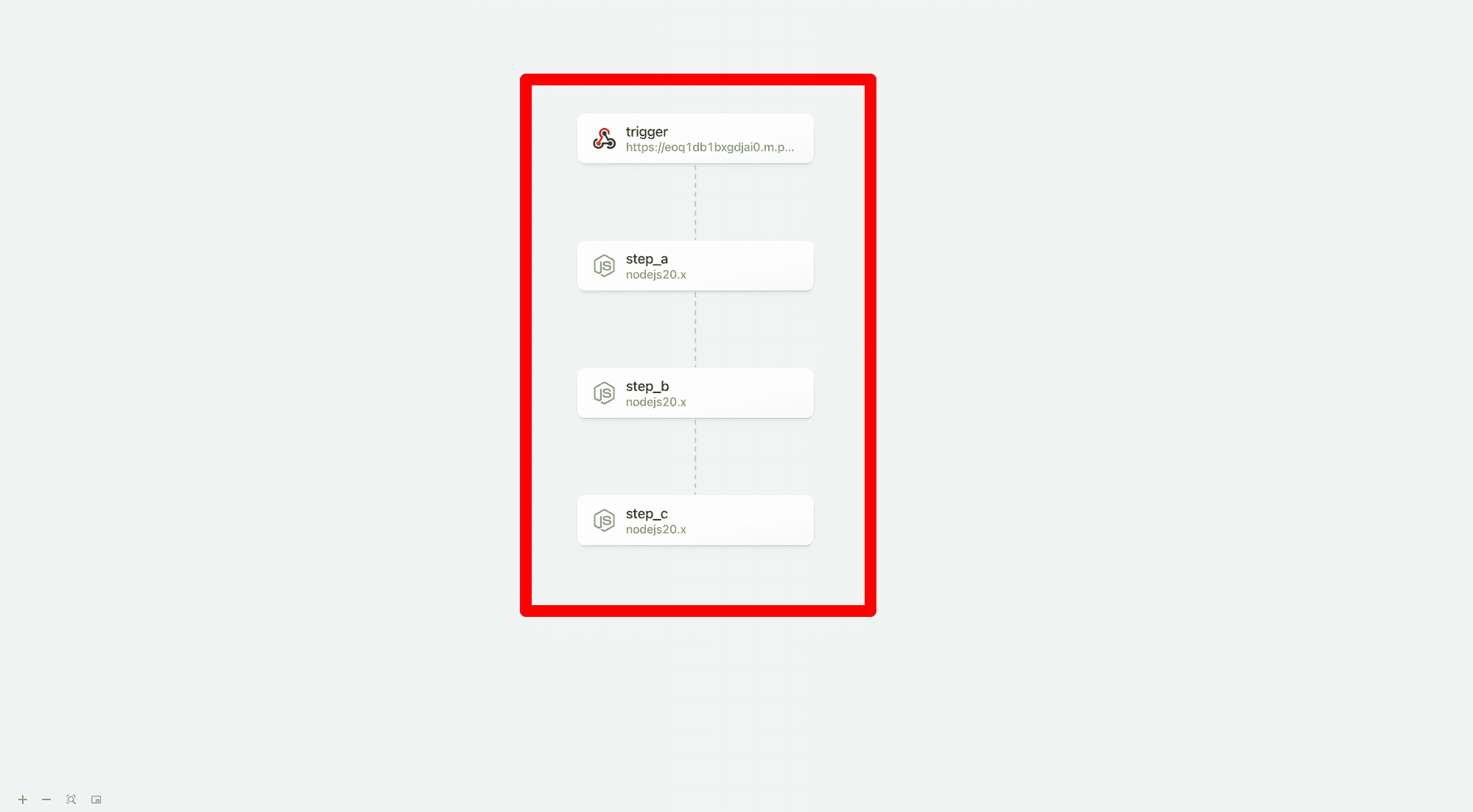

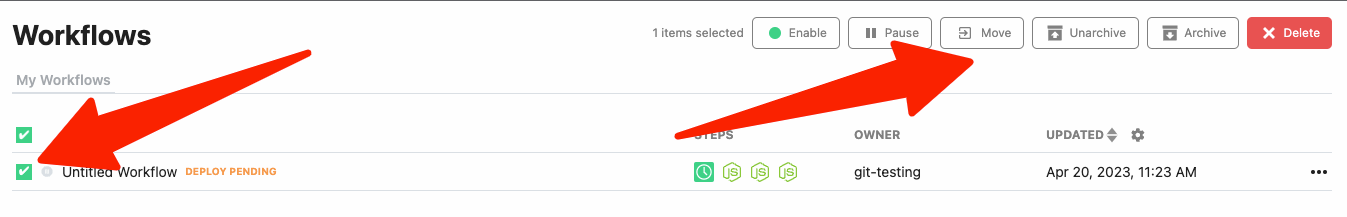

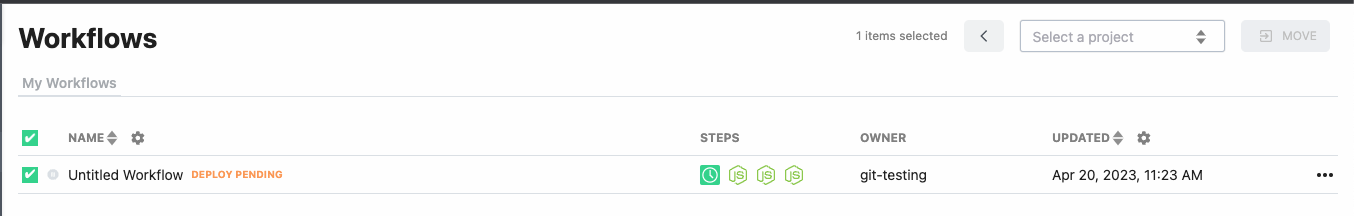

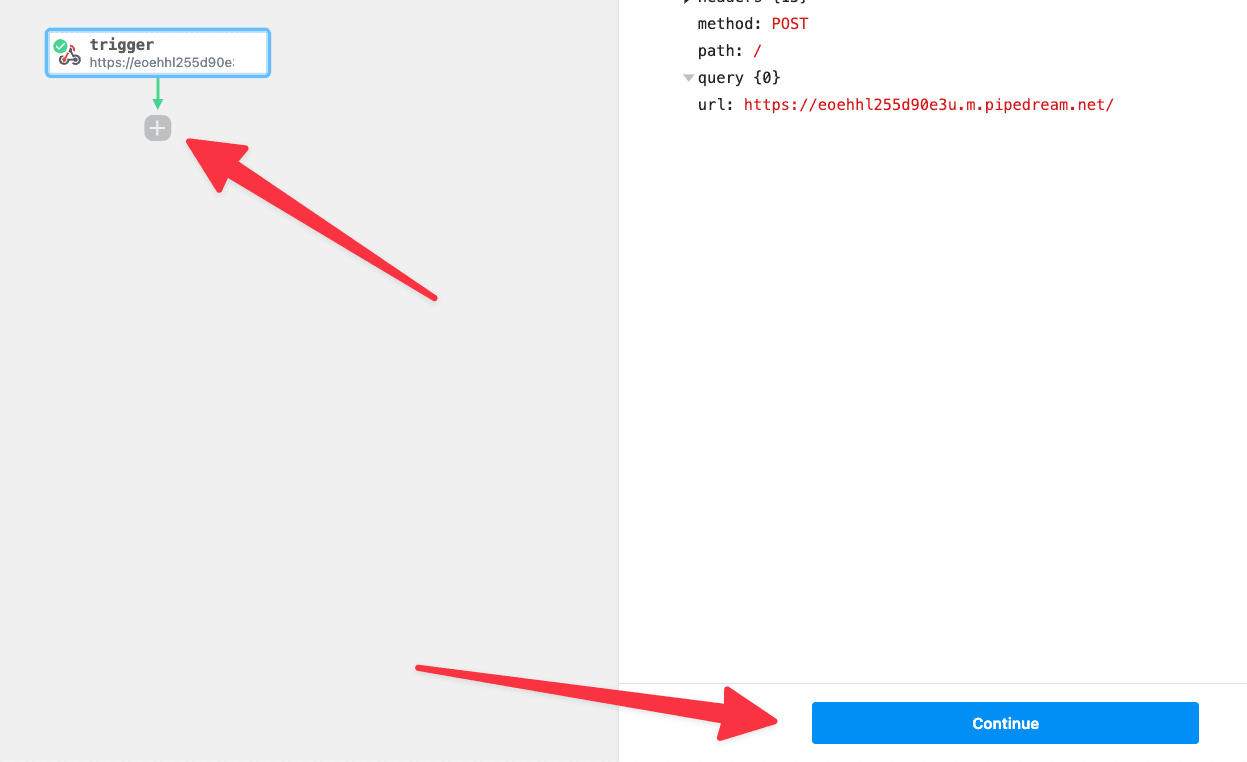

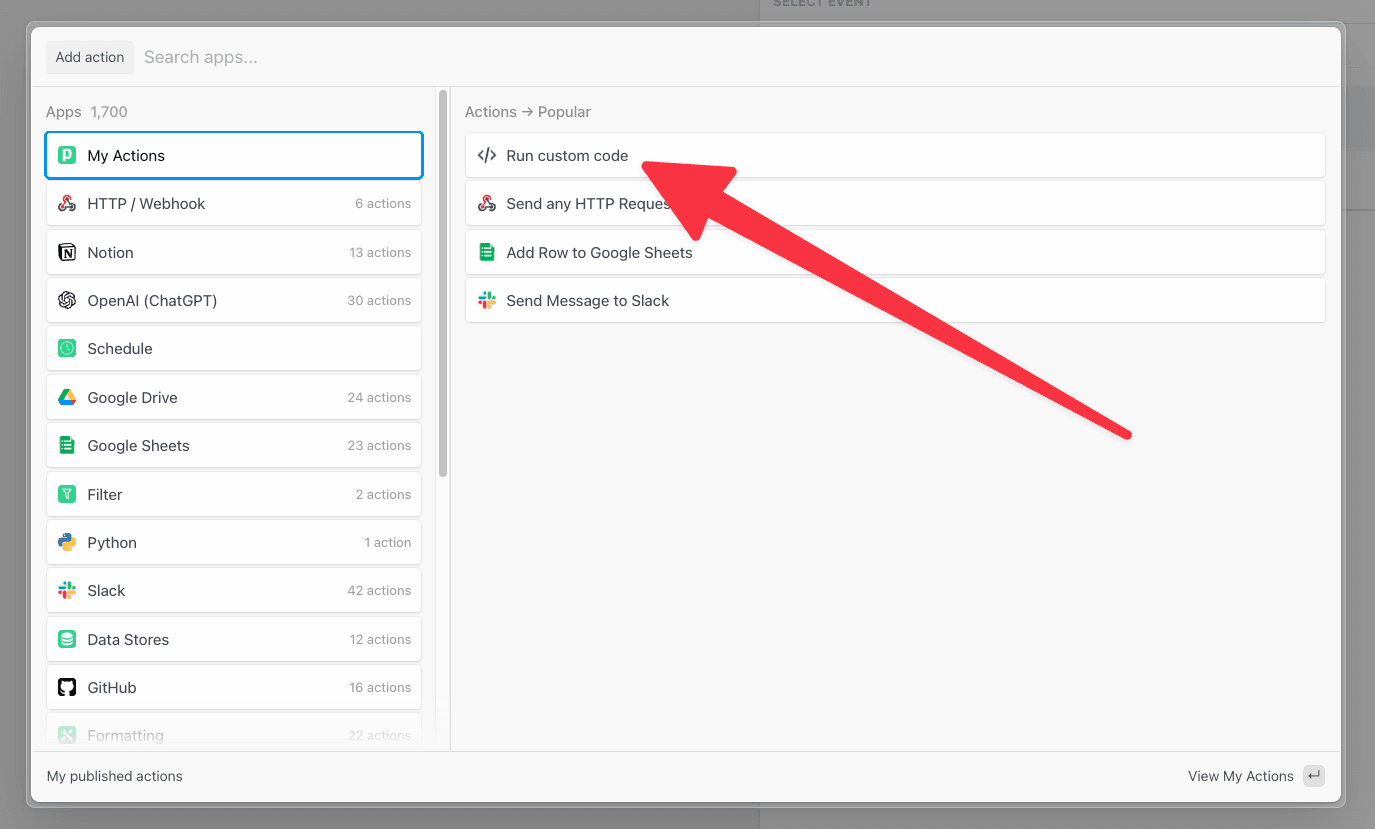

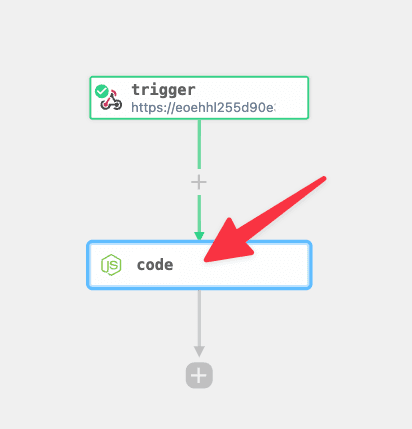

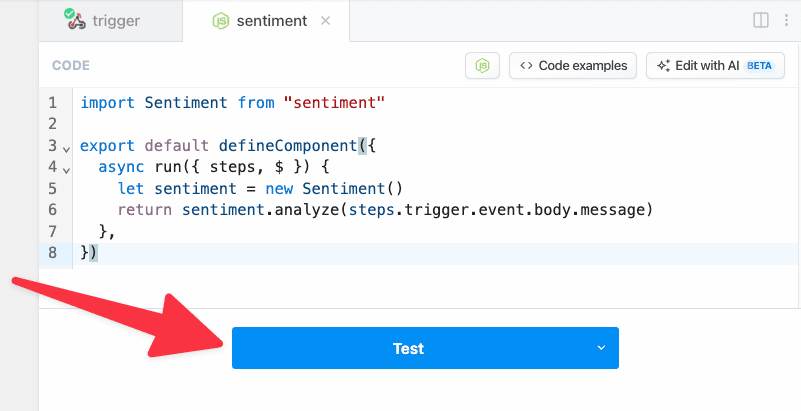

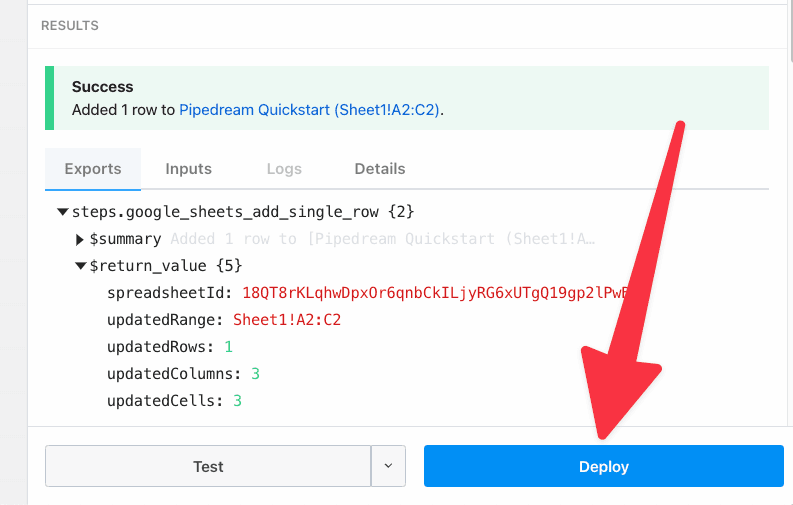

5. Deploy your workflow

6. Click **RUN NOW** to execute your workflow and action

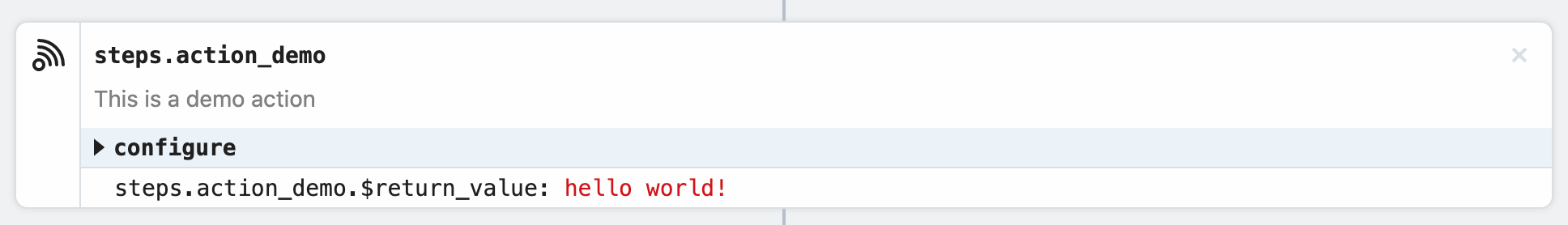

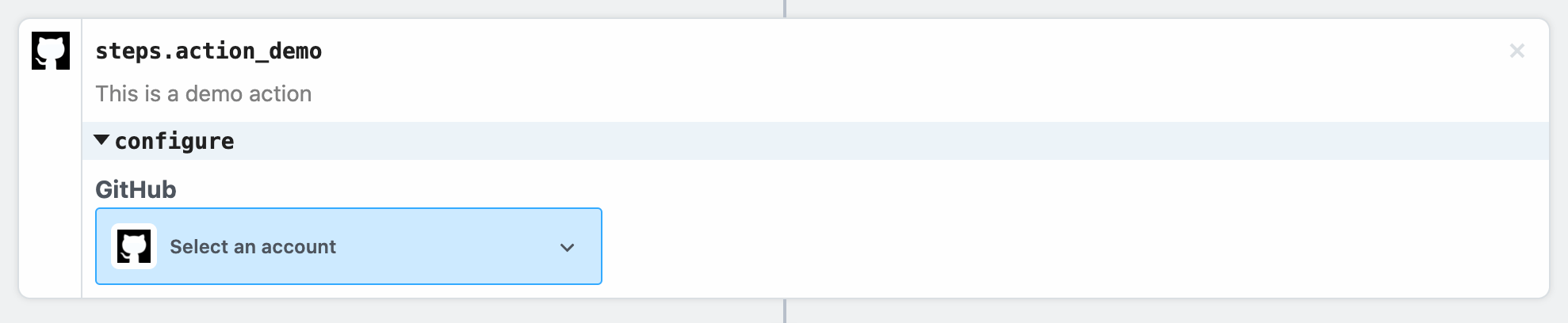

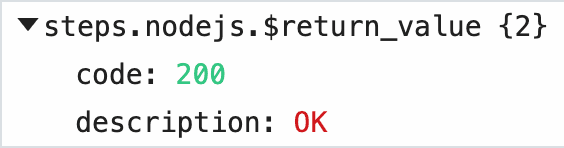

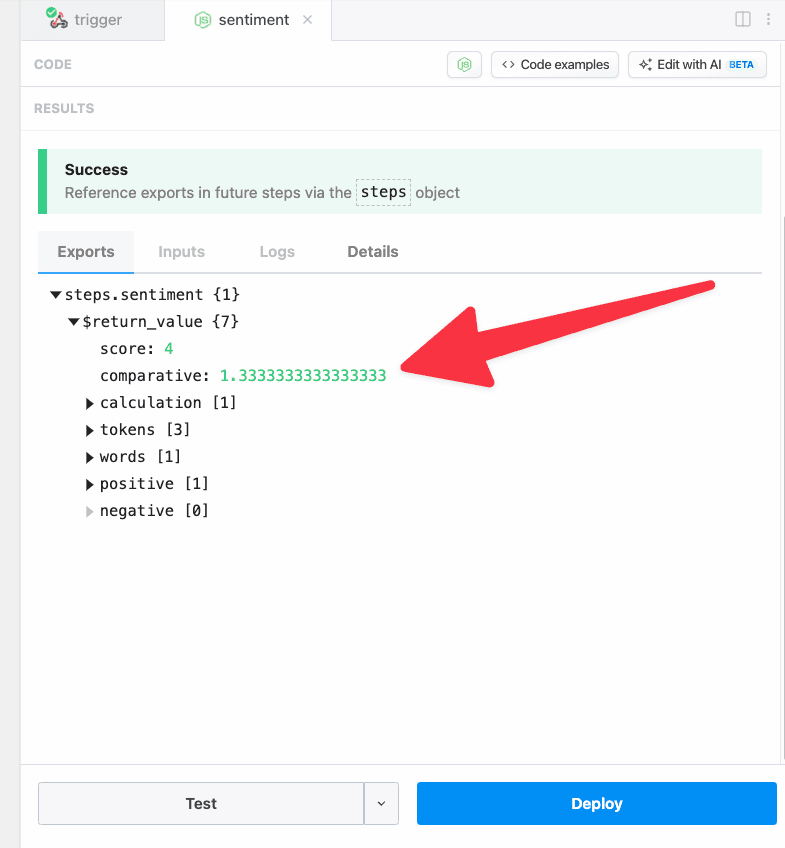

You should see `hello world!` returned as the value for `steps.action_demo.$return_value`.

Keep the browser tab open. We’ll return to this workflow in the rest of the examples as we update the action.

### hello \[name]!

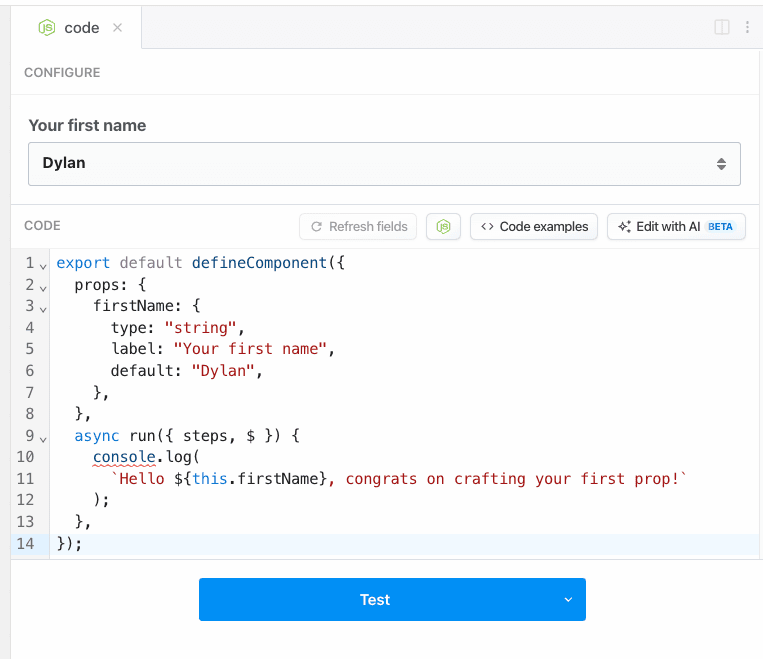

Next, let’s update the component to capture some user input. First, add a `string` [prop](/docs/components/contributing/api/#props) called `name` to the component.

```javascript
export default {
  name: "Action Demo",
  description: "This is a demo action",
  key: "action_demo",
  version: "0.0.1",
  type: "action",
  props: {
    name: {
      type: "string",
      label: "Name",
    },
  },
  async run() {
    return `hello world!`;
  },
};
```

Next, update the `run()` function to reference `this.name` in the return value.

```javascript
export default {
  name: "Action Demo",
  description: "This is a demo action",
  key: "action_demo",
  version: "0.0.1",
  type: "action",
  props: {
    name: {
      type: "string",
      label: "Name",
    },
  },
  async run() {
    return `hello ${this.name}!`;
  },
};
```

Finally, update the component version to `0.0.2`. If you fail to update the version, the CLI will throw an error.

```javascript
export default {
  name: "Action Demo",
  description: "This is a demo action",
  key: "action_demo",
  version: "0.0.2",
  type: "action",
  props: {
    name: {
      type: "string",
      label: "Name",
    },
  },
  async run() {
    return `hello ${this.name}!`;
  },
};
```
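
To see how the `name` prop reaches `run()`, the sketch below calls the component outside Pipedream. It is a minimal illustration, not a description of how the platform works internally: `runLocally` is a hypothetical helper introduced here, and the component is assigned to a constant instead of being exported so the snippet runs on its own in Node.js.

```javascript
// The same component as above, assigned to a constant so the snippet is self-contained.
const actionDemo = {
  name: "Action Demo",
  description: "This is a demo action",
  key: "action_demo",
  version: "0.0.2",
  type: "action",
  props: {
    name: {
      type: "string",
      label: "Name",
    },
  },
  async run() {
    return `hello ${this.name}!`;
  },
};

// Hypothetical helper (not part of Pipedream): invokes run() with `this`
// set to an object that holds the configured prop values.
function runLocally(component, configuredProps) {
  return component.run.call({ ...configuredProps });
}

runLocally(actionDemo, { name: "foo" }).then((result) => {
  console.log(result); // hello foo!
});
```

Because `run()` reads `this.name`, whatever value is configured for the `Name` prop ends up in the returned string.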

Save the file and run the `pd publish` command again to update the action in your account.

```
pd publish action.js
```

The CLI will update the component in your account with the key `action_demo`. You should see something like this:

```
sc_Egip04 Action Demo 0.0.2 just now action_demo
```

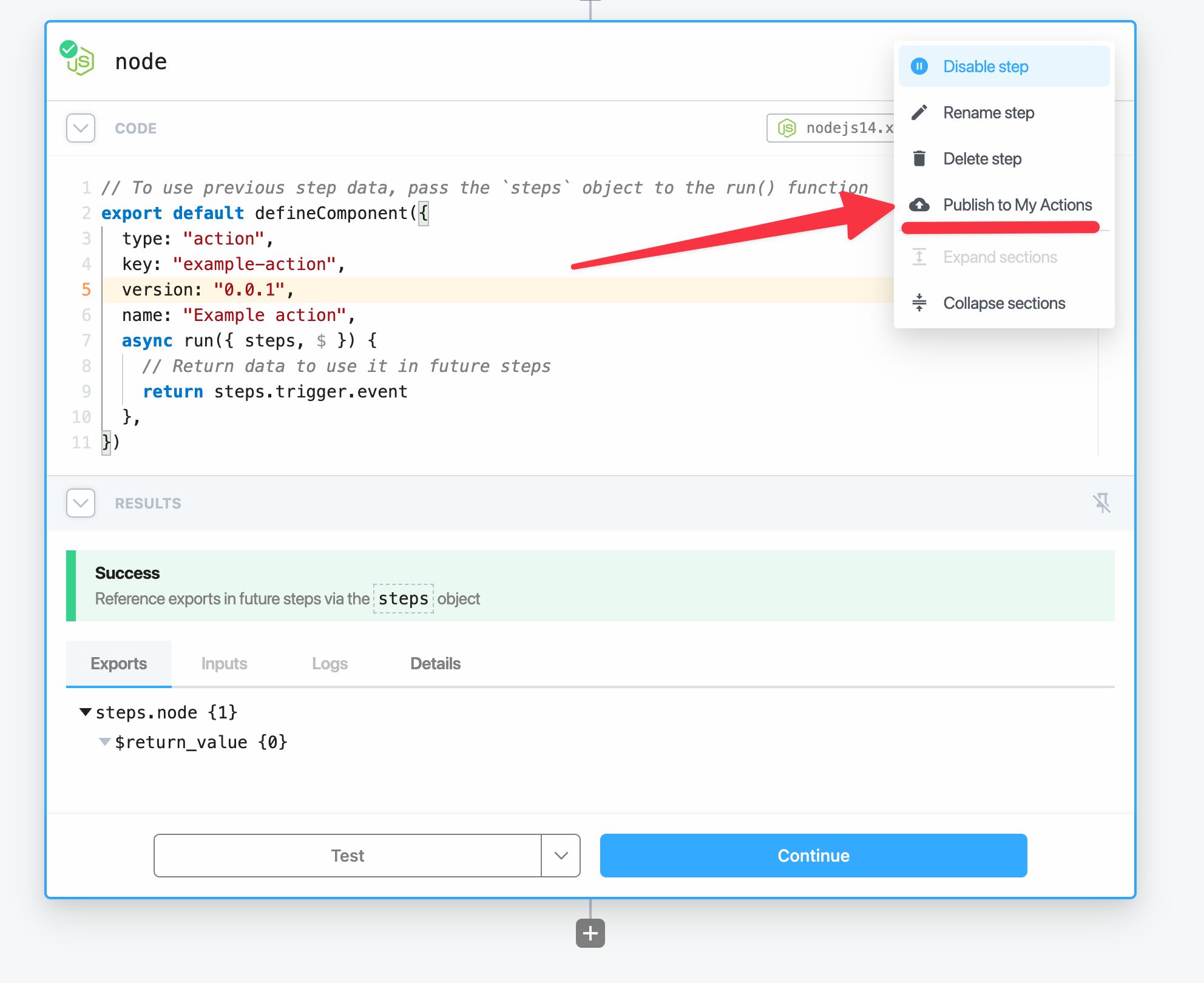

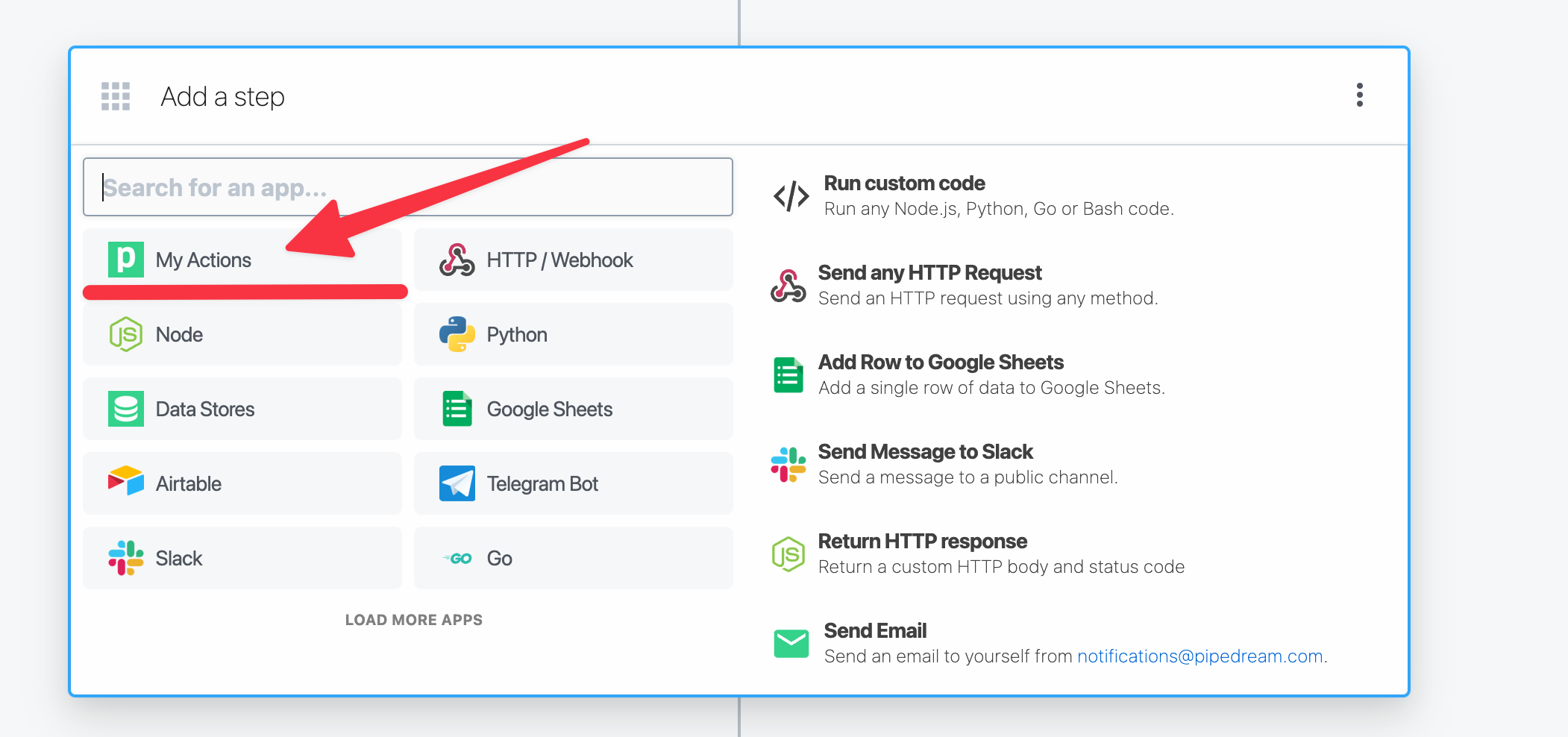

Next, let’s update the action in the workflow from the previous example and run it.

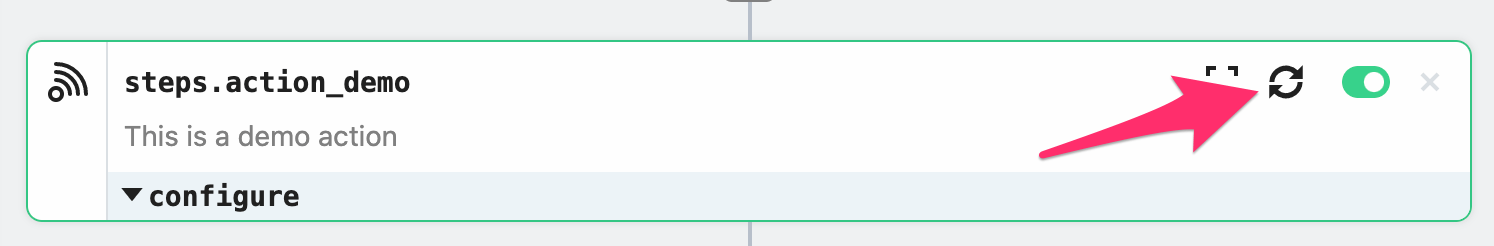

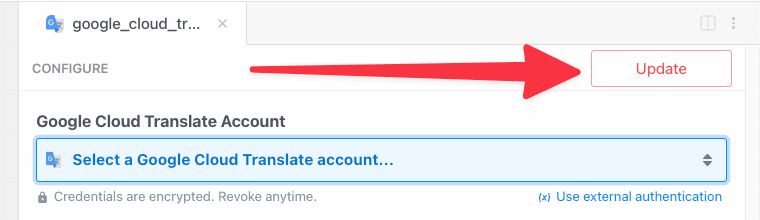

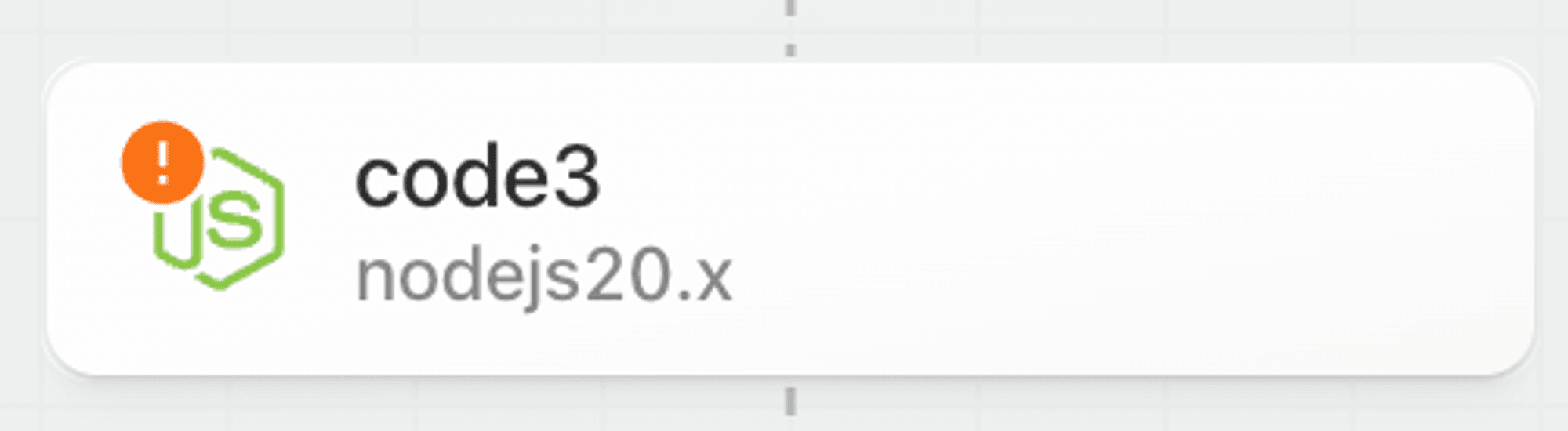

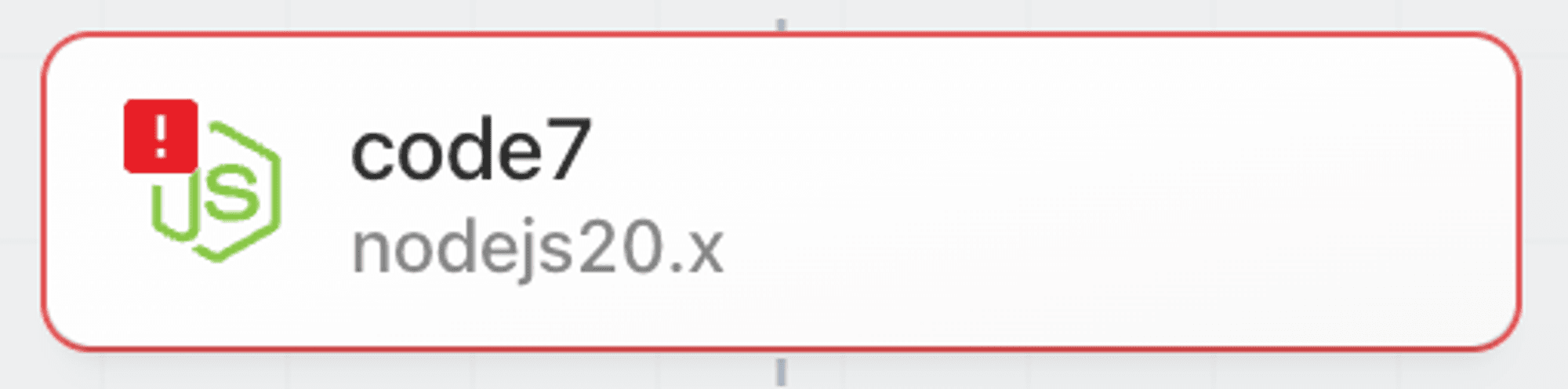

1. Hover over the action in your workflow. You should see an update icon at the top right. Click the icon to update the action to the latest version, and then save the workflow. If you don’t see the icon, verify that the CLI successfully published the update, or try refreshing the page.

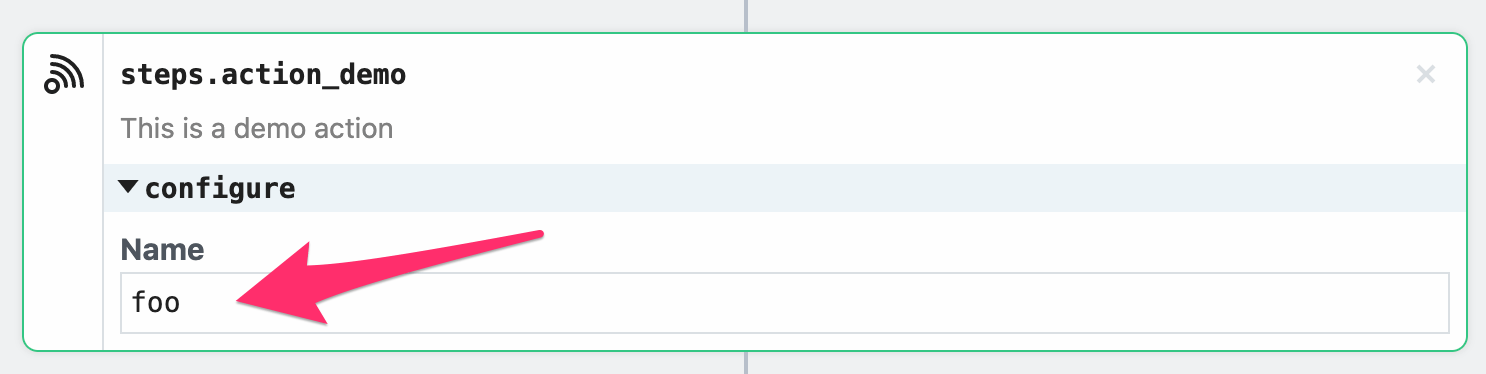

2. After saving the workflow, you should see an input field appear. Enter a value for the `Name` input (e.g., `foo`).

3. Deploy the workflow and click **RUN NOW**

You should see `hello foo!` (or the value you entered for `Name`) as the value returned by the step.

### Use an npm Package

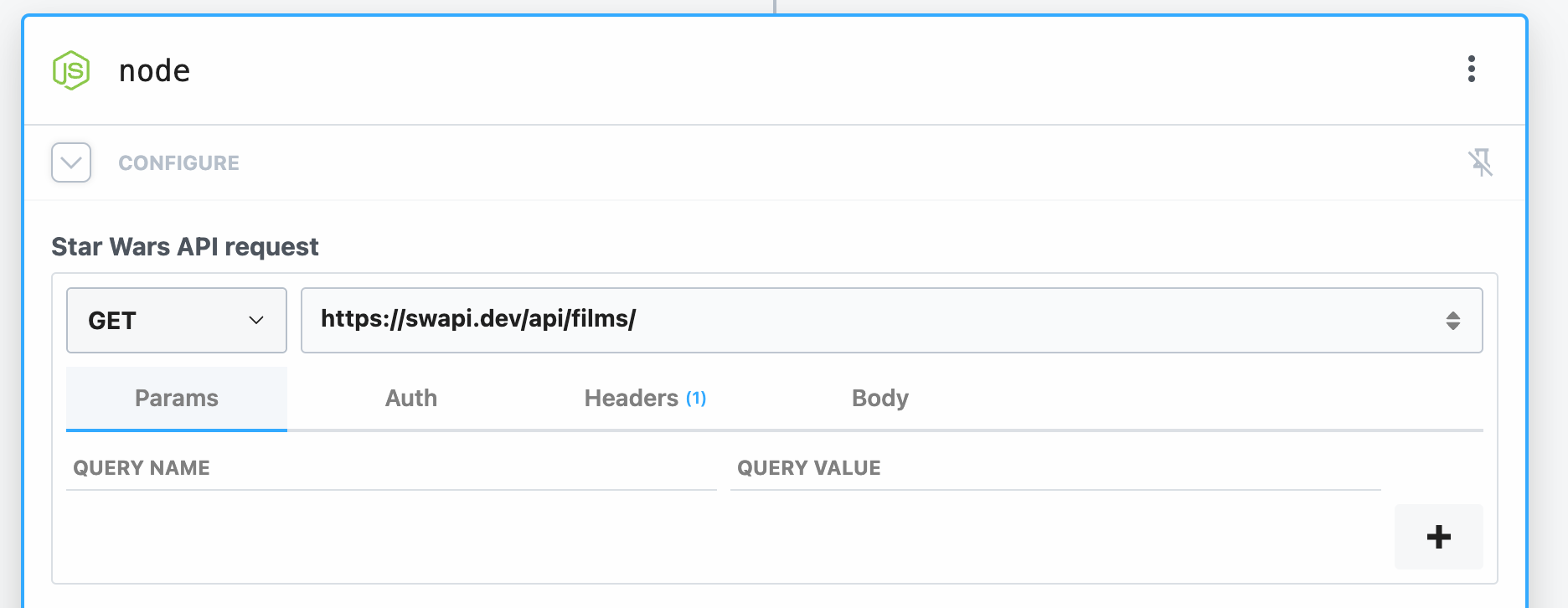

Next, we’ll update the component to get data from the Star Wars API using the `axios` npm package. To use the package, just `import` it. The example below imports the `axios` export from the `@pipedream/platform` package.

```javascript
import { axios } from "@pipedream/platform";

export default {
  name: "Action Demo",
  description: "This is a demo action",
  key: "action_demo",
  version: "0.0.2",
  type: "action",
  props: {
    name: {
      type: "string",
      label: "Name",
    },
  },
  async run() {
    return `hello ${this.name}!`;
  },
};
```
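
The block above only imports `axios`; it does not call it yet. As a rough sketch of where this is heading, the snippet below fetches a record inside `run()` and returns a field from it. To keep it runnable without network access or the `@pipedream/platform` package, the HTTP call is a stub: `fetchJson`, the `https://swapi.dev/api/people/1/` URL, the canned response and the `0.0.3` version are all assumptions made for illustration.

```javascript
// Stand-in for an HTTP client call (an assumption for this sketch):
// returns canned data instead of making a network request.
async function fetchJson(url) {
  const canned = {
    "https://swapi.dev/api/people/1/": { name: "Luke Skywalker" },
  };
  return canned[url];
}

const actionDemo = {
  name: "Action Demo",
  description: "This is a demo action",
  key: "action_demo",
  version: "0.0.3",
  type: "action",
  props: {
    name: {
      type: "string",
      label: "Name",
    },
  },
  async run() {
    // Await the (stubbed) request, then use a field from the response.
    const person = await fetchJson("https://swapi.dev/api/people/1/");
    return `hello ${this.name}, meet ${person.name}!`;
  },
};

// Call run() with `this` holding the configured prop value.
actionDemo.run.call({ name: "foo" }).then((result) => console.log(result));
```

In a real component, the stub would be replaced by a call through the imported HTTP client; the structure of `run()` stays the same.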

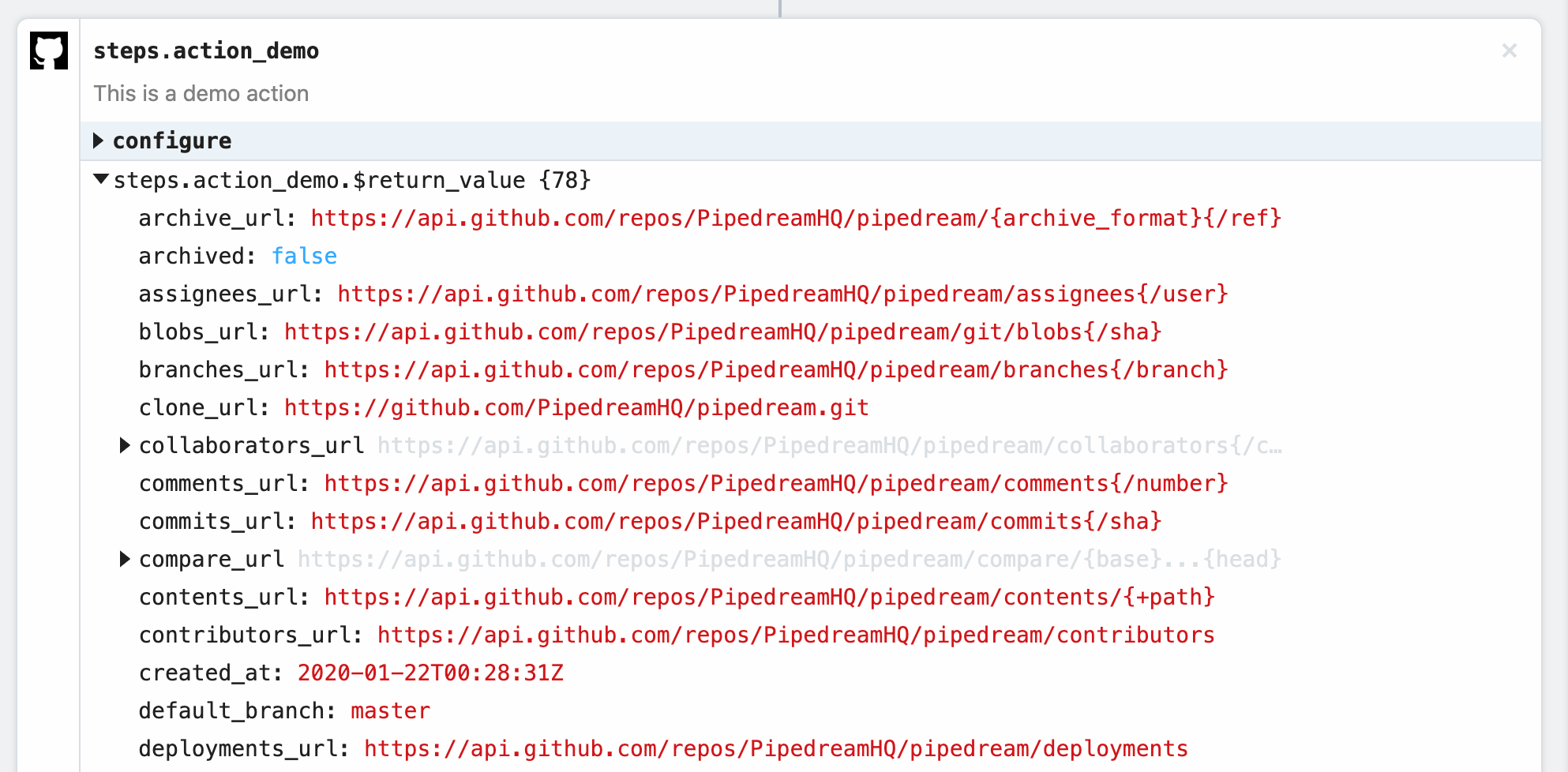

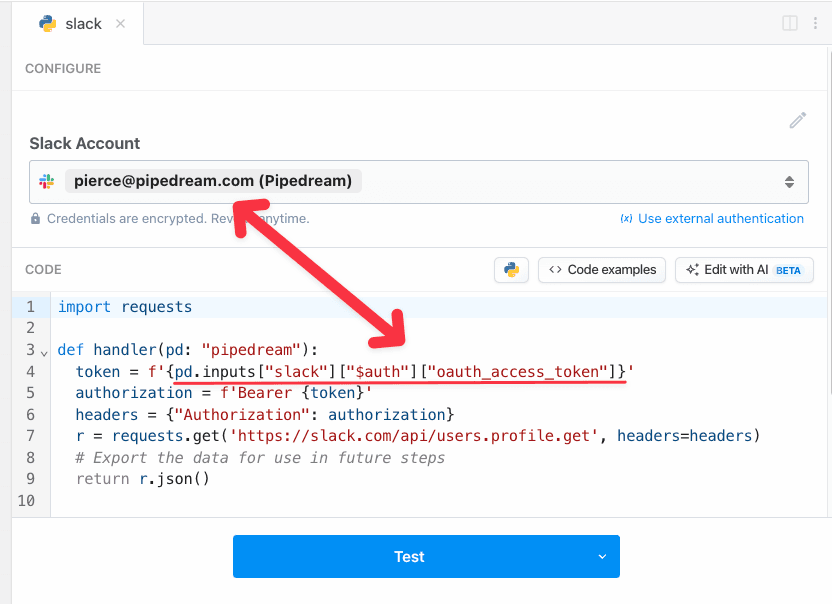

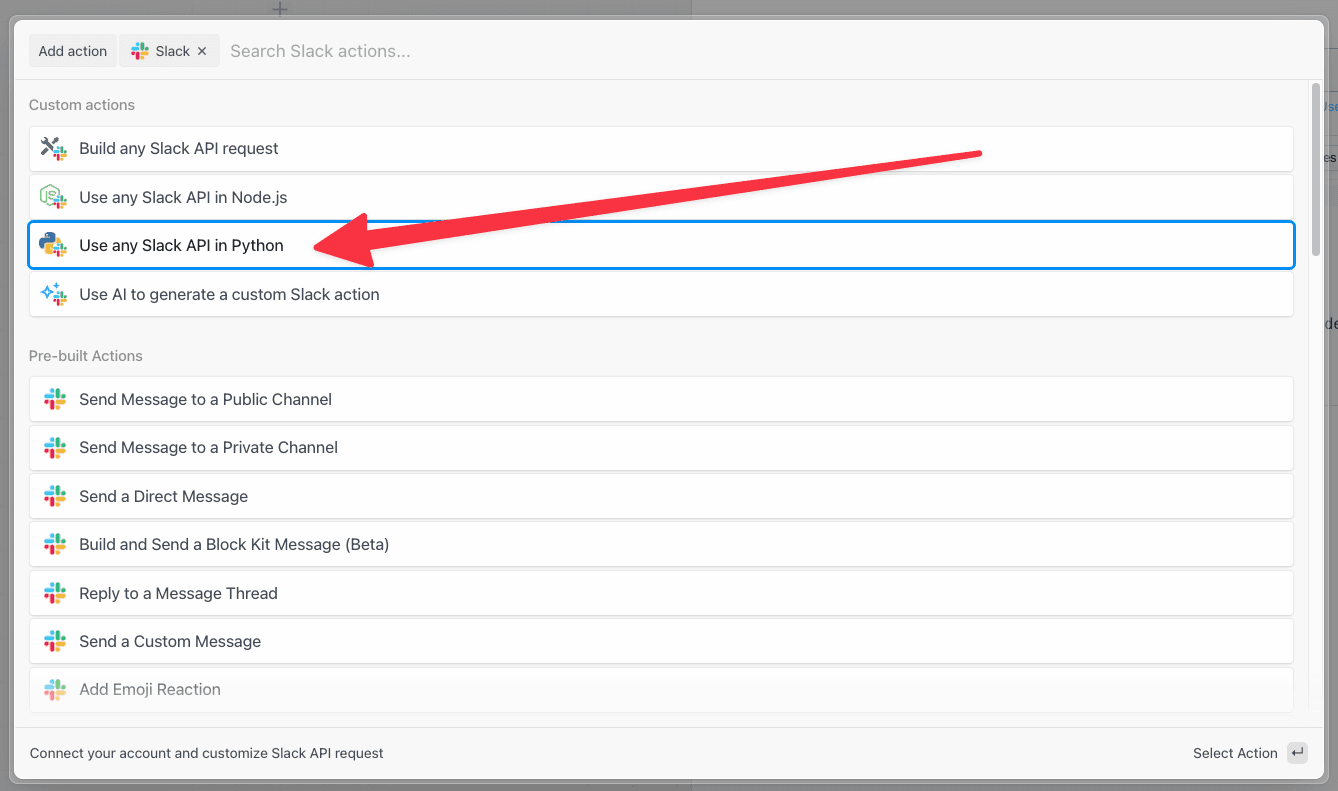

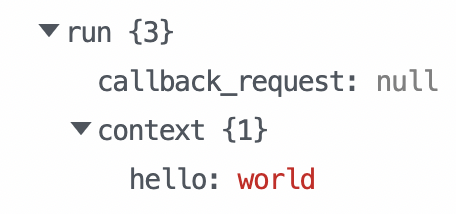

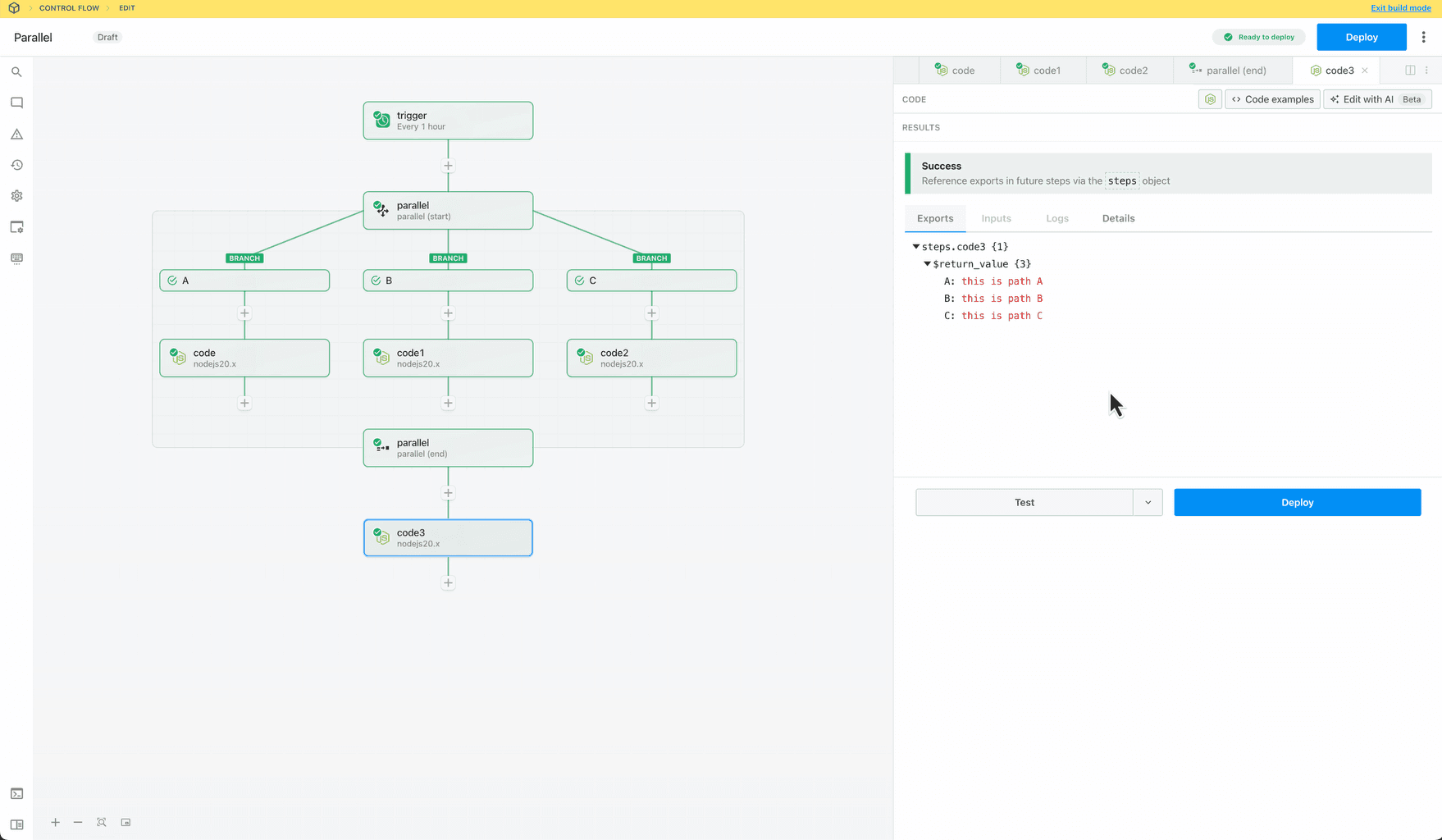

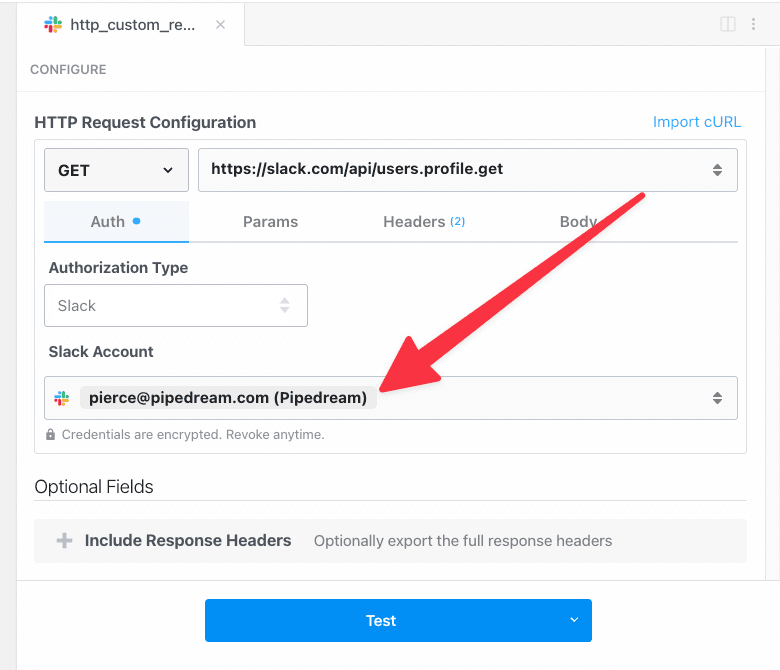

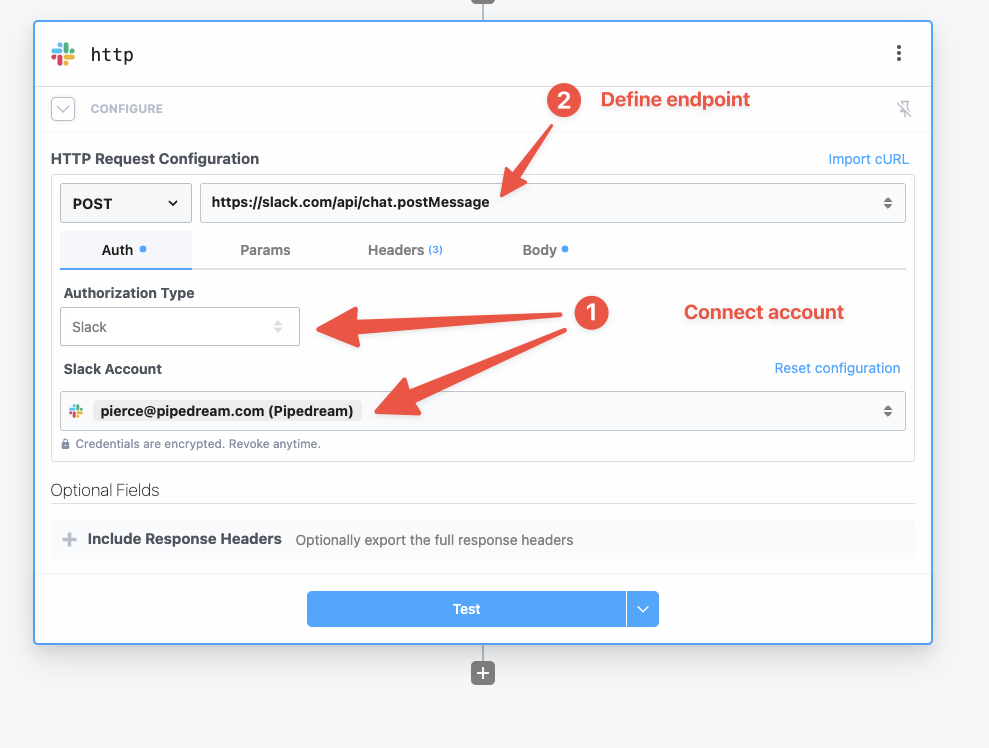

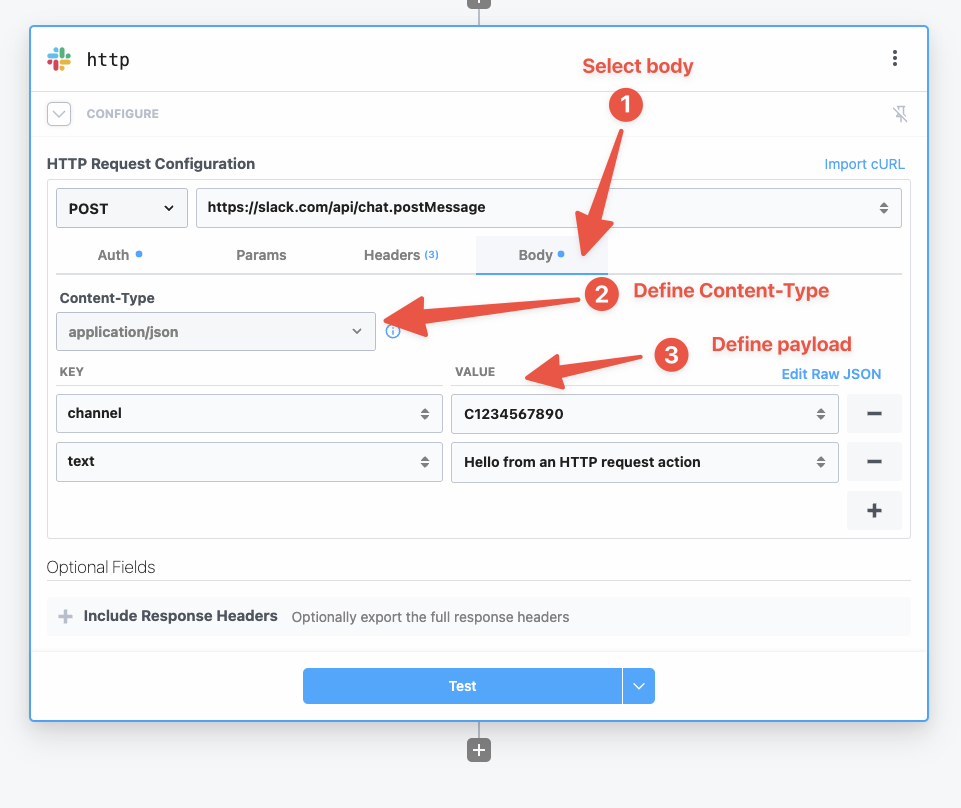

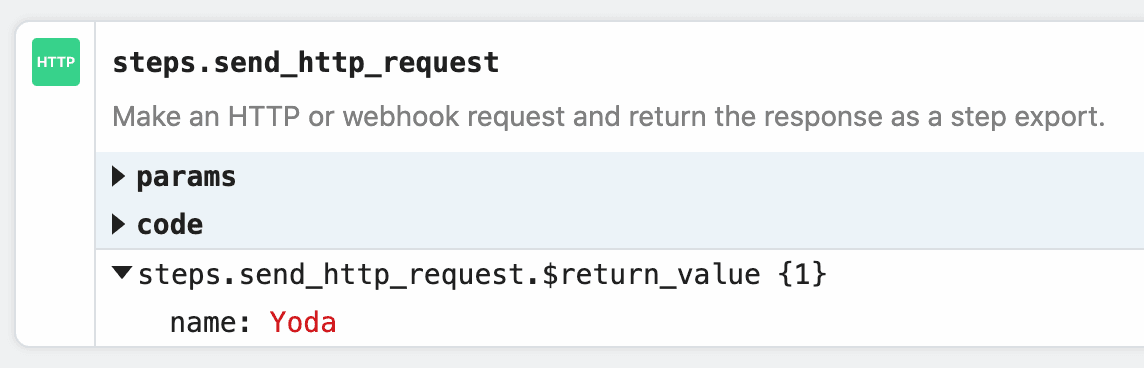

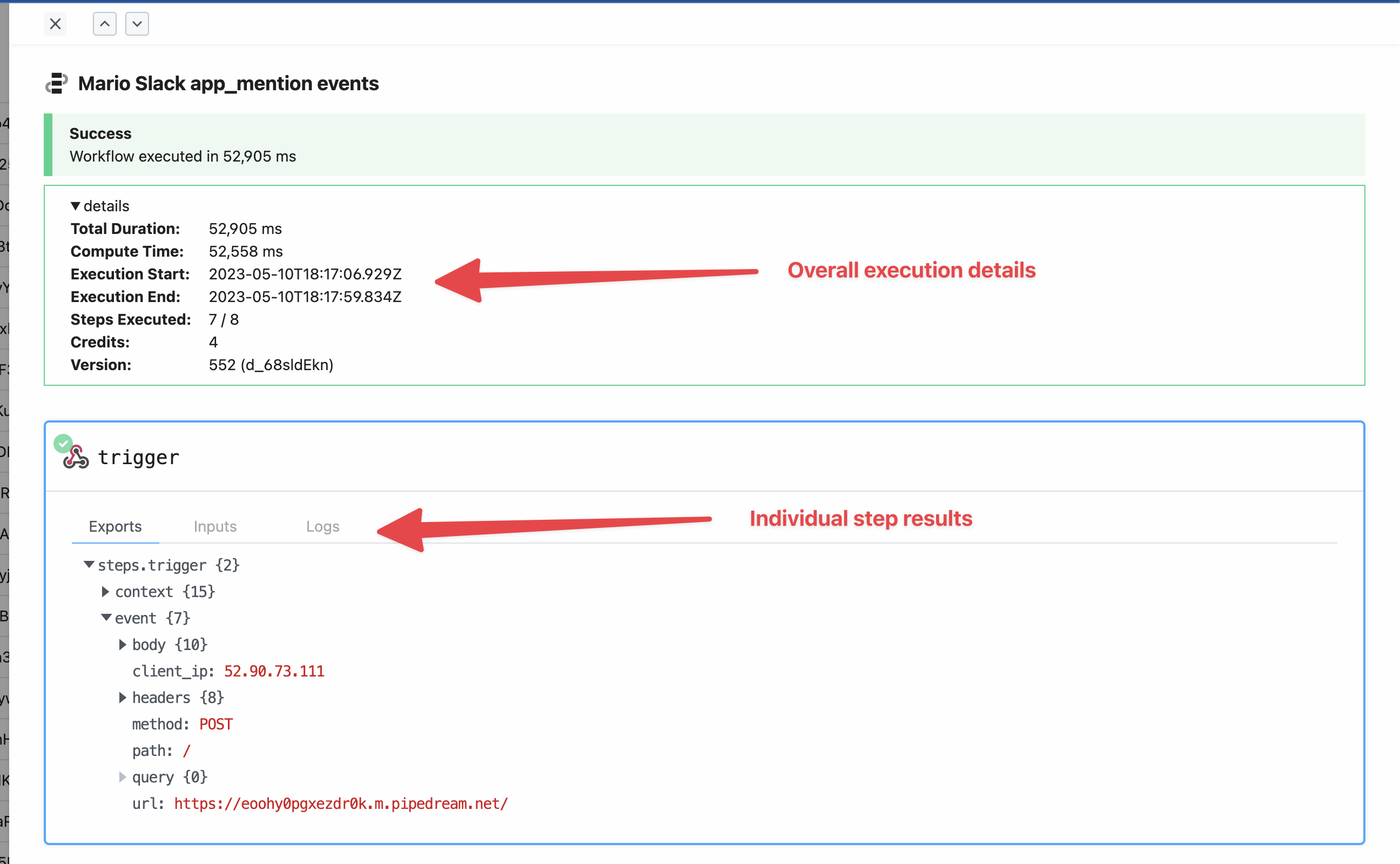

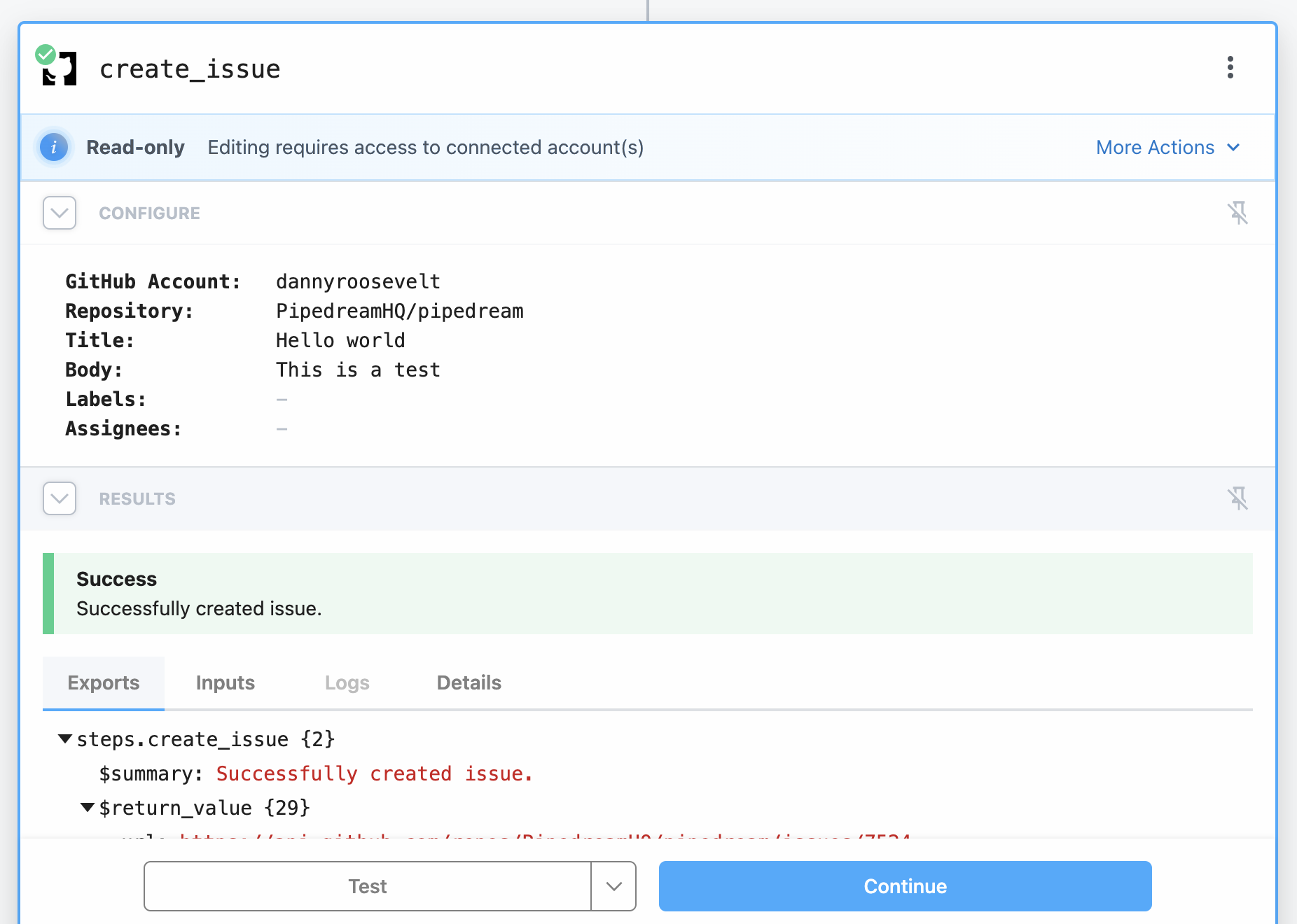

Select an existing account or connect a new one, and then deploy your workflow and click **RUN NOW**. You should see the results returned by the action:

## What’s Next?

You’re ready to start authoring and publishing actions on Pipedream! You can also check out the [detailed component reference](/docs/components/contributing/api/#component-api) at any time!

If you have any questions or feedback, please [reach out](https://pipedream.com/community)!

# Component API Reference

Source: https://pipedream.com/docs/components/contributing/api

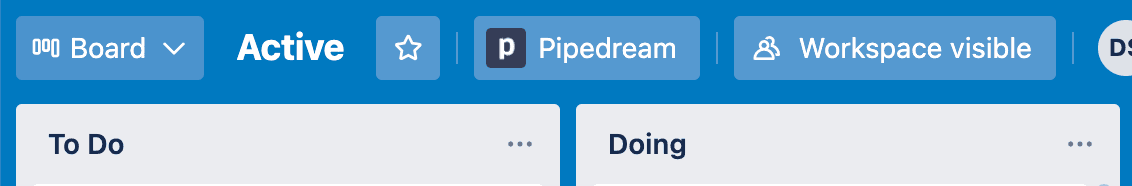

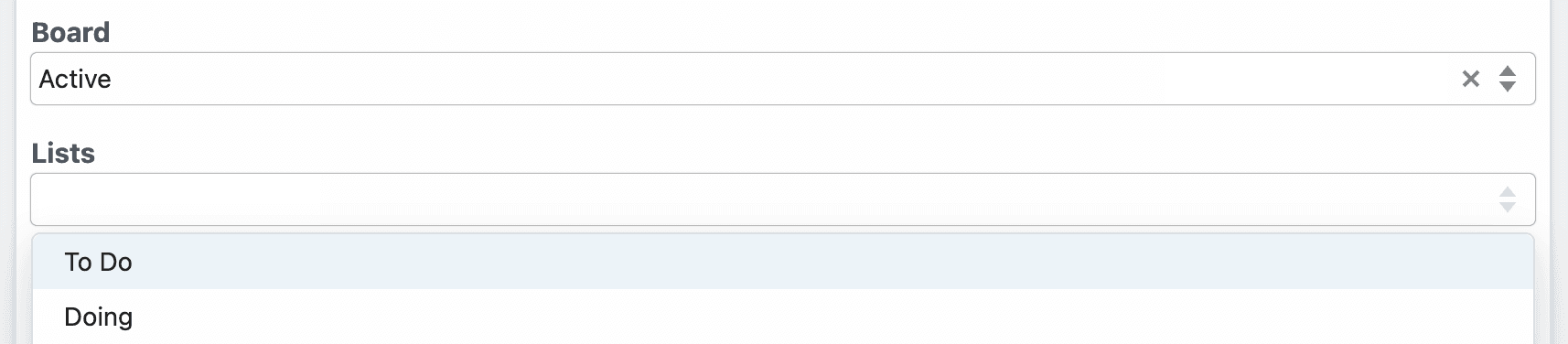

In Pipedream, users can choose from lists on a specific board:

Both **Board** and **Lists** are defined in the Trello app file:

```javascript
board: {
  type: "string",
  label: "Board",
  async options(opts) {
    const boards = await this.getBoards(this.$auth.oauth_uid);
    const activeBoards = boards.filter((board) => board.closed === false);
    return activeBoards.map((board) => {
      return { label: board.name, value: board.id };
    });
  },
},
lists: {
  type: "string[]",
  label: "Lists",
  optional: true,
  async options(opts) {
    const lists = await this.getLists(opts.board);
    return lists.map((list) => {
      return { label: list.name, value: list.id };
    });
  },
},
```

In the `lists` prop, notice how `opts.board` references the board. You can pass `opts` to the prop’s `options` method when you reference `propDefinitions` in specific components:

```javascript
board: { propDefinition: [trello, "board"] },
lists: {
  propDefinition: [
    trello,
    "lists",
    (configuredProps) => ({ board: configuredProps.board }),
  ],
},
```

`configuredProps` contains the props the user previously configured (the board). This allows the `lists` prop to use it in the `options` method.
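
The hand-off between the two props can be sketched outside Pipedream. In the snippet below, the `trello` object is an in-memory stand-in for the app file, `FAKE_LISTS` is made-up data, and `resolveOptions` is a hypothetical helper that mimics the flow conceptually. None of these are Pipedream APIs.

```javascript
// Made-up data standing in for the Trello API.
const FAKE_LISTS = [
  { id: "l1", name: "To Do", idBoard: "b1" },
  { id: "l2", name: "Done", idBoard: "b1" },
  { id: "l3", name: "Backlog", idBoard: "b2" },
];

// In-memory stand-in for the app file.
const trello = {
  propDefinitions: {
    lists: {
      type: "string[]",
      label: "Lists",
      optional: true,
      async options(opts) {
        // `opts.board` is supplied by the component that references this prop.
        const lists = await this.getLists(opts.board);
        return lists.map((list) => ({ label: list.name, value: list.id }));
      },
    },
  },
  methods: {
    async getLists(boardId) {
      return FAKE_LISTS.filter((list) => list.idBoard === boardId);
    },
  },
};

// The component-side reference, written as in the example above.
const listsProp = {
  propDefinition: [
    trello,
    "lists",
    (configuredProps) => ({ board: configuredProps.board }),
  ],
};

// Hypothetical helper: maps the configured props to `opts`, then calls
// options() with `this` bound to the app's methods.
function resolveOptions(prop, configuredProps) {
  const [app, propName, toOpts] = prop.propDefinition;
  const opts = toOpts ? toOpts(configuredProps) : {};
  return app.propDefinitions[propName].options.call(app.methods, opts);
}

resolveOptions(listsProp, { board: "b1" }).then((options) => {
  console.log(options); // the two lists that belong to board "b1"
});
```

The third element of the `propDefinition` array is the only place where the component decides which configured values the app-level `options` method gets to see.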

##### Dynamic props

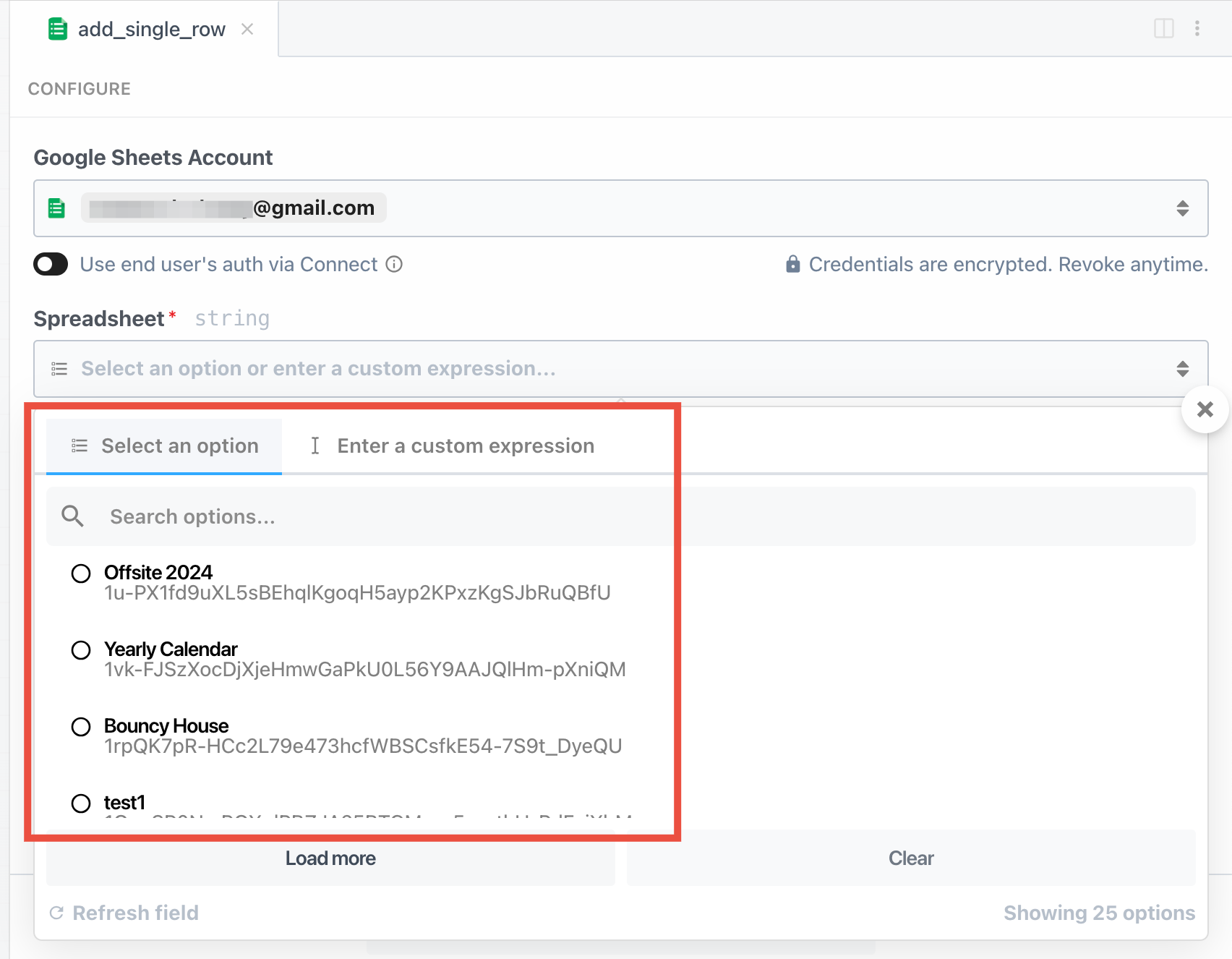

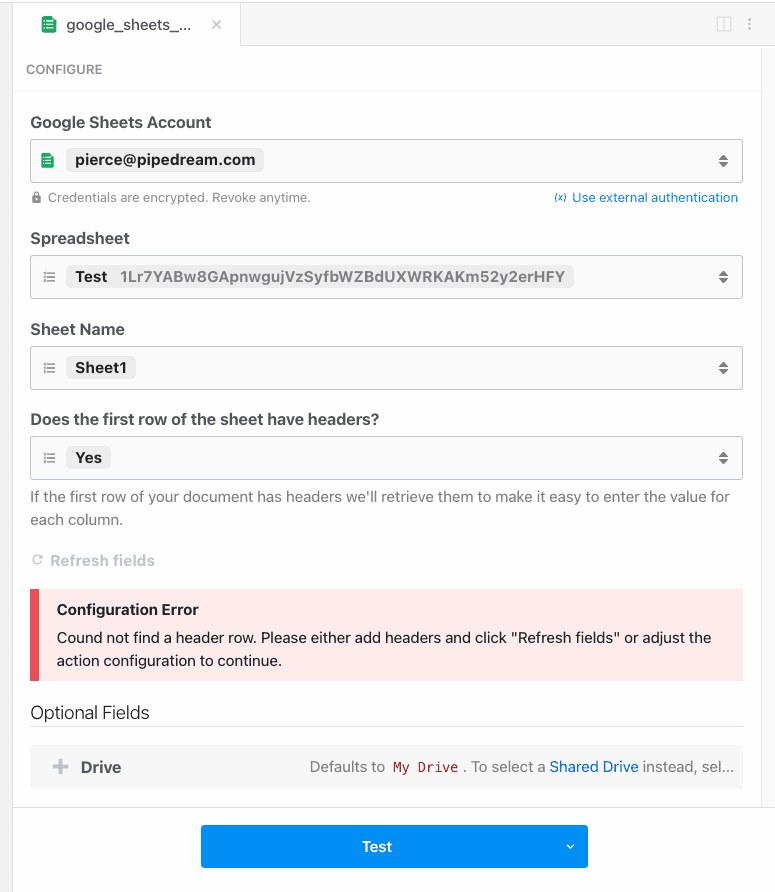

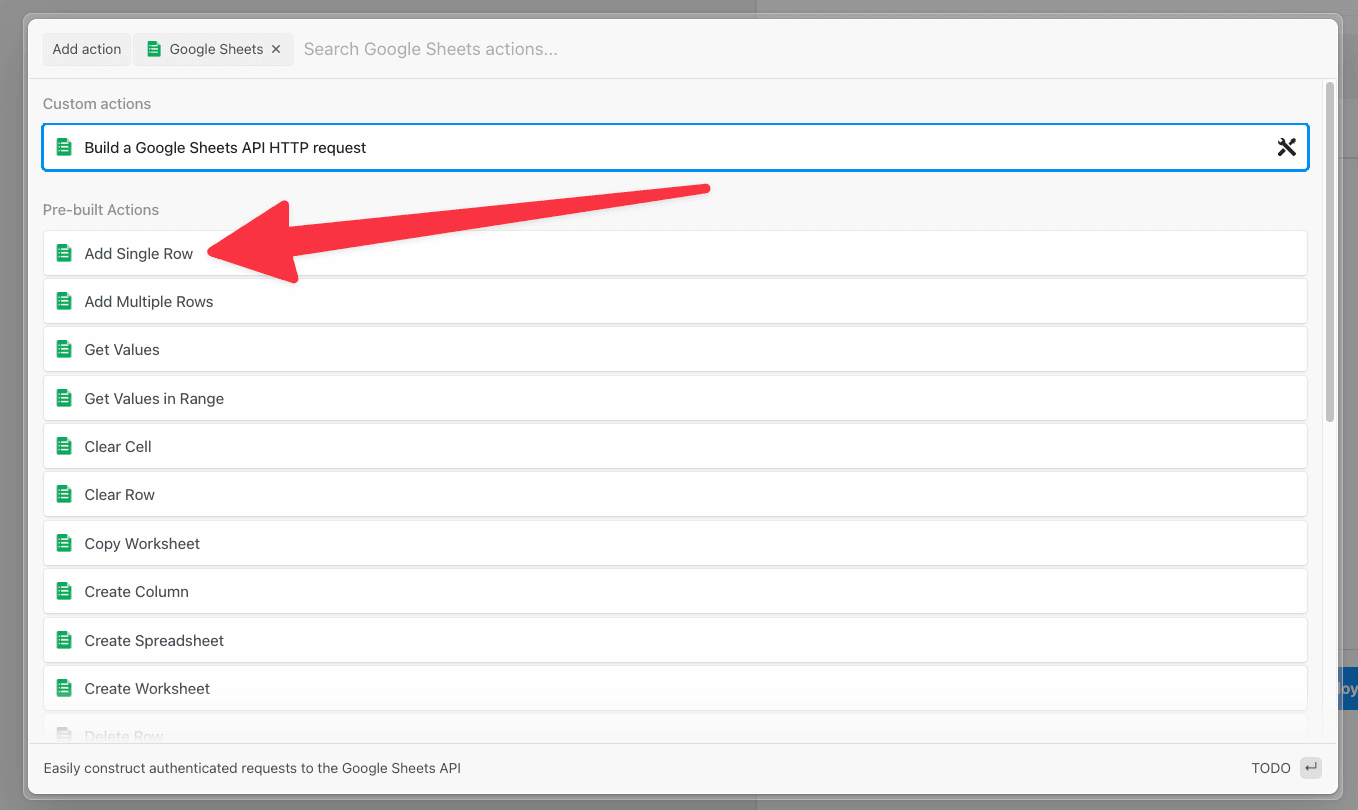

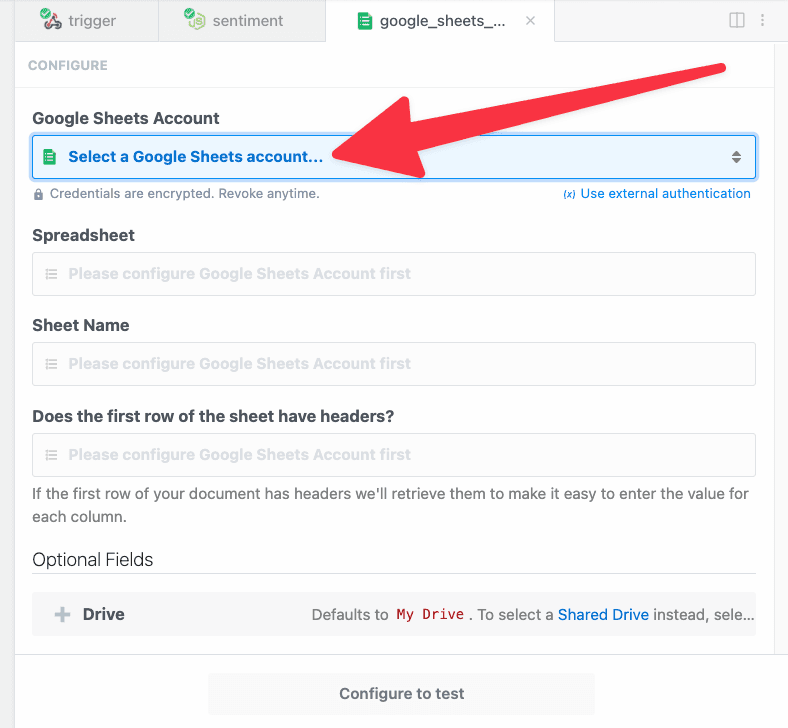

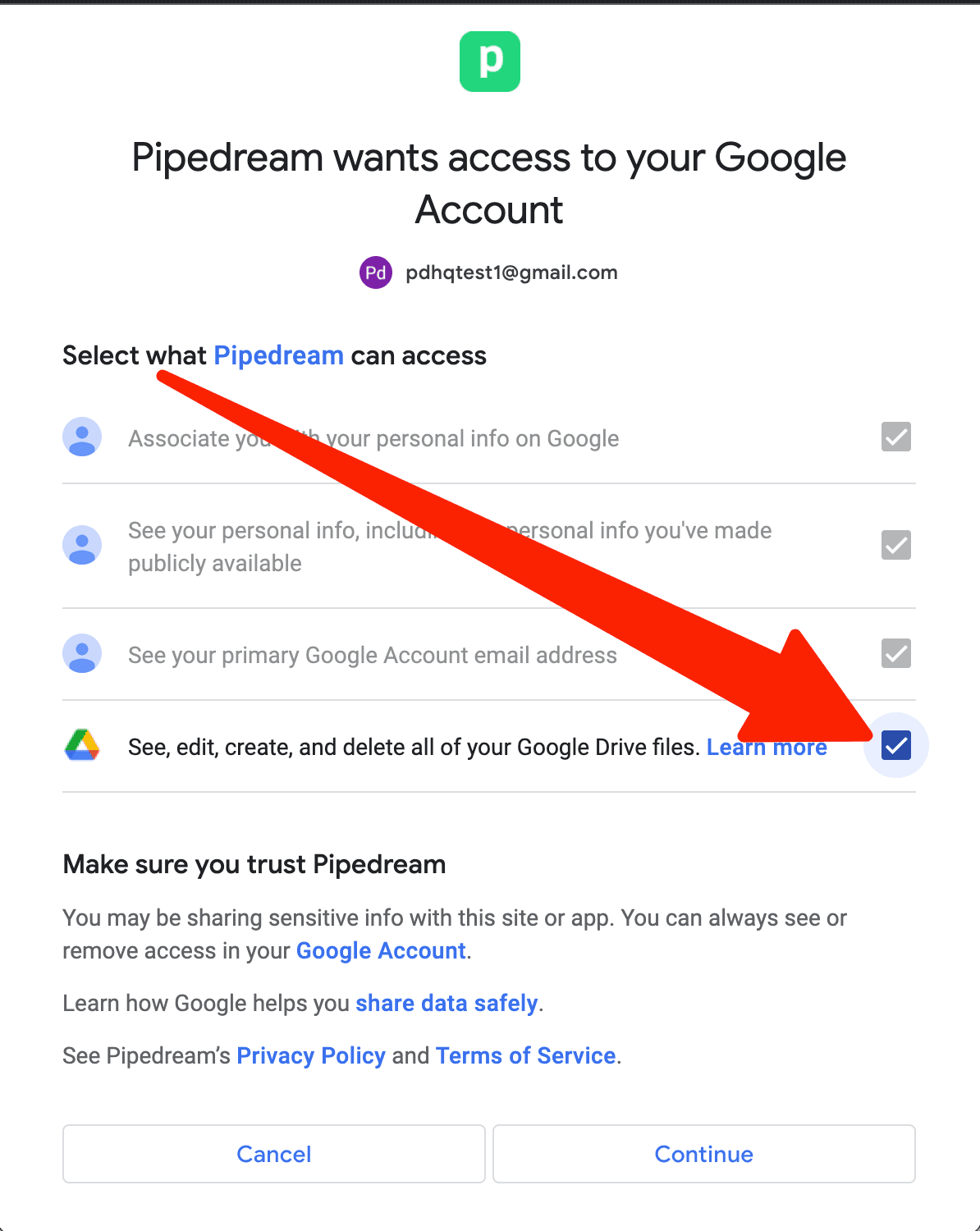

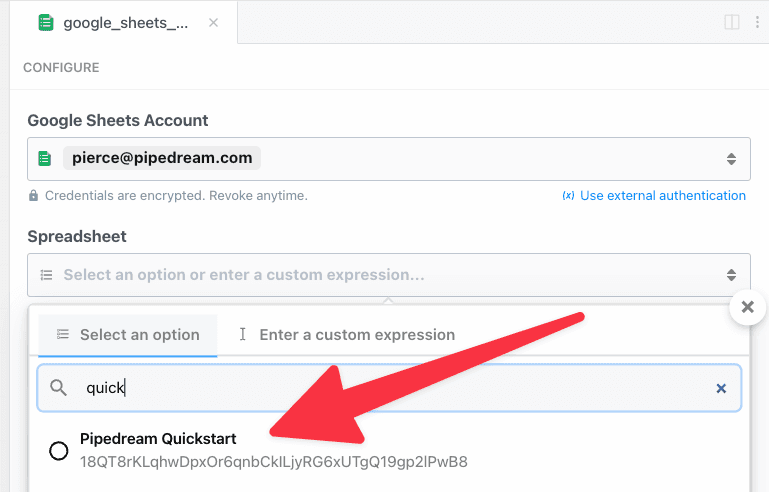

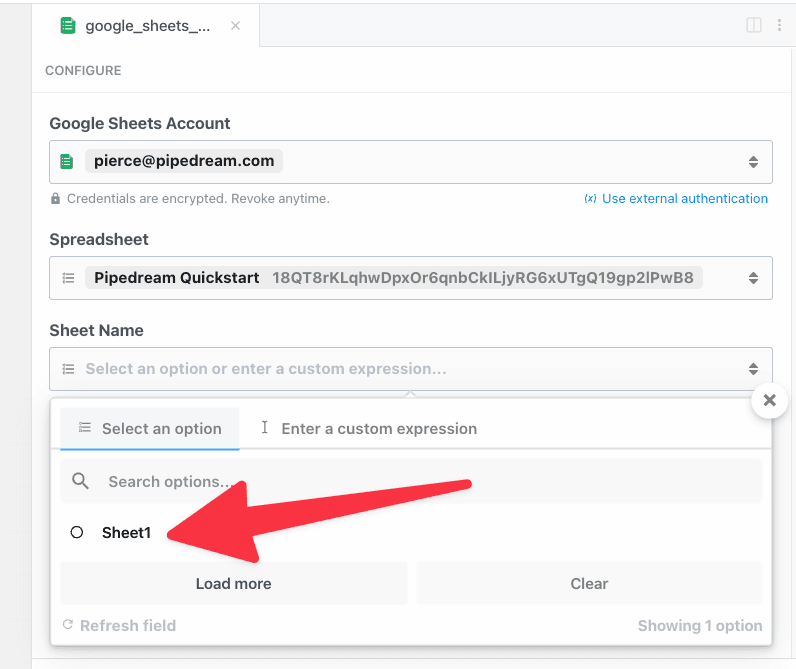

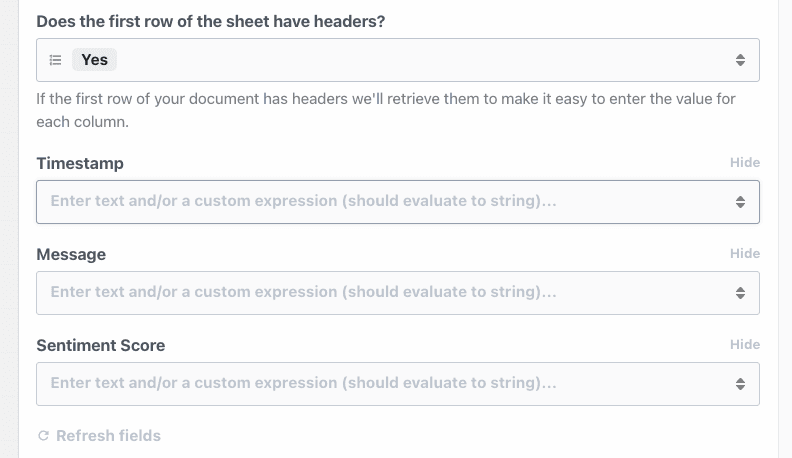

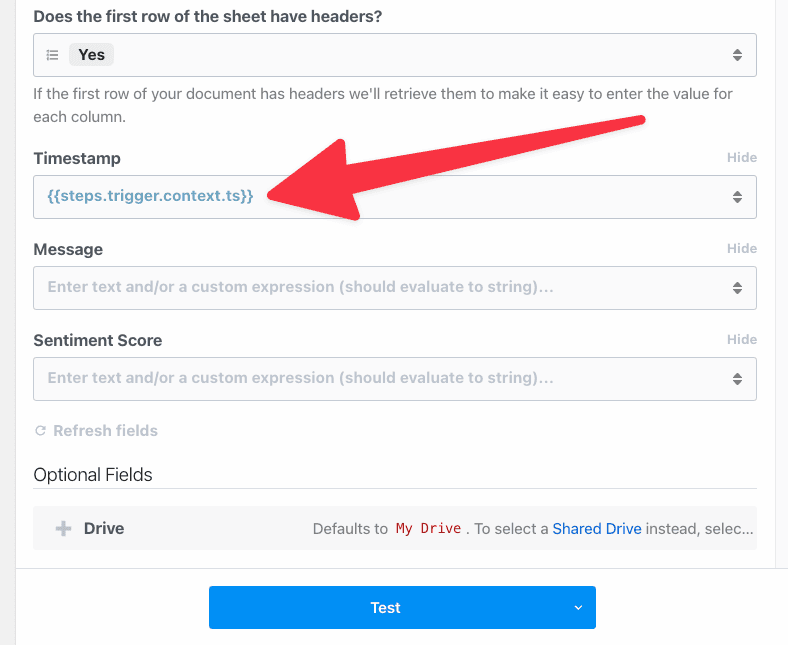

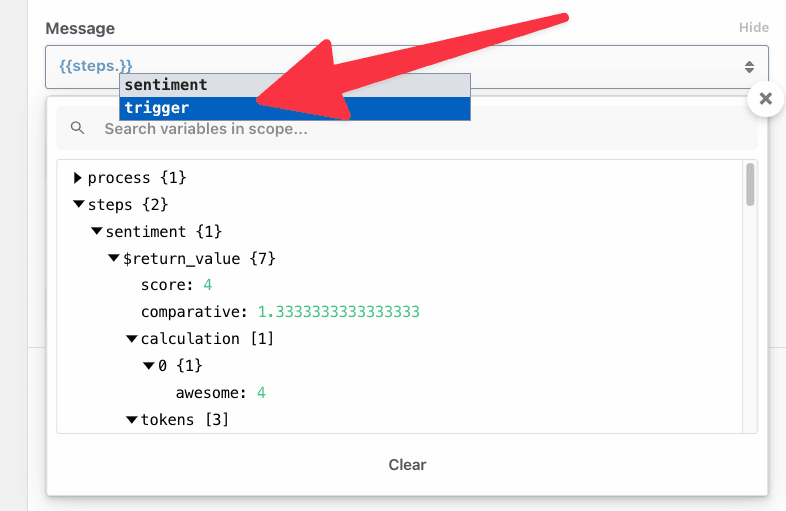

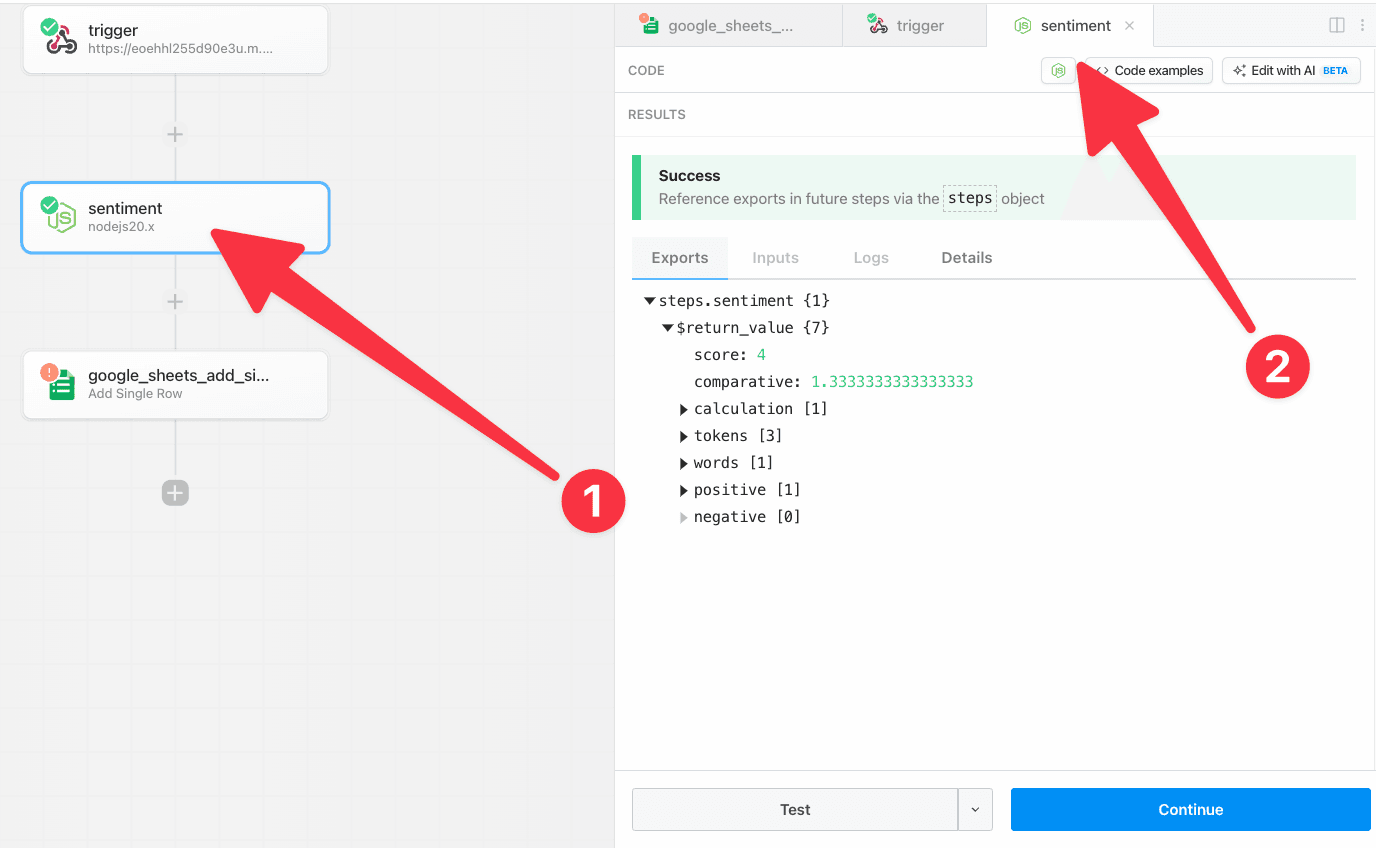

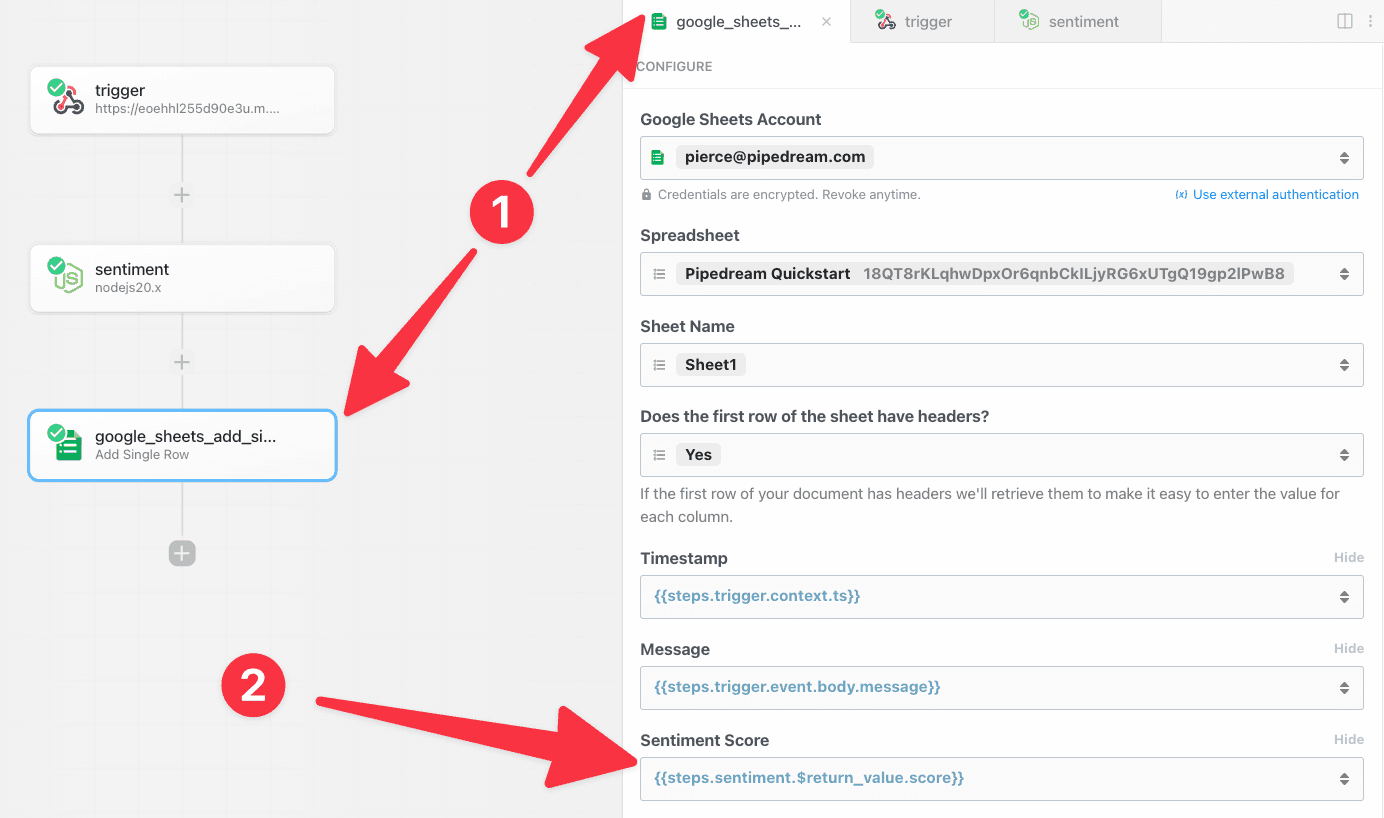

Some prop definitions must be computed dynamically, after the user configures another prop. We call these **dynamic props**, since they are rendered on-the-fly. This technique is used in [the Google Sheets **Add Single Row** action](https://github.com/PipedreamHQ/pipedream/blob/master/components/google_sheets/actions/add-single-row/add-single-row.mjs), which we’ll use as an example below.

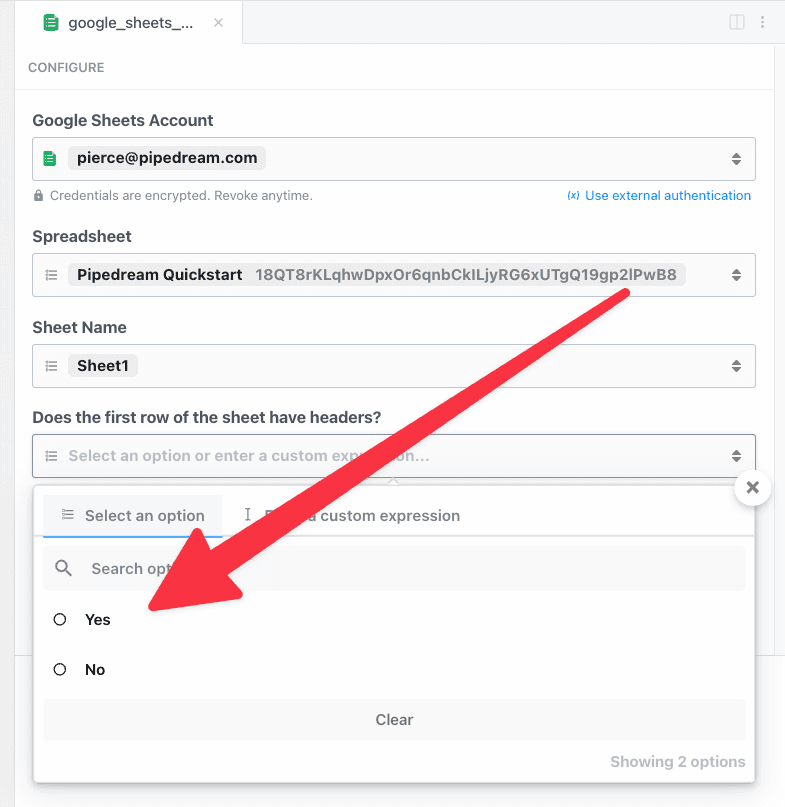

First, determine the prop whose selection should render dynamic props. In the Google Sheets example, we ask the user whether their sheet contains a header row. If it does, we display header fields as individual props:

To load dynamic props, the header prop must have the `reloadProps` field set to `true`:

```javascript
hasHeaders: {
  type: "string",
  label: "Does the first row of the sheet have headers?",
  description: "If the first row of your document has headers we'll retrieve them to make it easy to enter the value for each column.",
  options: [
    "Yes",
    "No",
  ],
  reloadProps: true,
},
```

When a user chooses a value for this prop, Pipedream runs the `additionalProps` component method to render props:

```javascript
// Assumes the component file imports ConfigurationError from "@pipedream/platform".
async additionalProps() {
  const sheetId = this.sheetId?.value || this.sheetId;
  const props = {};
  if (this.hasHeaders === "Yes") {
    // Read the first row of the sheet to use as column labels.
    const { values } = await this.googleSheets.getSpreadsheetValues(sheetId, `${this.sheetName}!1:1`);
    if (!values[0]?.length) {
      throw new ConfigurationError("Could not find a header row. Please either add headers and click \"Refresh fields\" or adjust the action configuration to continue.");
    }
    // One optional string prop per header cell, keyed col_0000, col_0001, ...
    for (let i = 0; i < values[0]?.length; i++) {
      props[`col_${i.toString().padStart(4, "0")}`] = {
        type: "string",
        label: values[0][i],
        optional: true,
      };
    }
  } else if (this.hasHeaders === "No") {
    props.myColumnData = {
      type: "string[]",
      label: "Values",
      description: "Provide a value for each cell of the row. Google Sheets accepts strings, numbers and boolean values for each cell. To set a cell to an empty value, pass an empty string.",
    };
  }
  return props;
},
```

The signature of this function is:

```javascript
async additionalProps(previousPropDefs)
```

where `previousPropDefs` is the full set of props (the component’s props merged with the result of the previous `additionalProps` call). When the function executes, `this` is bound as it is when `run` is called, so you can access the currently configured prop values and call any `methods`. The return value of `additionalProps` replaces the result of any previous call, and it is merged with the component’s props to define the final set of props.
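
The two ideas above, generating one prop per header and merging the result with the static props, can be sketched in plain JavaScript. `headerProps` and `mergeProps` are hypothetical helpers written for this illustration. The key format follows the Google Sheets example, and the shallow merge is an assumption about how the final prop set is formed.

```javascript
// Build one optional string prop per header cell, keyed col_0000, col_0001, ...
function headerProps(headers) {
  const props = {};
  headers.forEach((label, i) => {
    props[`col_${i.toString().padStart(4, "0")}`] = {
      type: "string",
      label,
      optional: true,
    };
  });
  return props;
}

// Assumed merge behavior: static props plus the latest additionalProps result.
function mergeProps(staticProps, additionalProps) {
  return { ...staticProps, ...additionalProps };
}

const staticProps = { hasHeaders: { type: "string", reloadProps: true } };

// First reload: the sheet has two header cells.
const first = mergeProps(staticProps, headerProps(["Name", "Email"]));
// Second reload: the sheet now has one header cell. The earlier result is
// replaced, not accumulated, so col_0001 is gone.
const second = mergeProps(staticProps, headerProps(["Name"]));

console.log(Object.keys(first)); // [ 'hasHeaders', 'col_0000', 'col_0001' ]
console.log(Object.keys(second)); // [ 'hasHeaders', 'col_0000' ]
```

Zero-padding the index keeps the generated keys in column order when they are sorted as strings.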

Following is an example that demonstrates how to use `additionalProps` to dynamically change a prop’s `disabled` and `hidden` properties:

```javascript
async additionalProps(previousPropDefs) {
  if (this.myCondition === "Yes") {
    previousPropDefs.myPropName.disabled = true;
    previousPropDefs.myPropName.hidden = true;
  } else {
    previousPropDefs.myPropName.disabled = false;
    previousPropDefs.myPropName.hidden = false;
  }
  return previousPropDefs;
},
```
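
The toggle above can be exercised locally with a small harness. This is a sketch under stated assumptions: `reloadProps` here is a hypothetical function (not the `reloadProps` prop field), and it only imitates the idea of calling `additionalProps` with the current prop definitions and configured values.

```javascript
const component = {
  props: {
    myCondition: { type: "string", options: ["Yes", "No"], reloadProps: true },
    myPropName: { type: "string", label: "My Prop" },
  },
  async additionalProps(previousPropDefs) {
    // Hide and disable the prop only when the condition is "Yes".
    const hide = this.myCondition === "Yes";
    previousPropDefs.myPropName.disabled = hide;
    previousPropDefs.myPropName.hidden = hide;
    return previousPropDefs;
  },
};

// Hypothetical harness: copies the prop definitions so each call starts clean,
// then calls additionalProps() with `this` holding the configured values.
function reloadProps(comp, configuredProps) {
  const propDefs = JSON.parse(JSON.stringify(comp.props));
  return comp.additionalProps.call({ ...configuredProps }, propDefs);
}

reloadProps(component, { myCondition: "Yes" }).then((defs) => {
  console.log(defs.myPropName.hidden); // true
});
reloadProps(component, { myCondition: "No" }).then((defs) => {
  console.log(defs.myPropName.hidden); // false
});
```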

Dynamic props can have any one of the following prop types:

* `app`

* `boolean`

* `integer`

* `string`

* `object`

* `any`

* `$.interface.http`

* `$.interface.timer`

* `data_store`

* `http_request`

#### Interface Props

Interface props are infrastructure abstractions provided by the Pipedream platform. They declare how a source is invoked — via HTTP request, run on a schedule, etc. — and therefore define the shape of the events it processes.

| Interface Type                                    | Description                                                     |
| ------------------------------------------------- | --------------------------------------------------------------- |
| [Timer](/docs/components/contributing/api/#timer) | Invoke your source on an interval or based on a cron expression |
| [HTTP](/docs/components/contributing/api/#http)   | Invoke your source on HTTP requests                             |

##### Timer

To use the timer interface, declare a prop whose value is the string `$.interface.timer`:

**Definition**

```javascript
props: {
  myPropName: {
    type: "$.interface.timer",
    default: {},
  },
}
```

| Property  | Type     | Required? | Description |
| --------- | -------- | --------- | ----------- |
| `type`    | `string` | required  | Must be set to `$.interface.timer` |
| `default` | `object` | optional  | Define a default interval, e.g. `{ intervalSeconds: 60 }`, or a default cron expression, e.g. `{ cron: "0 0 * * *" }` |

**Usage**

| Code              | Description | Read Scope                | Write Scope |
| ----------------- | ----------- | ------------------------- | ----------- |
| `this.myPropName` | Returns the type of interface configured (e.g., `{ type: '$.interface.timer' }`) | `run()` `hooks` `methods` | n/a (interface props may only be modified on component deploy or update via UI, CLI or API) |
| `event`           | Returns an object with the execution timestamp and interface configuration (e.g., `{ "timestamp": 1593937896, "interval_seconds": 3600 }`) | `run(event)` | n/a (interface props may only be modified on source deploy or update via UI, CLI or API) |

**Example**

Following is a basic example of a source that is triggered by a `$.interface.timer` and has a `default` defined as a cron expression.

```javascript
export default {
  name: "Cron Example",
  version: "0.1",
  props: {
    timer: {
      type: "$.interface.timer",
      default: {
        cron: "0 0 * * *", // Run job once a day
      },
    },
  },
  async run() {
    console.log("hello world!");
  },
};
```

Following is an example source that’s triggered by a `$.interface.timer` and has a `default` interval defined.

```javascript
export default {
  name: "Interval Example",
  version: "0.1",
  props: {
    timer: {
      type: "$.interface.timer",
      default: {
        intervalSeconds: 60 * 60 * 24, // Run job once a day
      },
    },
  },
  async run() {
    console.log("hello world!");
  },
};
```
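
Both timer examples ignore the `event` argument. As a small sketch of what a source can do with it, the function below formats the timer event shown in the usage table. `describeTimerEvent` is a hypothetical helper, and treating `timestamp` as Unix seconds is an assumption based on the example value.

```javascript
// Hypothetical helper: turns a timer event into a readable log line.
// Assumes `timestamp` is in Unix seconds, so it is scaled to milliseconds.
function describeTimerEvent(event) {
  const firedAt = new Date(event.timestamp * 1000).toISOString();
  return `fired at ${firedAt}, interval ${event.interval_seconds}s`;
}

// Written as a constant rather than an export so the snippet runs on its own.
const intervalSource = {
  name: "Interval Example",
  version: "0.1",
  props: {
    timer: {
      type: "$.interface.timer",
      default: {
        intervalSeconds: 60 * 60, // Run job once an hour
      },
    },
  },
  async run(event) {
    console.log(describeTimerEvent(event));
  },
};

// Simulate one invocation with the event shape from the usage table.
intervalSource.run({ timestamp: 1593937896, interval_seconds: 3600 });
// logs: fired at 2020-07-05T08:31:36.000Z, interval 3600s
```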

##### HTTP

To use the HTTP interface, declare a prop whose value is the string `$.interface.http`:

**Definition**

```javascript
props: {
  myPropName: {
    type: "$.interface.http",
    customResponse: true, // optional: defaults to false
  },
}
```

| Property  | Type     | Required? | Description                                                                                                  |
| --------- | -------- | --------- | ------------------------------------------------------------------------------------------------------------ |
| `type`    | `string` | required  | Must be set to `$.interface.http`                                                                            |
| `respond` | `method` | required  | The HTTP interface exposes a `respond()` method that lets your component issue HTTP responses to the client. |

**Usage**

| Code                        | Description | Read Scope                | Write Scope |
| --------------------------- | ----------- | ------------------------- | ----------- |
| `this.myPropName`           | Returns an object with the unique endpoint URL generated by Pipedream (e.g., `{ endpoint: 'https://abcde.m.pipedream.net' }`) | `run()` `hooks` `methods` | n/a (interface props may only be modified on source deploy or update via UI, CLI or API) |
| `event`                     | Returns an object representing the HTTP request (e.g., `{ method: 'POST', path: '/', query: {}, headers: {}, bodyRaw: '', body: {}, }`) | `run(event)` | The shape of `event` corresponds with the HTTP request you make to the endpoint generated by Pipedream for this interface |
| `this.myPropName.respond()` | Returns an HTTP response to the client (e.g., `this.http.respond({status: 200})`). | n/a | `run()` |

###### Responding to HTTP requests

The HTTP interface exposes a `respond()` method that lets your source issue HTTP responses. You may run `this.http.respond()` to respond to the client from the `run()` method of a source. In this case you should also pass the `customResponse: true` parameter to the prop.

| Property  | Type                       | Required? | Description |
| --------- | -------------------------- | --------- | ----------- |
| `status`  | `integer`                  | required  | An integer representing the HTTP status code. Return `200` to indicate success. Standard status codes range from `100` - `599` |
| `headers` | `object`                   | optional  | Return custom key-value pairs in the HTTP response |
| `body`    | `string` `object` `buffer` | optional  | Return a custom body in the HTTP response. This can be any string, object, or Buffer. |

###### HTTP Event Shape

Following is the shape of the event passed to the `run()` method of your source:

```javascript
{
  method: 'POST',
  path: '/',
  query: {},
  headers: {},
  bodyRaw: '',
  body: {},
}
```

**Example**

Following is an example source that’s triggered by `$.interface.http` and returns `{ 'msg': 'hello world!' }` in the HTTP response. On deploy, Pipedream will generate a unique URL for this source:

```javascript
export default {
  name: "HTTP Example",
  version: "0.0.1",
  props: {
    http: {
      type: "$.interface.http",
      customResponse: true,
    },
  },
  async run(event) {
    this.http.respond({
      status: 200,
      body: {
        msg: "hello world!",
      },
      headers: {
        "content-type": "application/json",
      },
    });
    console.log(event);
  },
};
```
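
A source can also choose its response based on the incoming request. The sketch below is one way to do that, answering non-`POST` requests with a `405`. It runs outside Pipedream: `invoke` is a hypothetical harness that substitutes a recording stub for `this.http`, so it shows the control flow only, not the platform’s behavior.

```javascript
// Written as a constant rather than an export so the snippet runs on its own.
const httpSource = {
  name: "HTTP Example",
  version: "0.0.1",
  props: {
    http: {
      type: "$.interface.http",
      customResponse: true,
    },
  },
  async run(event) {
    // Reject anything that is not a POST, using the `method` field of the event.
    if (event.method !== "POST") {
      this.http.respond({
        status: 405,
        body: { msg: "use POST" },
      });
      return;
    }
    this.http.respond({
      status: 200,
      body: { msg: "hello world!" },
      headers: { "content-type": "application/json" },
    });
  },
};

// Hypothetical harness: stands in for the platform by recording respond() calls.
async function invoke(source, event) {
  const responses = [];
  const context = {
    http: { respond: (response) => responses.push(response) },
  };
  await source.run.call(context, event);
  return responses;
}

invoke(httpSource, { method: "GET", path: "/" }).then((responses) => {
  console.log(responses[0].status); // 405
});
invoke(httpSource, { method: "POST", path: "/" }).then((responses) => {
  console.log(responses[0].status); // 200
});
```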

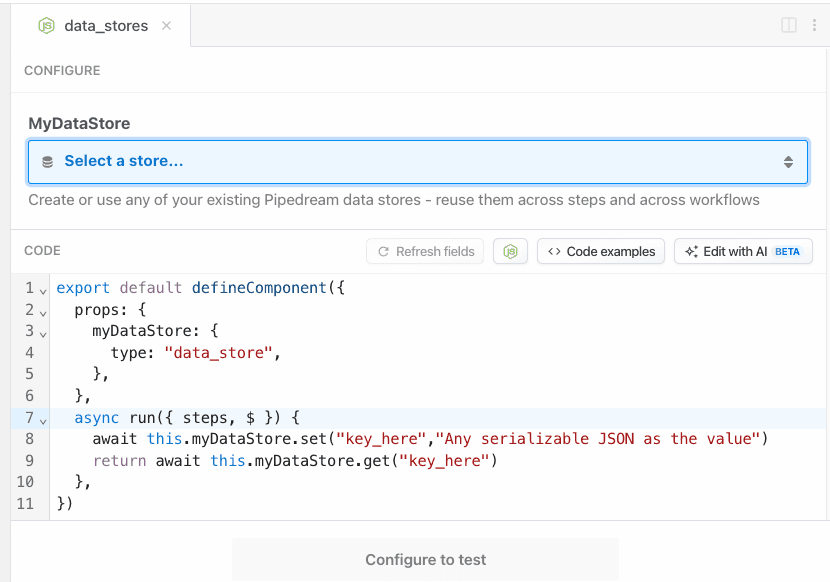

#### Service Props

| Service | Description                                                                                          |
| ------- | ---------------------------------------------------------------------------------------------------- |
| *DB*    | Provides access to a simple, component-specific key-value store to maintain state across executions. |

##### DB

**Definition**

```javascript
props: {
  myPropName: "$.service.db",
}
```

**Usage**

| Code                                | Description                                                                                  | Read Scope                            | Write Scope                            |
| ----------------------------------- | -------------------------------------------------------------------------------------------- | ------------------------------------- | -------------------------------------- |
| `this.myPropName.get('key')`        | Method to get a previously set value for a key. Returns `undefined` if a key does not exist. | `run()` `hooks` `methods`             | Use the `set()` method to write values |
| `this.myPropName.set('key', value)` | Method to set a value for a key. Values must be JSON-serializable data.                      | Use the `get()` method to read values | `run()` `hooks` `methods`              |
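
Following is a minimal sketch of the `get()` / `set()` pattern for keeping state across executions. It does not use the real service: `createFakeDb` is a hypothetical `Map`-backed stand-in that exposes only the two methods from the table, and `recordRun` is an illustrative function that a component could call with `this.myPropName`.

```javascript
// Hypothetical stand-in for $.service.db, backed by a Map.
function createFakeDb() {
  const store = new Map();
  return {
    // Returns undefined when the key has never been set.
    get(key) {
      return store.get(key);
    },
    // Round-trips through JSON to mimic the JSON-serializable requirement.
    set(key, value) {
      store.set(key, JSON.parse(JSON.stringify(value)));
    },
  };
}

// Increments a counter stored under "runCount" and returns the new value.
function recordRun(db) {
  const runCount = (db.get("runCount") ?? 0) + 1;
  db.set("runCount", runCount);
  return runCount;
}

const db = createFakeDb();
console.log(recordRun(db)); // 1
console.log(recordRun(db)); // 2
console.log(db.get("missing")); // undefined
```

The `?? 0` fallback handles the first execution, when `get()` returns `undefined` because nothing has been stored yet.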

#### App Props

App props are normally defined in an [app file](/docs/components/contributing/guidelines/#app-files), separate from individual components. See [the `components/` directory of the pipedream GitHub repo](https://github.com/PipedreamHQ/pipedream/tree/master/components) for example app files.

**Definition**

```javascript
props: {
  myPropName: {
    type: "app",
    app: "",
    propDefinitions: {},
    methods: {},
  },
},
```

| Property          | Type     | Required? | Description |
| ----------------- | -------- | --------- | ----------- |
| `type`            | `string` | required  | Value must be `app` |
| `app`             | `string` | required  | Value must be set to the name slug for an app registered on Pipedream. [App files](/docs/components/contributing/guidelines/#app-files) are programmatically generated for all integrated apps on Pipedream. To find your app’s slug, visit the `components` directory of [the Pipedream GitHub repo](https://github.com/PipedreamHQ/pipedream/tree/master/components), find the app file (the file that ends with `.app.mjs`), and find the `app` property at the root of that module. If you don’t see an app listed, please [open an issue here](https://github.com/PipedreamHQ/pipedream/issues/new?assignees=\&labels=app%2C+enhancement\&template=app---service-integration.md\&title=%5BAPP%5D). |
| `propDefinitions` | `object` | optional  | An object that contains objects with predefined user input props. See the section on User Input Props above to learn about the shapes that can be defined and how to reference them in components using the `propDefinition` property |
| `methods`         | `object` | optional  | Define app-specific methods. Methods can be referenced within the app object context via `this` (e.g., `this.methodName()`) and within a component via `this.myAppPropName` (e.g., `this.myAppPropName.methodName()`). |

**Usage**

| Code                              | Description                                                                                      | Read Scope                                      | Write Scope |
| --------------------------------- | ------------------------------------------------------------------------------------------------ | ----------------------------------------------- | ----------- |
| `this.$auth`                      | Provides access to OAuth tokens and API keys for Pipedream managed auth                          | **App Object:** `methods`                       | n/a         |
| `this.myAppPropName.$auth`        | Provides access to OAuth tokens and API keys for Pipedream managed auth                          | **Parent Component:** `run()` `hooks` `methods` | n/a         |
| `this.methodName()`               | Execute a common method defined for an app within the app definition (e.g., from another method) | **App Object:** `methods`                       | n/a         |
| `this.myAppPropName.methodName()` | Execute a common method defined for an app from a component that includes the app as a prop      | **Parent Component:** `run()` `hooks` `methods` | n/a         |
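
The four access patterns in the table can be sketched with a made-up app. Everything named here is an assumption for illustration: the `example_app` slug, the `authHeaders` and `describeRequest` methods, the `https://api.example.com` URL, the `oauth_access_token` field on `$auth`, and the `bindApp` harness, which only approximates the object a component sees as `this.myAppPropName`.

```javascript
// Hypothetical app definition.
const exampleApp = {
  type: "app",
  app: "example_app",
  propDefinitions: {},
  methods: {
    // App Object scope: reads credentials through this.$auth.
    authHeaders() {
      return { Authorization: `Bearer ${this.$auth.oauth_access_token}` };
    },
    // App Object scope: calls another app method through this.methodName().
    describeRequest(path) {
      return {
        url: `https://api.example.com${path}`,
        headers: this.authHeaders(),
      };
    },
  },
};

// A component that includes the app as a prop.
const component = {
  props: {
    myAppPropName: exampleApp,
  },
  run() {
    // Parent Component scope: calls the app method through the prop.
    return this.myAppPropName.describeRequest("/items");
  },
};

// Hypothetical harness: combines credentials and methods into one object.
function bindApp(app, auth) {
  return { $auth: auth, ...app.methods };
}

const boundApp = bindApp(exampleApp, { oauth_access_token: "test-token" });
const request = component.run.call({ myAppPropName: boundApp });
console.log(request.url); // https://api.example.com/items
console.log(request.headers.Authorization); // Bearer test-token
```

Keeping request-building logic in app `methods` lets every component that includes the app reuse it without handling credentials directly.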

To load dynamic props, the header prop must have the `reloadProps` field set to `true`:

```javascript

hasHeaders: {

type: "string",

label: "Does the first row of the sheet have headers?",

description: "If the first row of your document has headers we'll retrieve them to make it easy to enter the value for each column.",

options: [

"Yes",

"No",

],

reloadProps: true,

},

```

When a user chooses a value for this prop, Pipedream runs the `additionalProps` component method to render props:

```javascript

async additionalProps() {

const sheetId = this.sheetId?.value || this.sheetId;

const props = {};

if (this.hasHeaders === "Yes") {

const { values } = await this.googleSheets.getSpreadsheetValues(sheetId, `${this.sheetName}!1:1`);

if (!values[0]?.length) {

throw new ConfigurationError("Cound not find a header row. Please either add headers and click \"Refresh fields\" or adjust the action configuration to continue.");

}

for (let i = 0; i < values[0]?.length; i++) {

props[`col_${i.toString().padStart(4, "0")}`] = {

type: "string",

label: values[0][i],

optional: true,

};

}

} else if (this.hasHeaders === "No") {

props.myColumnData = {

type: "string[]",

label: "Values",

description: "Provide a value for each cell of the row. Google Sheets accepts strings, numbers and boolean values for each cell. To set a cell to an empty value, pass an empty string.",

};

}

return props;

},

```

The signature of this function is:

```javascript

async additionalProps(previousPropDefs)

```

where `previousPropDefs` are the full set of props (props merged with the previous `additionalProps`). When the function is executed, `this` is bound similar to when the `run` function is called, where you can access the values of the props as currently configured, and call any `methods`. The return value of `additionalProps` will replace any previous call, and that return value will be merged with props to define the final set of props.

Following is an example that demonstrates how to use `additionalProps` to dynamically change a prop’s `disabled` and `hidden` properties:

```javascript

async additionalProps(previousPropDefs) {

if (this.myCondition === "Yes") {

previousPropDefs.myPropName.disabled = true;

previousPropDefs.myPropName.hidden = true;

} else {

previousPropDefs.myPropName.disabled = false;

previousPropDefs.myPropName.hidden = false;

}

return previousPropDefs;

},

```

Dynamic props can have any one of the following prop types:

* `app`

* `boolean`

* `integer`

* `string`

* `object`

* `any`

* `$.interface.http`

* `$.interface.timer`

* `data_store`

* `http_request`

#### Interface Props

Interface props are infrastructure abstractions provided by the Pipedream platform. They declare how a source is invoked — via HTTP request, run on a schedule, etc. — and therefore define the shape of the events it processes.

| Interface Type | Description |

| ------------------------------------------------- | --------------------------------------------------------------- |

| [Timer](/docs/components/contributing/api/#timer) | Invoke your source on an interval or based on a cron expression |

| [HTTP](/docs/components/contributing/api/#http) | Invoke your source on HTTP requests |

#### Timer

To use the timer interface, declare a prop whose value is the string `$.interface.timer`:

**Definition**

```javascript

props: {

myPropName: {

type: "$.interface.timer",

default: {},

},

}

```

| Property | Type | Required? | Description |

| --------- | -------- | --------- | ------------------------------------------------------------------------------------------------------------------------- |

| `type` | `string` | required | Must be set to `$.interface.timer` |

| `default` | `object` | optional | **Define a default interval** `{ intervalSeconds: 60, },` **Define a default cron expression** `{ cron: "0 0 * * *", },` |

**Usage**

| Code | Description | Read Scope | Write Scope |

| ----------------- | ------------------------------------------------------------------------------------------------------------------------------------------ | ------------------------- | ------------------------------------------------------------------------------------------- |

| `this.myPropName` | Returns the type of interface configured (e.g., `{ type: '$.interface.timer' }`) | `run()` `hooks` `methods` | n/a (interface props may only be modified on component deploy or update via UI, CLI or API) |

| `event` | Returns an object with the execution timestamp and interface configuration (e.g., `{ "timestamp": 1593937896, "interval_seconds": 3600 }`) | `run(event)` | n/a (interface props may only be modified on source deploy or update via UI, CLI or API) |

**Example**

Following is a basic example of a source that is triggered by a `$.interface.timer` and has default defined as a cron expression.

```javascript

export default {

name: "Cron Example",

version: "0.1",

props: {

timer: {

type: "$.interface.timer",

default: {

cron: "0 0 * * *", // Run job once a day

},

},

},

async run() {

console.log("hello world!");

},

};

```

Following is an example source that’s triggered by a `$.interface.timer` and has a `default` interval defined.

```javascript

export default {

name: "Interval Example",

version: "0.1",

props: {

timer: {

type: "$.interface.timer",

default: {

intervalSeconds: 60 * 60 * 24, // Run job once a day

},

},

},

async run() {

console.log("hello world!");

},

};

```

##### HTTP

To use the HTTP interface, declare a prop whose value is the string `$.interface.http`:

```javascript

props: {

myPropName: {

type: "$.interface.http",

customResponse: true, // optional: defaults to false

},

}

```

**Definition**

| Property | Type | Required? | Description |

| --------- | -------- | --------- | ------------------------------------------------------------------------------------------------------------ |

| `type` | `string` | required | Must be set to `$.interface.http` |

| `respond` | `method` | required | The HTTP interface exposes a `respond()` method that lets your component issue HTTP responses to the client. |

**Usage**

| Code | Description | Read Scope | Write Scope |
| --------------------------- | --------------------------------------------------------------------------------------------------------------------------------------- | ------------------------- | ----------------------------------------------------------------------------------------------------------------------------- |
| `this.myPropName` | Returns an object with the unique endpoint URL generated by Pipedream (e.g., `{ endpoint: 'https://abcde.m.pipedream.net' }`) | `run()` `hooks` `methods` | n/a (interface props may only be modified on source deploy or update via UI, CLI or API) |
| `event` | Returns an object representing the HTTP request (e.g., `{ method: 'POST', path: '/', query: {}, headers: {}, bodyRaw: '', body: {}, }`) | `run(event)` | The shape of `event` corresponds with the HTTP request you make to the endpoint generated by Pipedream for this interface |
| `this.myPropName.respond()` | Returns an HTTP response to the client (e.g., `this.http.respond({status: 200})`). | n/a | `run()` |

###### Responding to HTTP requests

The HTTP interface exposes a `respond()` method that lets your source issue HTTP responses. You may run `this.http.respond()` to respond to the client from the `run()` method of a source. In this case you should also pass the `customResponse: true` parameter to the prop.

| Property  | Type                       | Required? | Description                                                                                                                    |
| --------- | -------------------------- | --------- | ------------------------------------------------------------------------------------------------------------------------------ |
| `status`  | `integer`                  | required  | An integer representing the HTTP status code. Return `200` to indicate success. Standard status codes range from `100` - `599` |
| `headers` | `object`                   | optional  | Return custom key-value pairs in the HTTP response                                                                             |
| `body`    | `string` `object` `buffer` | optional  | Return a custom body in the HTTP response. This can be any string, object, or Buffer.                                          |

###### HTTP Event Shape

Following is the shape of the event passed to the `run()` method of your source:

```javascript
{
  method: 'POST',
  path: '/',
  query: {},
  headers: {},
  bodyRaw: '',
  body: {},
}
```

**Example**

Following is an example source that’s triggered by `$.interface.http` and returns `{ 'msg': 'hello world!' }` in the HTTP response. On deploy, Pipedream will generate a unique URL for this source:

```javascript
export default {
  name: "HTTP Example",
  version: "0.0.1",
  props: {
    http: {
      type: "$.interface.http",
      customResponse: true,
    },
  },
  async run(event) {
    this.http.respond({
      status: 200,
      body: {
        msg: "hello world!",
      },
      headers: {
        "content-type": "application/json",
      },
    });
    console.log(event);
  },
};
```
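
Building on the event shape and the `respond()` parameters described above, the following is a minimal sketch of a source that inspects `event.method` before responding. The 405 handling and the response bodies are illustrative choices, not behavior prescribed by Pipedream.

```javascript
// Sketch of a source that validates the request before responding.
// In a component file, this object would be the module's default export.
const httpSource = {
  name: "HTTP Method Check Example",
  version: "0.0.1",
  props: {
    http: {
      type: "$.interface.http",
      customResponse: true, // lets the source issue its own response
    },
  },
  async run(event) {
    if (event.method !== "POST") {
      // Reject anything other than POST with a 405 status
      this.http.respond({
        status: 405,
        headers: { allow: "POST" },
        body: { error: `Method ${event.method} not allowed` },
      });
      return;
    }
    // Echo the parsed body back to the client
    this.http.respond({
      status: 200,
      body: { received: event.body },
    });
  },
};
```

Because `respond()` is called on `this.http`, the prop is named `http` here, matching the example above.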

#### Service Props

| Service | Description                                                                                          |
| ------- | ---------------------------------------------------------------------------------------------------- |
| *DB*    | Provides access to a simple, component-specific key-value store to maintain state across executions. |

##### DB

**Definition**

```javascript
props: {
  myPropName: "$.service.db",
}
```

**Usage**

| Code                                | Description                                                                                  | Read Scope                            | Write Scope                            |
| ----------------------------------- | -------------------------------------------------------------------------------------------- | ------------------------------------- | -------------------------------------- |
| `this.myPropName.get('key')`        | Method to get a previously set value for a key. Returns `undefined` if a key does not exist. | `run()` `hooks` `methods`             | Use the `set()` method to write values |
| `this.myPropName.set('key', value)` | Method to set a value for a key. Values must be JSON-serializable data.                      | Use the `get()` method to read values | `run()` `hooks` `methods`              |
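
To illustrate the `get()` and `set()` calls in the table, here is a small sketch of a source that counts its own executions. The key name `runs` and the component name are made up for the example.

```javascript
// Sketch: persists a run counter between executions using the DB service.
// In a component file, this object would be the module's default export.
const counterSource = {
  name: "DB Counter Example",
  version: "0.0.1",
  props: {
    db: "$.service.db",
    timer: {
      type: "$.interface.timer",
      default: { intervalSeconds: 60 },
    },
  },
  async run() {
    // get() returns undefined on the first execution, so fall back to 0
    const runs = (this.db.get("runs") ?? 0) + 1;
    // Values must be JSON-serializable; a number qualifies
    this.db.set("runs", runs);
    console.log(`This source has run ${runs} time(s)`);
  },
};
```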

#### App Props

App props are normally defined in an [app file](/docs/components/contributing/guidelines/#app-files), separate from individual components. See [the `components/` directory of the pipedream GitHub repo](https://github.com/PipedreamHQ/pipedream/tree/master/components) for example app files.

**Definition**

```javascript
props: {
  myPropName: {
    type: "app",
    app: "",
    propDefinitions: {},
    methods: {},
  },
},
```

| Property | Type | Required? | Description |
| ----------------- | -------- | --------- | ----------- |
| `type` | `string` | required | Value must be `app` |
| `app` | `string` | required | Value must be set to the name slug for an app registered on Pipedream. [App files](/docs/components/contributing/guidelines/#app-files) are programmatically generated for all integrated apps on Pipedream. To find your app’s slug, visit the `components` directory of [the Pipedream GitHub repo](https://github.com/PipedreamHQ/pipedream/tree/master/components), find the app file (the file that ends with `.app.mjs`), and find the `app` property at the root of that module. If you don’t see an app listed, please [open an issue here](https://github.com/PipedreamHQ/pipedream/issues/new?assignees=\&labels=app%2C+enhancement\&template=app---service-integration.md\&title=%5BAPP%5D). |
| `propDefinitions` | `object` | optional | An object that contains objects with predefined user input props. See the section on User Input Props above to learn about the shapes that can be defined and how to reference them in components using the `propDefinition` property |
| `methods` | `object` | optional | Define app-specific methods. Methods can be referenced within the app object context via `this` (e.g., `this.methodName()`) and within a component via `this.myAppPropName` (e.g., `this.myAppPropName.methodName()`). |

**Usage**

| Code                              | Description                                                                                      | Read Scope                                      | Write Scope |
| --------------------------------- | ------------------------------------------------------------------------------------------------ | ----------------------------------------------- | ----------- |
| `this.$auth`                      | Provides access to OAuth tokens and API keys for Pipedream managed auth                          | **App Object:** `methods`                       | n/a         |
| `this.myAppPropName.$auth`        | Provides access to OAuth tokens and API keys for Pipedream managed auth                          | **Parent Component:** `run()` `hooks` `methods` | n/a         |
| `this.methodName()`               | Execute a common method defined for an app within the app definition (e.g., from another method) | **App Object:** `methods`                       | n/a         |
| `this.myAppPropName.methodName()` | Execute a common method defined for an app from a component that includes the app as a prop      | **Parent Component:** `run()` `hooks` `methods` | n/a         |
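
The relationship between an app object and a component that uses it can be sketched as follows. The slug `my_app`, the method name `authHeaders`, and the `oauth_access_token` field on `$auth` are assumptions for illustration; the actual fields on `$auth` depend on how the app authenticates.

```javascript
// Hypothetical app definition (what an `.app.mjs` file might export).
// The slug and the `oauth_access_token` field are assumptions.
const myApp = {
  type: "app",
  app: "my_app",
  propDefinitions: {},
  methods: {
    // Inside the app object, credentials are read from `this.$auth`
    authHeaders() {
      return { Authorization: `Bearer ${this.$auth.oauth_access_token}` };
    },
  },
};

// A component that includes the app as a prop named `myApp`
const listItemsAction = {
  name: "List Items",
  key: "my_app-list-items",
  version: "0.0.1",
  type: "action",
  props: { myApp },
  async run() {
    // From the component, app methods are reached through the prop name
    const headers = this.myApp.authHeaders();
    return Object.keys(headers);
  },
};
```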

#### Limits on props

When a user configures a prop with a value, it can hold at most {CONFIGURED_PROPS_SIZE_LIMIT} data. Consider this when accepting large input in these fields (such as a base64 string).

The {CONFIGURED_PROPS_SIZE_LIMIT} limit applies only to static values entered as raw text. In workflows, users can pass expressions (referencing data in a prior step). In that case the prop value is simply the text of the expression, for example `{{steps.nodejs.$return_value}}`, well below the limit. These expressions are evaluated at runtime, and the resulting values are subject to [different limits](/docs/workflows/limits/).

### Methods

You can define helper functions within the `methods` property of your component. You have access to these functions within the [`run` method](/docs/components/contributing/api/#run), or within other methods.

Methods can be accessed using `this` (e.g., `this.methodName()`).
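
A minimal sketch of the idea, using made-up method names (`capitalize`, `greet`): one method calls another through `this`, and `run()` calls the result.

```javascript
// Sketch: helper functions defined under `methods` and called via `this`.
// In a component file, this object would be the module's default export.
const methodsDemo = {
  name: "Methods Demo",
  key: "methods_demo",
  version: "0.0.1",
  type: "action",
  methods: {
    // Upper-cases the first letter of a word
    capitalize(word) {
      return word.charAt(0).toUpperCase() + word.slice(1);
    },
    // Methods can call other methods through `this`
    greet(name) {
      return `hello ${this.capitalize(name)}!`;
    },
  },
  async run() {
    return this.greet("world");
  },
};
```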

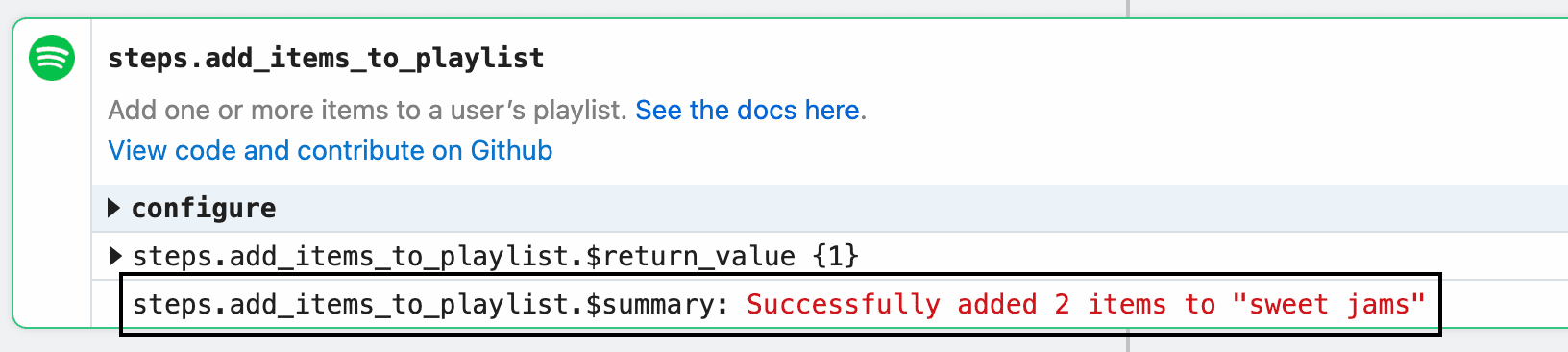

Example implementation:

```javascript
const data = [1, 2];
const playlistName = "Cool jams";
$.export(
  "$summary",
  `Successfully added ${data.length} ${
    data.length == 1 ? "item" : "items"
  } to "${playlistName}"`
);
```

##### `$.send`

`$.send` allows you to send data to [Pipedream destinations](/docs/workflows/data-management/destinations/).

**`$.send.http`**

[See the HTTP destination docs](/docs/workflows/data-management/destinations/http/#using-sendhttp-in-component-actions).

**`$.send.email`**

[See the Email destination docs](/docs/workflows/data-management/destinations/email/#using-sendemail-in-component-actions).

**`$.send.s3`**

[See the S3 destination docs](/docs/workflows/data-management/destinations/s3/#using-sends3-in-component-actions).

**`$.send.emit`**

[See the Emit destination docs](/docs/workflows/data-management/destinations/emit/#using-sendemit-in-component-actions).

**`$.send.sse`**

[See the SSE destination docs](/docs/workflows/data-management/destinations/sse/#using-sendsse-in-component-actions).

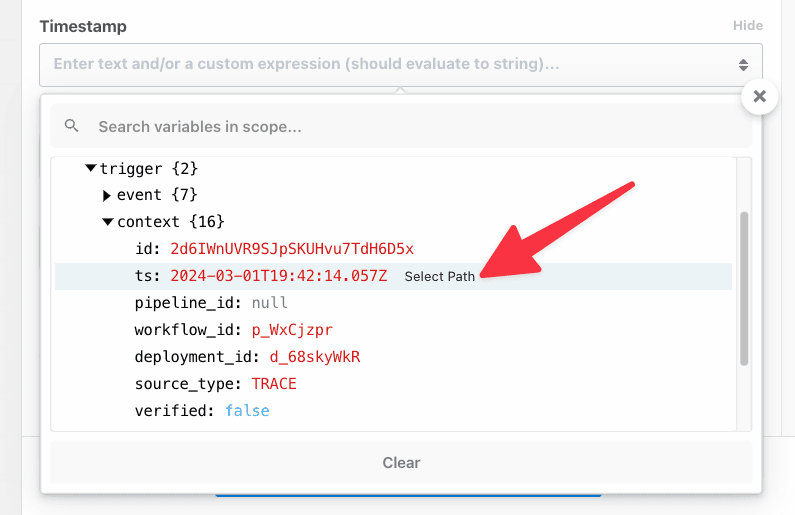

##### `$.context`

`$.context` exposes [the same properties as `steps.trigger.context`](/docs/workflows/building-workflows/triggers/#stepstriggercontext), and more. Action authors can use it to get context about the calling workflow and the execution.

All properties from [`steps.trigger.context`](/docs/workflows/building-workflows/triggers/#stepstriggercontext) are exposed, as well as:

| Property | Description |

| ---------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| `deadline` | An epoch millisecond timestamp marking the point when the workflow is configured to [timeout](/docs/workflows/limits/#time-per-execution). |

| `JIT` | Stands for “just in time” (environment). `true` if the user is testing the step, `false` if the step is running in production. |

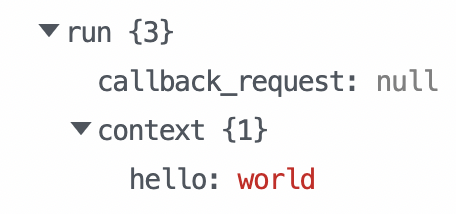

| `run` | An object containing metadata about the current run number. See [the docs on `$.flow.rerun`](/docs/workflows/building-workflows/triggers/#stepstriggercontext) for more detail. |
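
As one possible use of `$.context.deadline`, the sketch below computes how much time remains before the configured timeout and skips optional work when little is left. The helper name `msUntilDeadline` and the 5-second threshold are arbitrary choices for illustration.

```javascript
// Hypothetical helper: milliseconds left before the workflow times out.
// `$` is the object passed to an action's run({ $ }) method.
function msUntilDeadline($, now = Date.now()) {
  // `deadline` is an epoch millisecond timestamp, so plain subtraction works
  return Math.max(0, $.context.deadline - now);
}

// In a component file, this object would be the module's default export
const deadlineAwareAction = {
  name: "Deadline Aware Action",
  key: "deadline_aware_action",
  version: "0.0.1",
  type: "action",
  async run({ $ }) {
    const remaining = msUntilDeadline($);
    // Skip optional work when fewer than 5 seconds remain (arbitrary threshold)
    return { skipped: remaining < 5000, remaining };
  },
};
```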

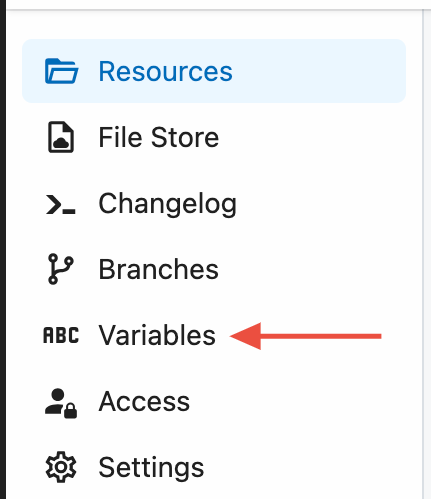

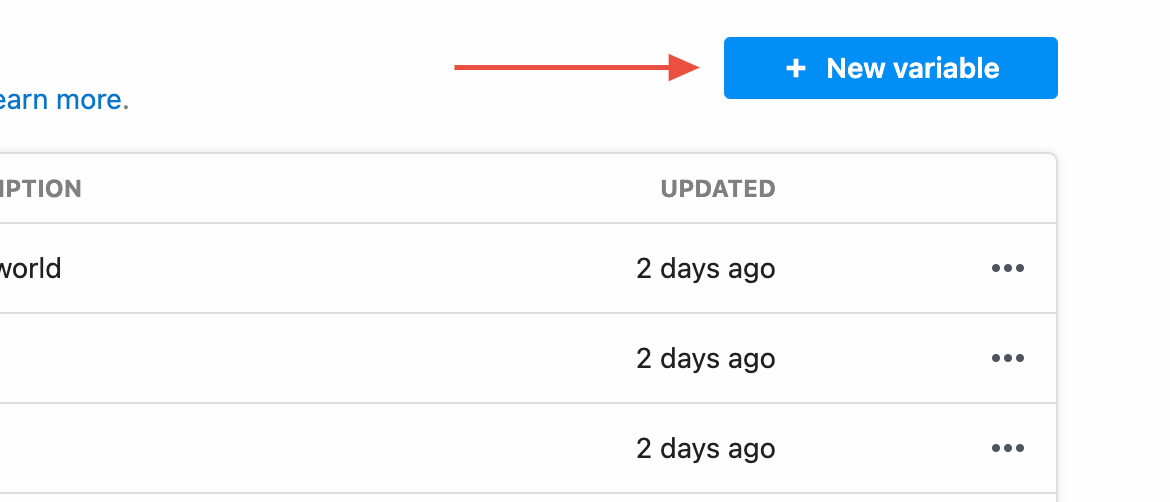

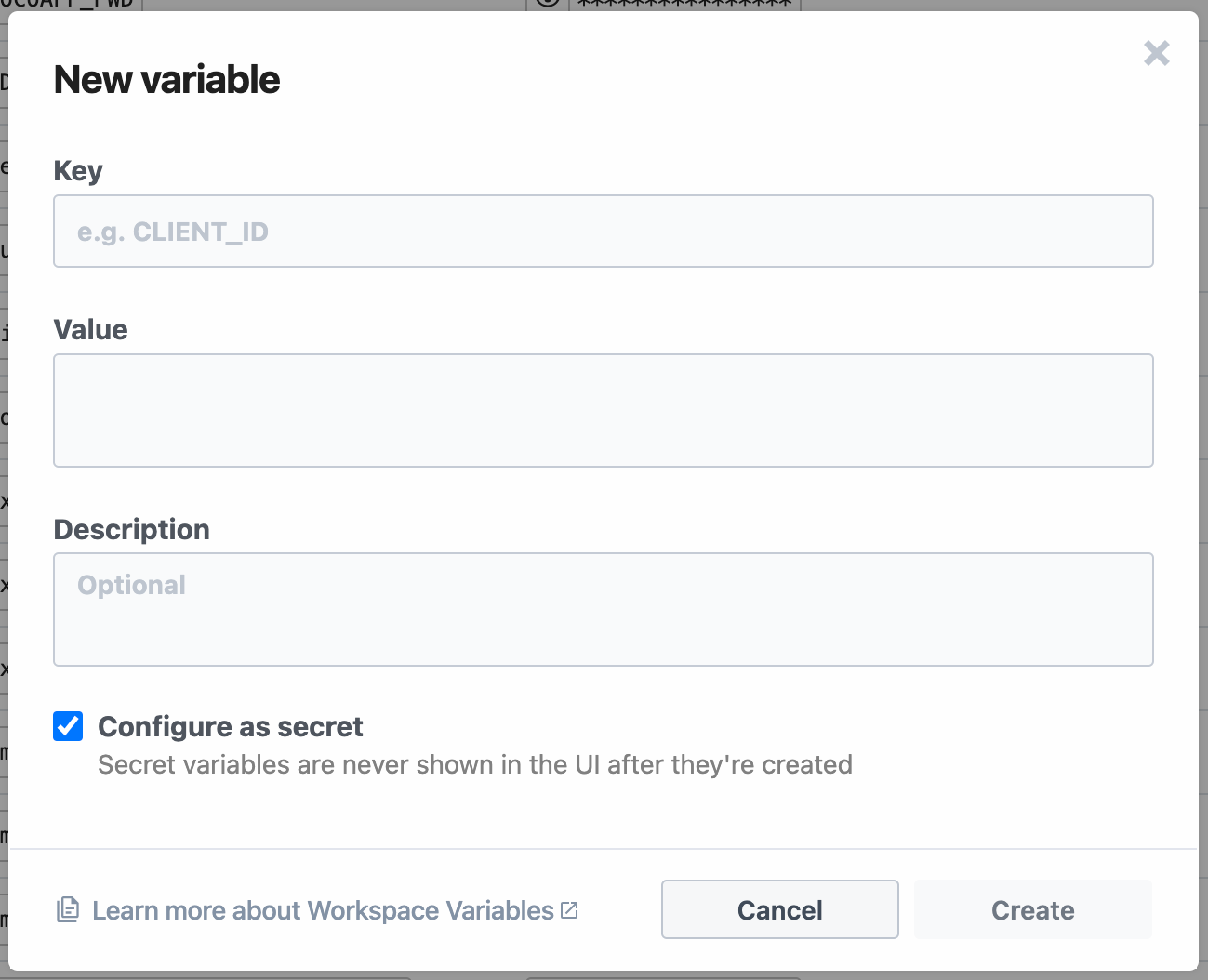

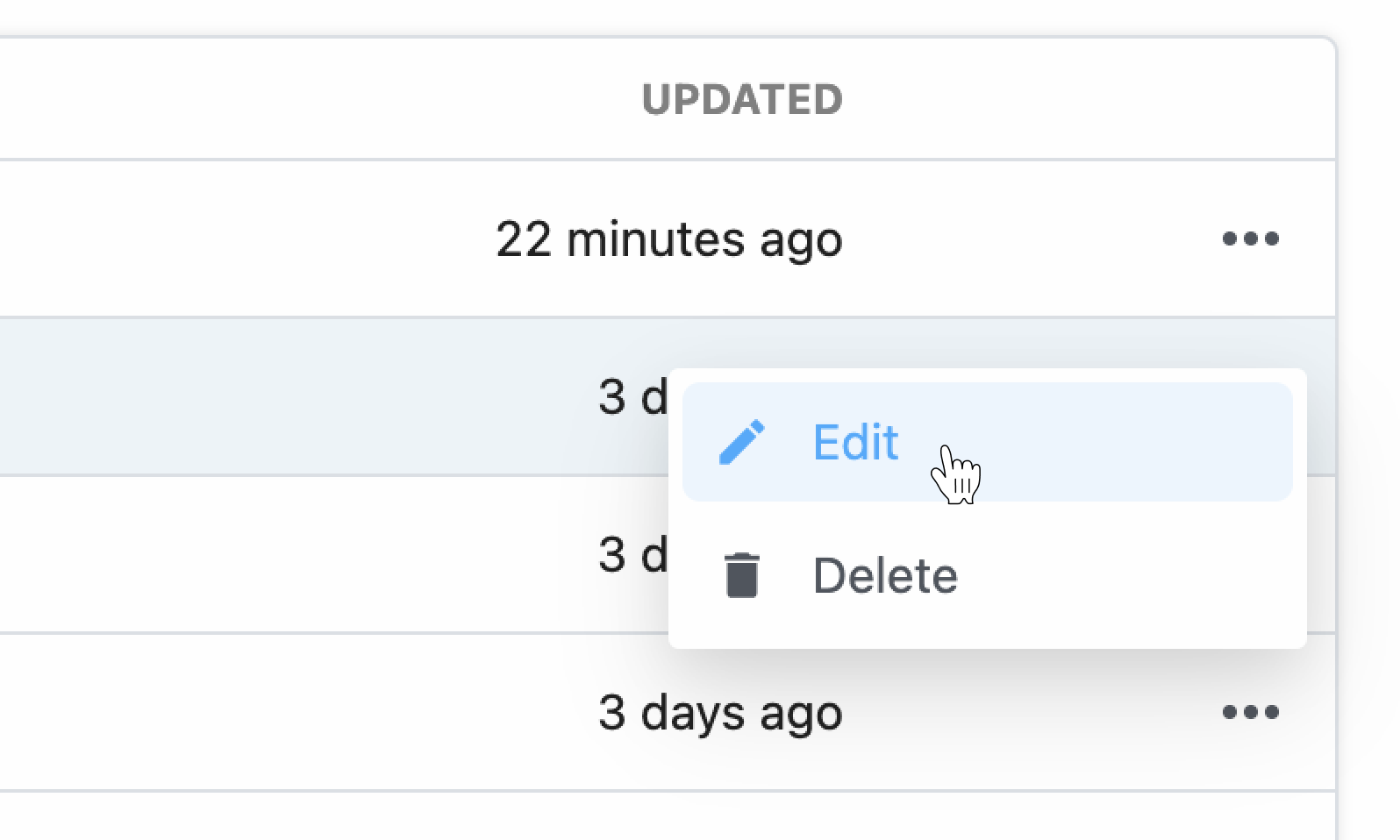

### Environment variables

[Environment variables](/docs/workflows/environment-variables/) are not accessible within sources or actions directly. Since components can be used by anyone, you cannot guarantee that a user will have a specific variable set in their environment.

In sources, you can use [`secret` props](/docs/components/contributing/api/#props) to reference sensitive data.

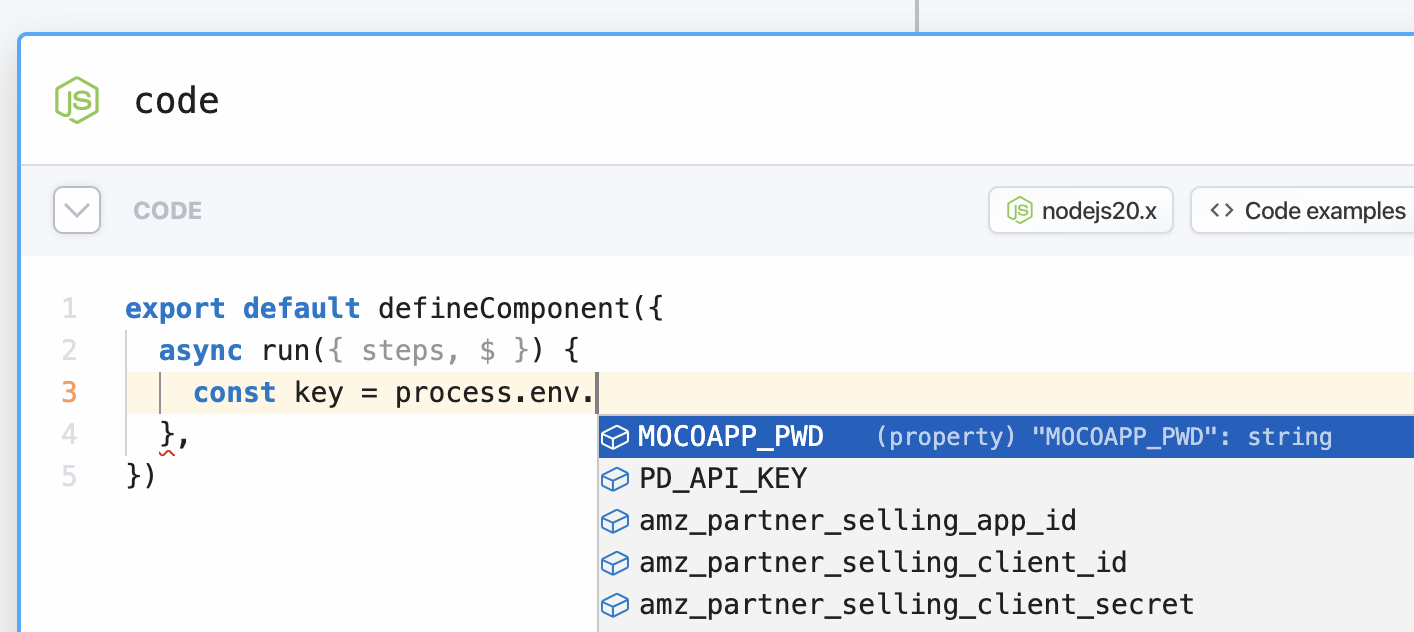

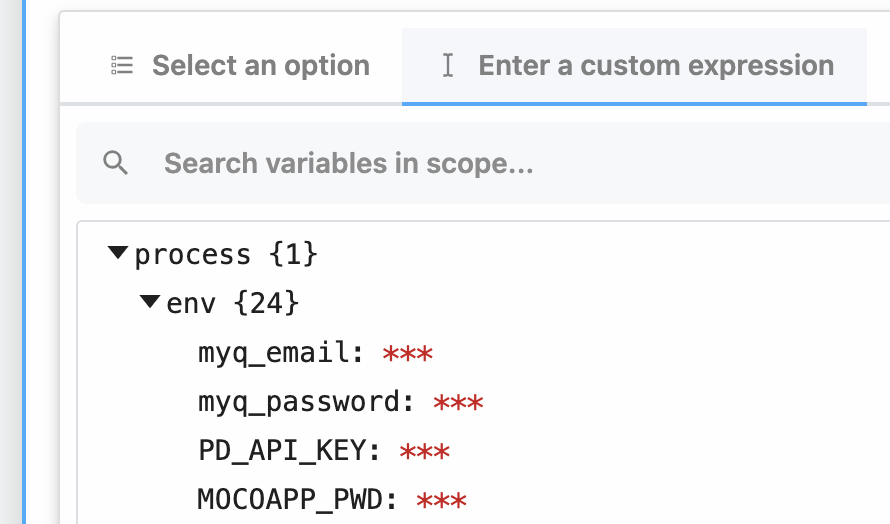

In actions, you’ll see a list of your environment variables in the object explorer when selecting a variable to pass to a step:

### Using npm packages

To use an npm package in a component, just import it. No `package.json` or `npm install` is required.

```javascript
import axios from "axios";
```

When you deploy a component, Pipedream downloads the latest versions of these packages and bundles them with your deployment.

Some packages, such as those that rely on large dependencies or on unbundled binaries, may not work on Pipedream. Please [reach out](https://pipedream.com/support) if you encounter a specific issue.

#### Referencing a specific version of a package

*This currently applies only to sources*.

If you’d like to use a *specific* version of a package in a source, you can add that version in the `require` string, for example: `require("axios@0.19.2")`. Moreover, you can pass the same version specifiers that npm and other tools allow to specify allowed [semantic version](https://semver.org/) upgrades. For example:

* To allow for future patch version upgrades, use `require("axios@~0.20.0")`

* To allow for patch and minor version upgrades, use `require("axios@^0.20.0")`

## Managing Components

Sources and actions are developed and deployed in different ways, given the different functions they serve in the product.

* [Managing Sources](/docs/components/contributing/api/#managing-sources)

* [Managing Actions](/docs/components/contributing/api/#managing-actions)

### Managing Sources

#### CLI - Development Mode

***

The easiest way to develop and test sources is with the `pd dev` command. `pd dev` deploys a local file, attaches it to a component, and automatically updates the component on each local save. To deploy a new component with `pd dev`, run:

```
pd dev
```

### Triggering Sources

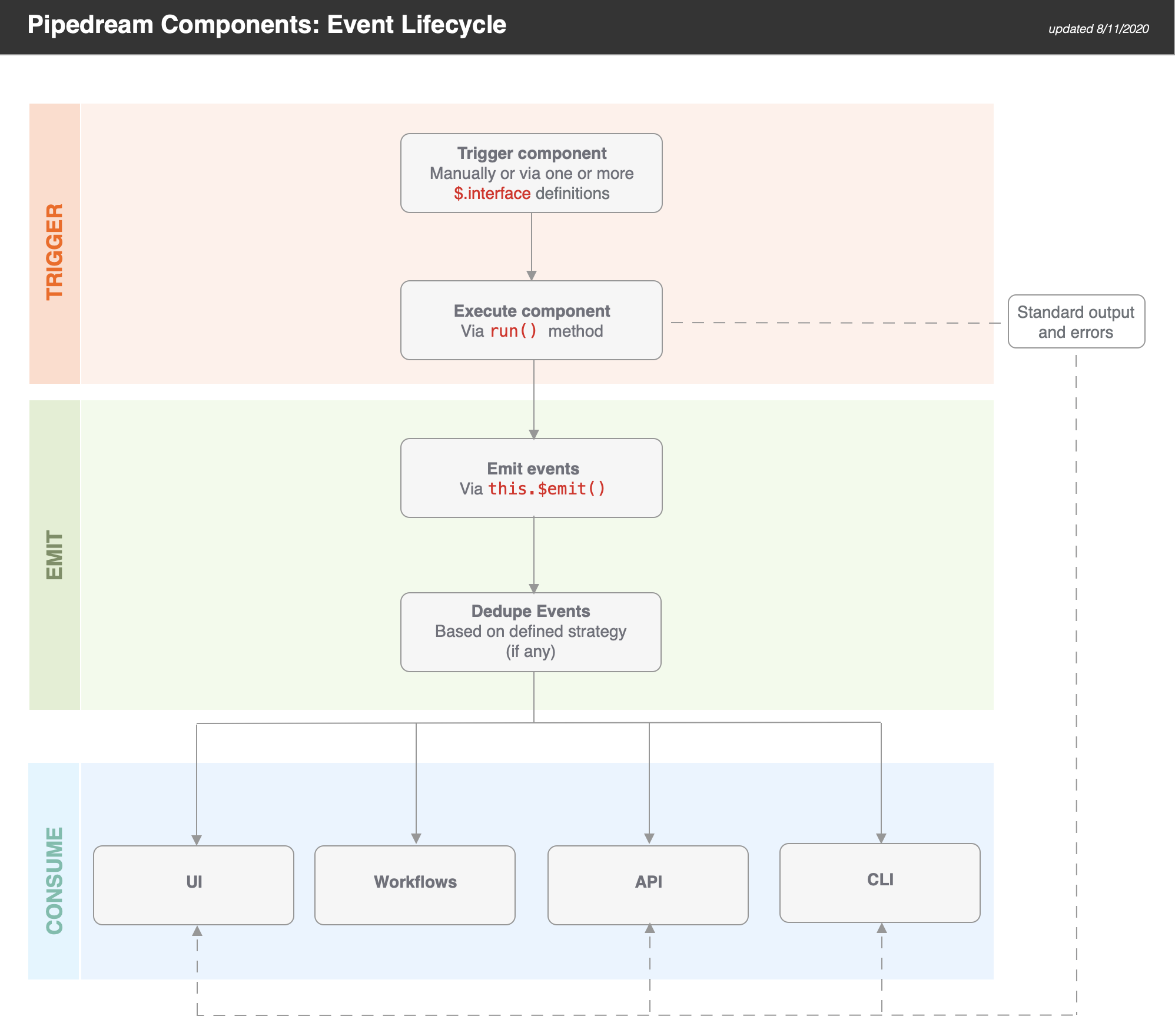

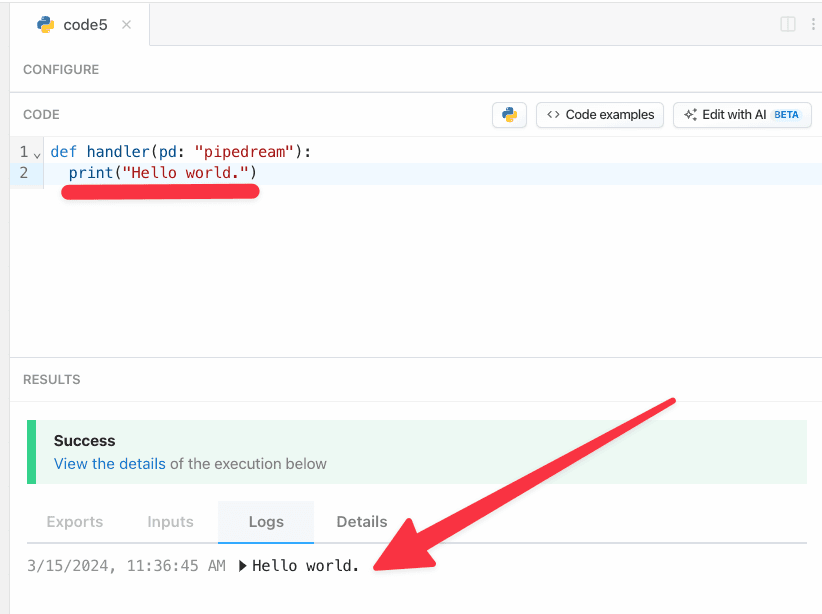

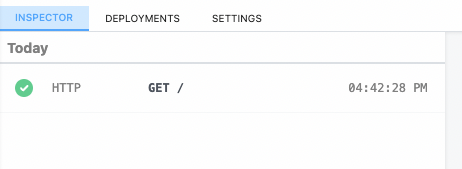

Sources are triggered when you manually run them (e.g., via the **RUN NOW** button in the UI) or when one of their [interfaces](/docs/components/contributing/api/#interface-props) is triggered. Pipedream sources currently support **HTTP** and **Timer** interfaces.

When a source is triggered, the `run()` method of the component is executed. Standard output and errors are surfaced in the **Logs** tab.

### Emitting Events from Sources

Sources can emit events via `this.$emit()`. If you define a [dedupe strategy](/docs/components/contributing/api/#dedupe-strategies) for a source, Pipedream automatically dedupes the events you emit.

> **TIP:** if you want to use a dedupe strategy, be sure to pass an `id` for each event. Pipedream uses this value for deduping purposes.
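
A minimal sketch of a source that emits events with an `id` so the `unique` dedupe strategy can do its work. `fetchItems` is a hypothetical stand-in for an API call, and the item fields are made up.

```javascript
// Sketch: emitting events with metadata that supports deduping.
// In a component file, this object would be the module's default export.
const newItemSource = {
  name: "New Item",
  version: "0.0.1",
  dedupe: "unique", // events with an `id` already seen are not emitted again
  props: {
    timer: {
      type: "$.interface.timer",
      default: { intervalSeconds: 15 * 60 },
    },
  },
  methods: {
    // Hypothetical stand-in for an API call that lists items
    fetchItems() {
      return [
        { id: "a1", title: "First item", createdAt: 1593937896000 },
        { id: "b2", title: "Second item", createdAt: 1593937956000 },
      ];
    },
  },
  async run() {
    for (const item of this.fetchItems()) {
      this.$emit(item, {
        id: item.id, // used by Pipedream for deduping
        summary: item.title,
        ts: item.createdAt,
      });
    }
  },
};
```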

### Consuming Events from Sources

Pipedream makes it easy to consume events via:

* The UI

* Workflows

* APIs

* CLI

#### UI

When you navigate to your source [in the UI](https://pipedream.com/sources), you’ll be able to select and inspect the most recent 100 events (i.e., an event bin). For example, if you send requests to a simple HTTP source, you will be able to inspect the events (i.e., a request bin).

#### Workflows

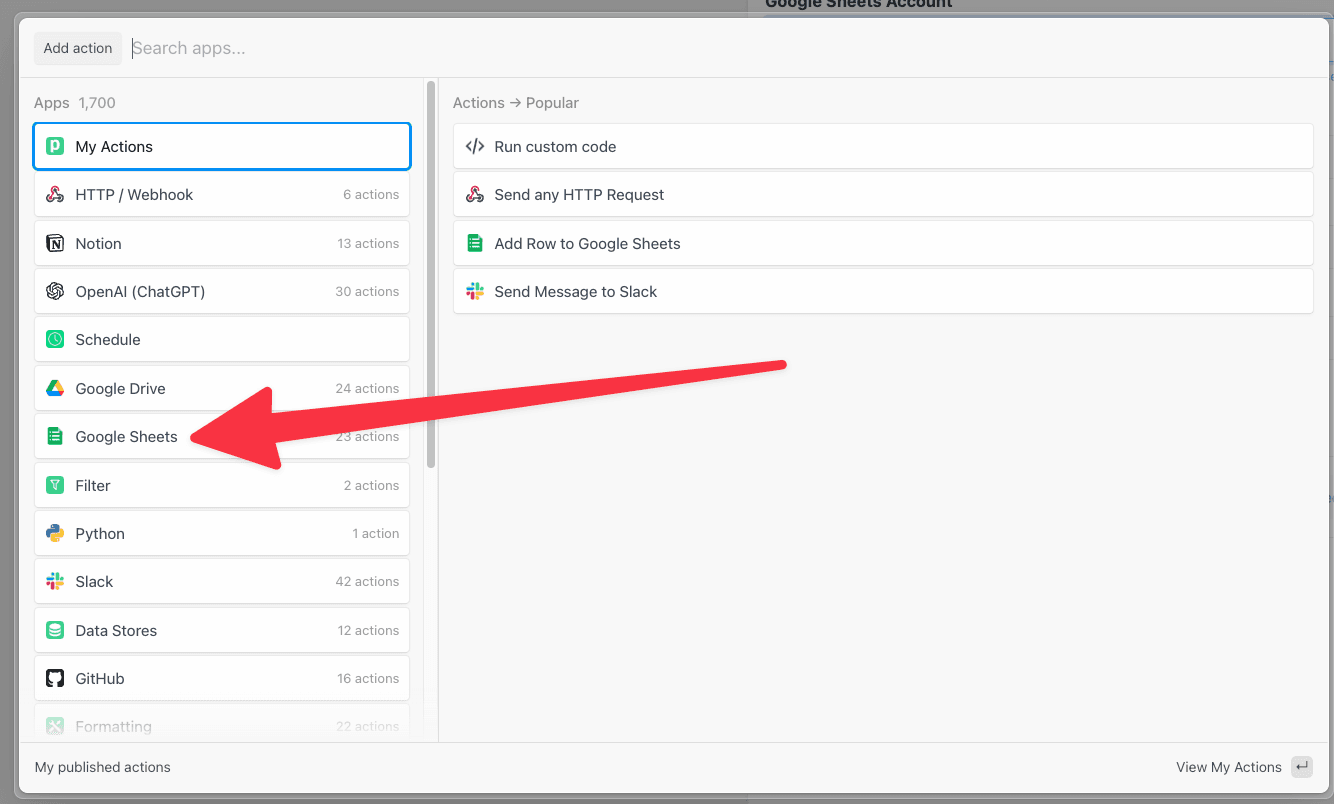

[Trigger hosted Node.js workflows](/docs/workflows/building-workflows/) on each event. Integrate with 2,700+ apps including Google Sheets, Discord, Slack, AWS, and more!

#### API

Events can be retrieved using the [REST API](/docs/rest-api/) or [SSE stream tied to your component](/docs/workflows/data-management/destinations/sse/). This makes it easy to retrieve data processed by your component from another app. Typically, you’ll want to use the [REST API](/docs/rest-api/) to retrieve events in batch, and connect to the [SSE stream](/docs/workflows/data-management/destinations/sse/) to process them in real time.

#### CLI

Use the `pd events` command to retrieve the last 10 events via the CLI:

```

pd events -n 10

### Triggering Sources

Sources are triggered when you manually run them (e.g., via the **RUN NOW** button in the UI) or when one of their [interfaces](/docs/components/contributing/api/#interface-props) is triggered. Pipedream sources currently support **HTTP** and **Timer** interfaces.

When a source is triggered, the `run()` method of the component is executed. Standard output and errors are surfaced in the **Logs** tab.

### Emitting Events from Sources

Sources can emit events via `this.$emit()`. If you define a [dedupe strategy](/docs/components/contributing/api/#dedupe-strategies) for a source, Pipedream automatically dedupes the events you emit.

> **TIP:** if you want to use a dedupe strategy, be sure to pass an `id` for each event. Pipedream uses this value for deduping purposes.

### Consuming Events from Sources

Pipedream makes it easy to consume events via:

* The UI

* Workflows

* APIs

* CLI

#### UI

When you navigate to your source [in the UI](https://pipedream.com/sources), you’ll be able to select and inspect the most recent 100 events (i.e., an event bin). For example, if you send requests to a simple HTTP source, you will be able to inspect the events (i.e., a request bin).

#### Workflows

[Trigger hosted Node.js workflows](/docs/workflows/building-workflows/) on each event. Integrate with 2,700+ apps including Google Sheets, Discord, Slack, AWS, and more!

#### API

Events can be retrieved using the [REST API](/docs/rest-api/) or [SSE stream tied to your component](/docs/workflows/data-management/destinations/sse/). This makes it easy to retrieve data processed by your component from another app. Typically, you’ll want to use the [REST API](/docs/rest-api/) to retrieve events in batch, and connect to the [SSE stream](/docs/workflows/data-management/destinations/sse/) to process them in real time.

#### CLI

Use the `pd events` command to retrieve the last 10 events via the CLI:

```

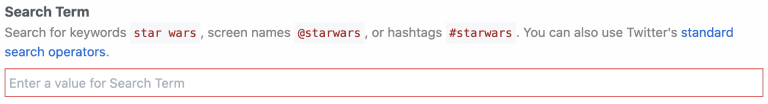

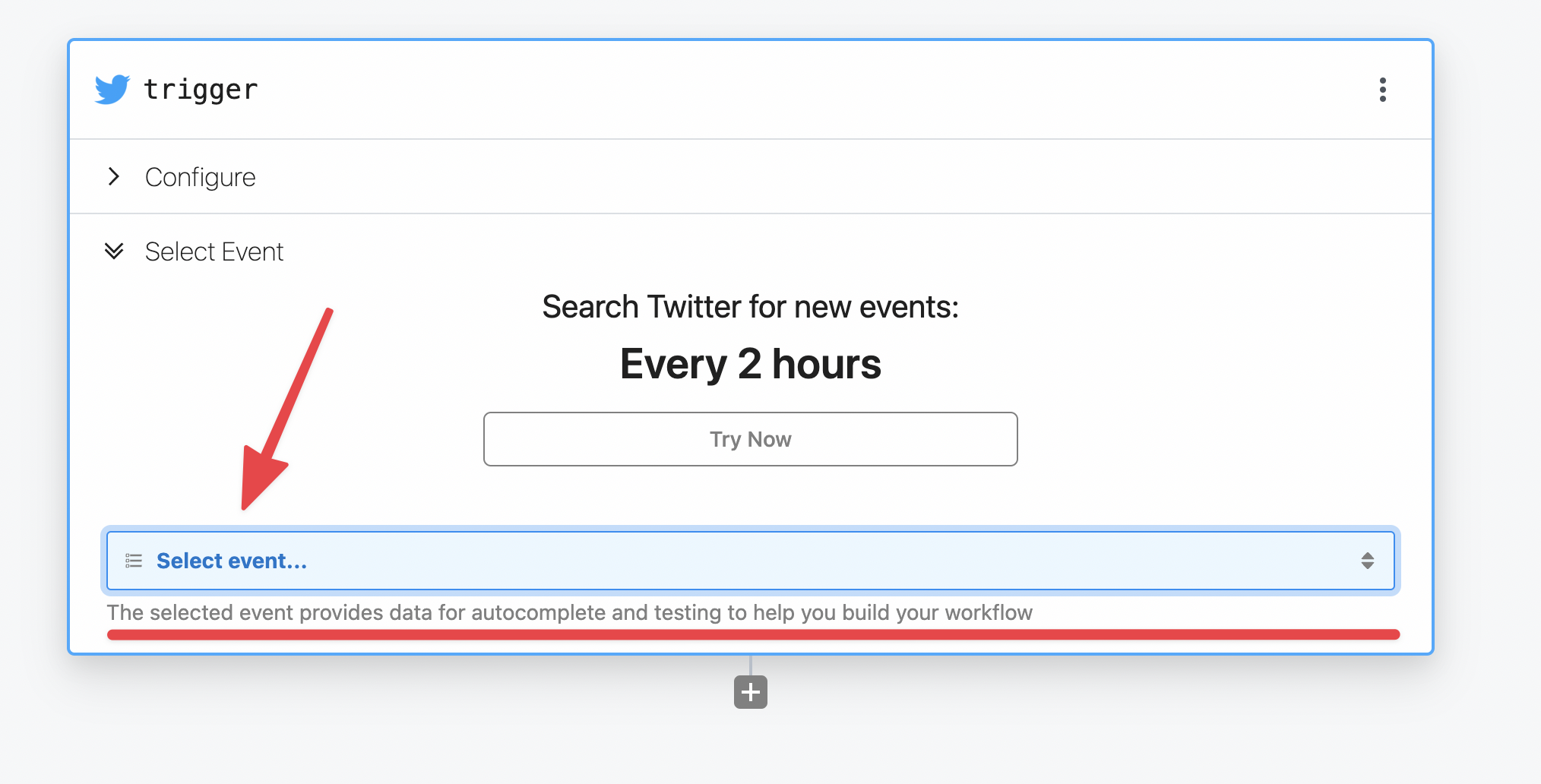

pd events -n 10  * The “Search Term” prop for Twitter includes a description that helps the user understand what values they can enter, with specific values highlighted using backticks and links to external content.

* The “Search Term” prop for Twitter includes a description that helps the user understand what values they can enter, with specific values highlighted using backticks and links to external content.

### Optional vs Required Props

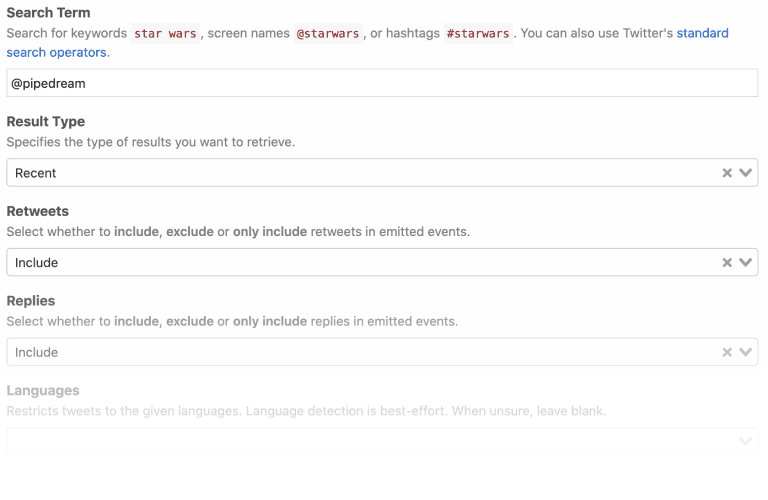

Use optional [props](/docs/components/contributing/api/#user-input-props) whenever possible to minimize the input fields required to use a component.

For example, the Twitter search mentions source only requires that a user connect their account and enter a search term. The remaining fields are optional for users who want to filter the results, but they do not require any action to activate the source:

### Default Values

Provide [default values](/docs/components/contributing/api/#user-input-props) whenever possible. NOTE: the best default for a source doesn’t always map to the default recommended by the app. For example, Twitter defaults search results to an algorithm that balances recency and popularity. However, the best default for the use case on Pipedream is recency.
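
The two guidelines above (optional props and sensible defaults) might look like this in a props definition. The prop names and option values are illustrative only and are not taken from the actual Twitter component; `requiredProps` is a hypothetical helper for checking how many fields a user must fill in.

```javascript
// Illustrative props: one required field, the rest optional with defaults
const searchProps = {
  q: {
    type: "string",
    label: "Search Term",
    description: "Keywords to search for, e.g. `pipedream`",
  },
  resultType: {
    type: "string",
    label: "Result Type",
    description: "Which results to return",
    options: ["recent", "popular", "mixed"],
    optional: true,
    default: "recent", // recency suits event sources better than popularity
  },
  lang: {
    type: "string",
    label: "Language",
    optional: true,
  },
};

// Hypothetical helper: lists the props a user must fill in
function requiredProps(props) {
  return Object.keys(props).filter((name) => !props[name].optional);
}
```

Calling `requiredProps(searchProps)` on this definition returns only `q`, which is a quick way to check that a component asks for as little as possible.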

### Async Options

Avoid asking users to enter ID values. Use [async options](/docs/components/contributing/api/#async-options-example) (with label/value definitions) so users can make selections from a drop down menu. For example, Todoist identifies projects by numeric IDs (e.g., 12345). The async option to select a project displays the name of the project as the label, so that’s the value the user sees when interacting with the source (e.g., “My Project”). The code referencing the selection receives the numeric ID (12345).

Async options should also support [pagination](/docs/components/contributing/api/#async-options-example) (so users can navigate across multiple pages of options for long lists). See [Hubspot](https://github.com/PipedreamHQ/pipedream/blob/a9b45d8be3b84504dc22bb2748d925f0d5c1541f/components/hubspot/hubspot.app.mjs#L136) for an example of offset-based pagination. See [Twitter](https://github.com/PipedreamHQ/pipedream/blob/d240752028e2a17f7cca1a512b40725566ea97bd/components/twitter/twitter.app.mjs#L200) for an example of cursor-based pagination.
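
A sketch of an async options definition with offset-based pagination follows. `listProjects` is a hypothetical method standing in for a real API call, the page size of 50 is arbitrary, and the example assumes `page` is a zero-based index passed to `options()`.

```javascript
// Maps API records to the { label, value } shape used by async options
function toOptions(projects) {
  return projects.map((project) => ({
    label: project.name, // what the user sees in the drop-down
    value: project.id, // what the component code receives
  }));
}

// Hypothetical prop definition; `listProjects` stands in for a real API method
const projectProp = {
  type: "integer",
  label: "Project",
  description: "Select the project to watch",
  async options({ page }) {
    const limit = 50;
    // Offset-based pagination, assuming `page` starts at 0
    const projects = await this.listProjects({ offset: page * limit, limit });
    return toOptions(projects);
  },
};
```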

### Dynamic Props

[Dynamic props](/docs/components/contributing/api/#dynamic-props) can improve the user experience for components. They let you render props in Pipedream dynamically, based on the value of other props, and can be used to collect more specific information that can make it easier to use the component. See the Google Sheets example in the linked component API docs.

### Interface & Service Props

In the interest of consistency, use the following naming patterns when defining [interface](/docs/components/contributing/api/#interface-props) and [service](/docs/components/contributing/api/#service-props) props in source components:

| Prop                | **Recommended Prop Variable Name** |
| ------------------- | ---------------------------------- |
| `$.interface.http`  | `http`                             |
| `$.interface.timer` | `timer`                            |
| `$.service.db`      | `db`                               |

Use getters and setters when dealing with `$.service.db` to avoid potential typos and leverage encapsulation (e.g., see the [Search Mentions](https://github.com/PipedreamHQ/pipedream/blob/master/components/twitter/sources/search-mentions/search-mentions.mjs#L83-L88) event source for Twitter).
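
The getter/setter pattern might be sketched as follows. The key `since_id` and the method names are hypothetical and are not copied from the linked Twitter source; the point is that the key string appears in only one pair of methods.

```javascript
// Sketch: wrapping $.service.db access in a getter and a setter.
// In a component file, this object would be the module's default export.
const mentionsSource = {
  name: "New Mention",
  version: "0.0.1",
  props: {
    db: "$.service.db",
    timer: {
      type: "$.interface.timer",
      default: { intervalSeconds: 15 * 60 },
    },
  },
  methods: {
    // The key name lives here only, so it cannot be mistyped elsewhere
    _getSinceId() {
      return this.db.get("since_id");
    },
    _setSinceId(sinceId) {
      this.db.set("since_id", sinceId);
    },
  },
  async run() {
    const sinceId = this._getSinceId() ?? 0;
    // A real source would fetch items newer than `sinceId` here;
    // a fixed increment stands in for the newest ID seen
    this._setSinceId(sinceId + 1);
  },
};
```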

## Source Guidelines

These guidelines are specific to [source](/docs/workflows/building-workflows/triggers/) development.

### Webhook vs Polling Sources

Create subscription webhook sources (vs polling sources) whenever possible. Webhook sources receive and emit events in real time and typically use less compute time from the user’s account. Note: In some cases, it may be appropriate to support webhook and polling sources for the same event. For example, Calendly supports subscription webhooks for their premium users, but non-premium users are limited to the REST API. A webhook source can be created to emit new Calendly events for premium users, and a polling source can be created to support similar functionality for non-premium users.

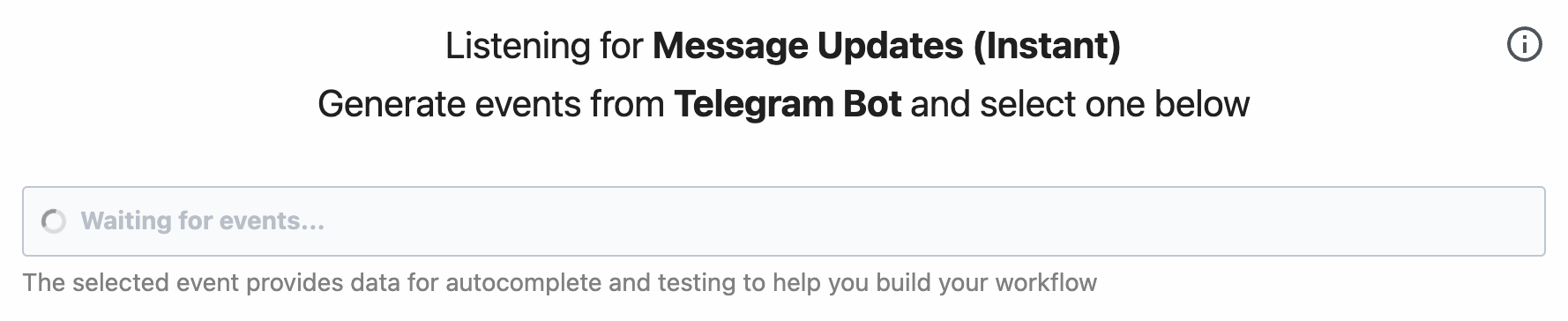

### Source Name

Source name should be a singular, title-cased name and should start with “New” (unless emits are not limited to new items). Name should not be slugified and should not include the app name. NOTE: Pipedream does not currently distinguish real-time event sources for end-users automatically. The current pattern to identify a real-time event source is to include “(Instant)” in the source name. E.g., “New Search Mention” or “New Submission (Instant)”.

### Source Description

Enter a short description that provides more detail than the name alone. Typically starts with “Emit new”. E.g., “Emit new Tweets that match your search criteria”.

### Emit a Summary

Always [emit a summary](/docs/components/contributing/api/#emit) for each event. For example, the summary for each new Tweet emitted by the Search Mentions source is the content of the Tweet itself.

If no sensible summary can be identified, submit the event payload in string format as the summary.

### Deduping

Use built-in [deduping strategies](/docs/components/contributing/api/#dedupe-strategies) whenever possible (`unique`, `greatest`, `last`) vs developing custom deduping code. Develop custom deduping code if the existing strategies do not support the requirements for a source.

### Surfacing Test Events

In order to provide users with source events that they can immediately reference when building their workflow, we should implement 2 strategies whenever possible:

#### Emit Events on First Run

* Polling sources should always emit events on the first run (see the [Spotify: New Playlist](https://github.com/PipedreamHQ/pipedream/blob/master/components/spotify/sources/new-playlist/new-playlist.mjs) source as an example)

* Webhook-based sources should attempt to fetch existing events in the `deploy()` hook during source creation (see the [Jotform: New Submission](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/new-submission.mjs) source)

*Note – make sure to emit the most recent events (considering pagination), and limit the count to no more than 50 events.*
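
One way to apply the 50-event cap is sketched below. `selectInitialEvents` and `listItems` are hypothetical names, each item is assumed to carry a numeric `ts` field, and emitting in chronological order is a design choice rather than a stated requirement.

```javascript
// Returns the most recent events, oldest first, capped at `limit`.
// Assumes each event has a numeric `ts` field (a hypothetical name).
function selectInitialEvents(events, limit = 50) {
  return [...events]
    .sort((a, b) => b.ts - a.ts) // newest first
    .slice(0, limit) // keep only the most recent `limit`
    .reverse(); // emit in chronological order
}

// In a component file, this object would be the module's default export
const pollingSource = {
  name: "New Item",
  version: "0.0.1",
  dedupe: "unique",
  hooks: {
    async deploy() {
      // `listItems` is a hypothetical method that returns all available items
      const items = await this.listItems();
      for (const item of selectInitialEvents(items)) {
        this.$emit(item, {
          id: item.id,
          summary: `New item ${item.id}`,
          ts: item.ts,
        });
      }
    },
  },
};
```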

#### Include a Static Sample Event

There are times where there may not be any historical events available (think about sources that emit less frequently, like “New Customer” or “New Order”, etc). In these cases, we should include a static sample event so users can see the event shape and reference it while building their workflow, even if it’s using fake data.

To achieve this, follow these steps:

1. Copy the JSON output from the source’s emit (what you get from `steps.trigger.event`) and **make sure to remove or scrub any sensitive or personal data** (you can also copy this from the app’s API docs)

2. Add a new file called `test-event.mjs` in the same directory as the component source and export the JSON event via `export default` ([example](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/test-event.mjs))

3. In the source component code, make sure to import that file as `sampleEmit` ([example](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/new-submission.mjs#L2))

4. And finally, export the `sampleEmit` object ([example](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/new-submission.mjs#L96))
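
The four steps above can be sketched in a single snippet. In a real component the sample object would live in `test-event.mjs` and be imported into the source file; all field names and values here are fake and only illustrate the shape.

```javascript
// Would be the default export of test-event.mjs.
// All values are fake; scrub any real or personal data before committing.
const sampleEmit = {
  id: "1234567890",
  form_id: "210000000000000",
  created_at: "2021-01-01T00:00:00Z",
  answers: { name: "Jane Doe", email: "jane@example.com" },
};

// In the source file, the imported object is exposed as `sampleEmit`
const newSubmissionSource = {
  name: "New Submission (Instant)",
  description: "Emit new event for each form submission",
  version: "0.0.1",
  type: "source",
  async run(event) {
    this.$emit(event.body, {
      id: event.body.id,
      summary: `New submission ${event.body.id}`,
      ts: Date.now(),
    });
  },
  sampleEmit, // exported alongside the rest of the component
};
```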

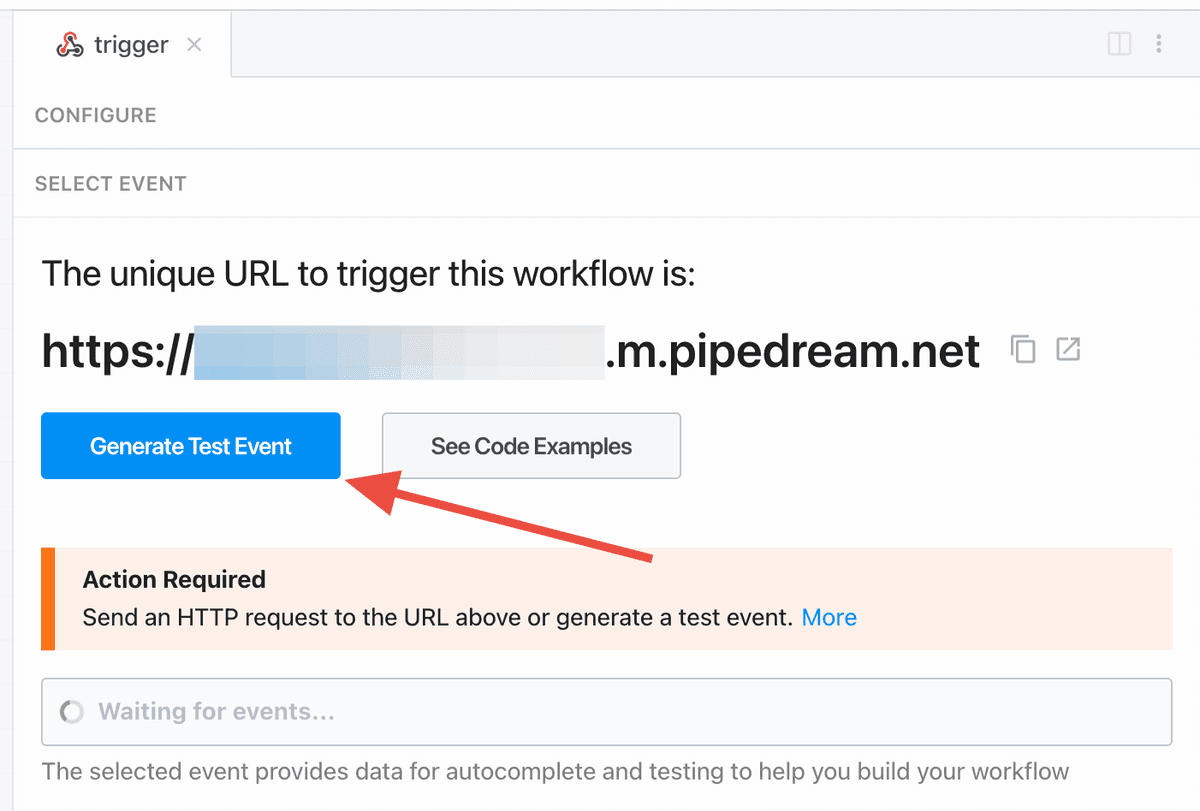

This will render a “Generate Test Event” button in the UI for users to emit that sample event:

### Default Values

Provide [default values](/docs/components/contributing/api/#user-input-props) whenever possible. NOTE: the best default for a source doesn’t always map to the default recommended by the app. For example, Twitter defaults search results to an algorithm that balances recency and popularity. However, the best default for the use case on Pipedream is recency.

### Async Options

Avoid asking users to enter ID values. Use [async options](/docs/components/contributing/api/#async-options-example) (with label/value definitions) so users can make selections from a drop down menu. For example, Todoist identifies projects by numeric IDs (e.g., 12345). The async option to select a project displays the name of the project as the label, so that’s the value the user sees when interacting with the source (e.g., “My Project”). The code referencing the selection receives the numeric ID (12345).

Async options should also support [pagination](/docs/components/contributing/api/#async-options-example) (so users can navigate across multiple pages of options for long lists). See [Hubspot](https://github.com/PipedreamHQ/pipedream/blob/a9b45d8be3b84504dc22bb2748d925f0d5c1541f/components/hubspot/hubspot.app.mjs#L136) for an example of offset-based pagination. See [Twitter](https://github.com/PipedreamHQ/pipedream/blob/d240752028e2a17f7cca1a512b40725566ea97bd/components/twitter/twitter.app.mjs#L200) for an example of cursor-based pagination.

### Dynamic Props

[Dynamic props](/docs/components/contributing/api/#dynamic-props) can improve the user experience for components. They let you render props in Pipedream dynamically, based on the value of other props, and can be used to collect more specific information that can make it easier to use the component. See the Google Sheets example in the linked component API docs.

### Interface & Service Props

In the interest of consistency, use the following naming patterns when defining [interface](/docs/components/contributing/api/#interface-props) and [service](/docs/components/contributing/api/#service-props) props in source components:

| Prop                | **Recommended Prop Variable Name** |
| ------------------- | ---------------------------------- |
| `$.interface.http`  | `http`                             |
| `$.interface.timer` | `timer`                            |
| `$.service.db`      | `db`                               |

Use getters and setters when dealing with `$.service.db` to avoid potential typos and leverage encapsulation (e.g., see the [Search Mentions](https://github.com/PipedreamHQ/pipedream/blob/master/components/twitter/sources/search-mentions/search-mentions.mjs#L83-L88) event source for Twitter).
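
A minimal sketch of the getter/setter pattern, assuming the `get(key)` / `set(key, value)` interface of `$.service.db`. The `lastId` key and the method names are illustrative only.

```javascript
const source = {
  props: {
    db: "$.service.db",
  },
  methods: {
    // The key name appears in exactly one place per accessor,
    // so a typo cannot silently read or write a different key.
    _getLastId() {
      return this.db.get("lastId");
    },
    _setLastId(lastId) {
      this.db.set("lastId", lastId);
    },
  },
};
```

The rest of the component then calls `this._getLastId()` and `this._setLastId(id)` rather than repeating the string key.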

## Source Guidelines

These guidelines are specific to [source](/docs/workflows/building-workflows/triggers/) development.

### Webhook vs Polling Sources

Create subscription webhook sources (vs polling sources) whenever possible. Webhook sources receive and emit events in real time and typically use less compute time from the user’s account. Note: In some cases, it may be appropriate to support webhook and polling sources for the same event. For example, Calendly supports subscription webhooks for their premium users, but non-premium users are limited to the REST API. A webhook source can be created to emit new Calendly events for premium users, and a polling source can be created to support similar functionality for non-premium users.

### Source Name

Source name should be a singular, title-cased name and should start with “New” (unless emits are not limited to new items). Name should not be slugified and should not include the app name. NOTE: Pipedream does not currently distinguish real-time event sources for end-users automatically. The current pattern to identify a real-time event source is to include “(Instant)” in the source name. E.g., “New Search Mention” or “New Submission (Instant)”.

### Source Description

Enter a short description that provides more detail than the name alone. The description typically starts with “Emit new”. E.g., “Emit new Tweets that match your search criteria”.

### Emit a Summary

Always [emit a summary](/docs/components/contributing/api/#emit) for each event. For example, the summary for each new Tweet emitted by the Search Mentions source is the content of the Tweet itself.

If no sensible summary can be identified, submit the event payload in string format as the summary.
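
A sketch of how a source might build the metadata it passes to `this.$emit()`, including the fallback to a stringified payload. `fetchEvents` and the `text` and `created_at` fields are hypothetical and depend on the API being integrated.

```javascript
const source = {
  methods: {
    generateMeta(event) {
      return {
        id: event.id,
        // Prefer a human-readable field; fall back to the stringified payload.
        summary: event.text ?? JSON.stringify(event),
        // Timestamp in milliseconds; use "now" if the event has none.
        ts: event.created_at ? Date.parse(event.created_at) : Date.now(),
      };
    },
  },
  async run() {
    const events = await this.fetchEvents(); // hypothetical method
    for (const event of events) {
      this.$emit(event, this.generateMeta(event));
    }
  },
};
```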

### Deduping

Use built-in [deduping strategies](/docs/components/contributing/api/#dedupe-strategies) whenever possible (`unique`, `greatest`, `last`) vs developing custom deduping code. Develop custom deduping code if the existing strategies do not support the requirements for a source.

### Surfacing Test Events

To provide users with source events that they can immediately reference when building their workflow, implement the following two strategies whenever possible:

#### Emit Events on First Run

* Polling sources should always emit events on the first run (see the [Spotify: New Playlist](https://github.com/PipedreamHQ/pipedream/blob/master/components/spotify/sources/new-playlist/new-playlist.mjs) source as an example)

* Webhook-based sources should attempt to fetch existing events in the `deploy()` hook during source creation (see the [Jotform: New Submission](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/new-submission.mjs) source)

*Note – make sure to emit the most recent events (considering pagination), and limit the count to no more than 50 events.*
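
The note above can be sketched as a `deploy()` hook that emits only the most recent events. `listRecentEvents` is a hypothetical method, assumed here to return events newest first; emitting oldest first is a design choice in this sketch, not a stated requirement.

```javascript
const MAX_INITIAL_EVENTS = 50;

const source = {
  hooks: {
    async deploy() {
      // Assumption: the hypothetical API helper returns events newest first.
      const events = await this.listRecentEvents();
      // Keep the 50 most recent, then emit oldest first so the newest
      // event ends up at the top of the event list.
      const recent = events.slice(0, MAX_INITIAL_EVENTS).reverse();
      for (const event of recent) {
        this.$emit(event, {
          id: event.id,
          summary: `New event ${event.id}`,
        });
      }
    },
  },
};
```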

#### Include a Static Sample Event

Sometimes no historical events are available (for example, for sources that emit infrequently, like “New Customer” or “New Order”). In these cases, include a static sample event so users can see the event shape and reference it while building their workflow, even if it uses fake data.

To achieve this, follow these steps:

1. Copy the JSON output from the source’s emit (what you get from `steps.trigger.event`) and **make sure to remove or scrub any sensitive or personal data** (you can also copy this from the app’s API docs)

2. Add a new file called `test-event.mjs` in the same directory as the component source and export the JSON event via `export default` ([example](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/test-event.mjs))

3. In the source component code, make sure to import that file as `sampleEmit` ([example](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/new-submission.mjs#L2))

4. And finally, export the `sampleEmit` object ([example](https://github.com/PipedreamHQ/pipedream/blob/master/components/jotform/sources/new-submission/new-submission.mjs#L96))

This will render a “Generate Test Event” button in the UI for users to emit that sample event:

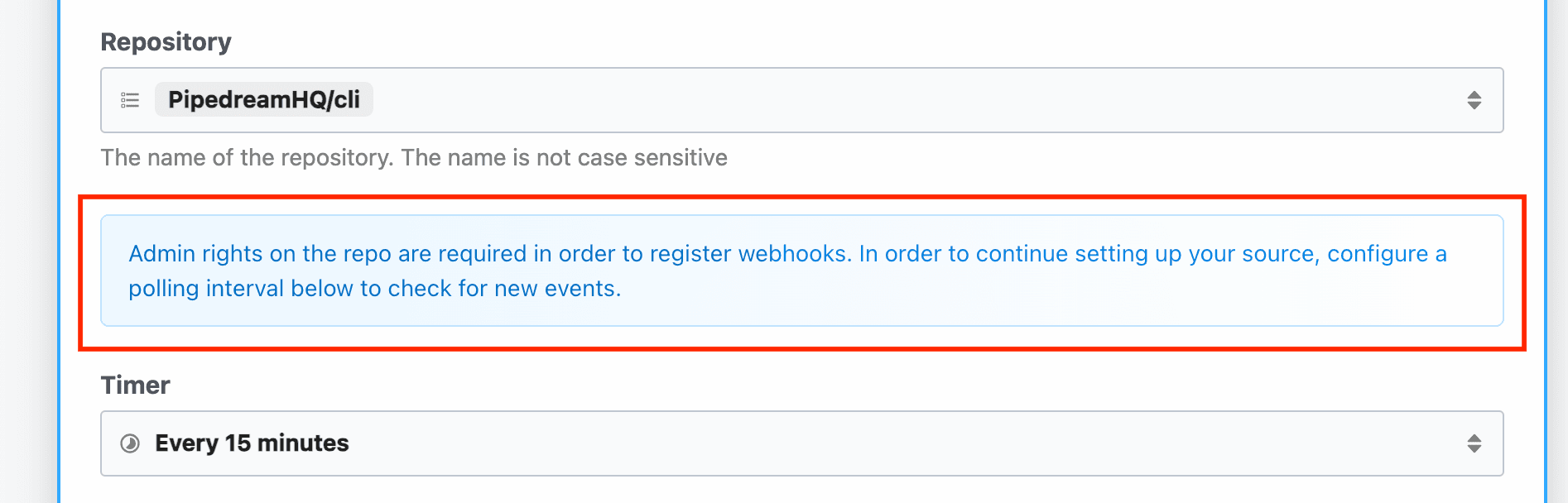

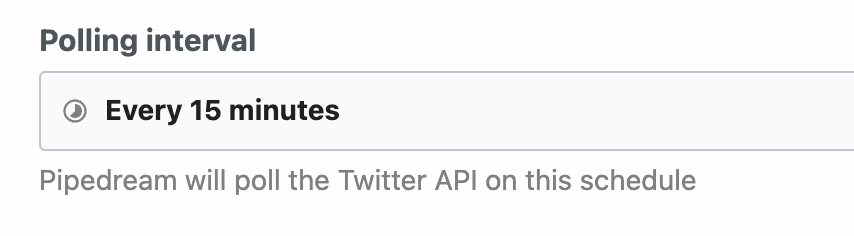
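
The four steps above might look roughly like this. To keep the sketch self-contained, both files are shown in one block; the event fields and component metadata are invented, and attaching `sampleEmit` as a property of the exported component is an assumption based on the linked Jotform example.

```javascript
// --- test-event.mjs (in a real component: `export default { ... }`) ---
// Fake data only: never include real names, emails, or tokens.
const sampleEmit = {
  id: "0000000000",
  created_at: "2024-01-01T00:00:00Z",
  customer: { name: "Jane Doe", email: "jane@example.com" },
};

// --- new-customer.mjs ---
// In a real component: `import sampleEmit from "./test-event.mjs";`
const source = {
  name: "New Customer",
  key: "demo-new-customer",
  version: "0.0.1",
  type: "source",
  async run() {
    // ...fetch and emit real events here...
  },
  // Assumption: exposing the sample event on the exported component is
  // what makes the test event available in the UI.
  sampleEmit,
};
```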

### Polling Sources

#### Default Timer Interval

As a general heuristic, set the default timer interval to 15 minutes. However, you may set a custom interval (greater or less than 15 minutes) if appropriate for the specific source. Users may also override the default value at any time.

For polling sources in the Pipedream registry, the default polling interval is set as a global config. Individual sources can access that default within the props definition:

```javascript
import { DEFAULT_POLLING_SOURCE_TIMER_INTERVAL } from "@pipedream/platform";

export default {
  props: {
    timer: {
      type: "$.interface.timer",
      default: {
        intervalSeconds: DEFAULT_POLLING_SOURCE_TIMER_INTERVAL,
      },
    },
  },
  // rest of component...
};
```

#### Rate Limit Optimization

When building a polling source, cache the most recently processed ID or timestamp using `$.service.db` whenever the API accepts a `since_id`, a “since” timestamp, or an equivalent parameter. Some apps (e.g., GitHub) do not count requests that return no new results against a user’s API quota.
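
A sketch of the caching pattern, assuming an API that accepts a since-ID parameter and returns numeric IDs. `listItems` and the `sinceId` key are hypothetical names chosen for illustration.

```javascript
const source = {
  props: {
    db: "$.service.db",
  },
  methods: {
    _getSinceId() {
      return this.db.get("sinceId");
    },
    _setSinceId(sinceId) {
      this.db.set("sinceId", sinceId);
    },
  },
  async run() {
    // Only ask the API for items newer than the last one processed.
    const sinceId = this._getSinceId();
    const items = await this.listItems({ sinceId }); // hypothetical method

    for (const item of items) {
      this.$emit(item, { id: item.id, summary: `New item ${item.id}` });
    }

    // Persist the highest ID seen so the next run starts from there.
    if (items.length > 0) {
      this._setSinceId(Math.max(...items.map((item) => item.id)));
    }
  },
};
```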

If the service has a well-supported Node.js client library, it’ll often build in retries for issues like rate limits, so using the client lib (when available) should be preferred. In the absence of that, [Bottleneck](https://www.npmjs.com/package/bottleneck) can be useful for managing rate limits. 429s should be handled with exponential backoff (instead of just letting the error bubble up).
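
When no client library is available, exponential backoff on 429 responses can be sketched with a small wrapper like the one below. `withBackoff` and its options are hypothetical names, and the `err.response.status` check assumes an axios-style error object.

```javascript
const defaultSleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retries `fn` when it fails with HTTP 429, doubling the delay each attempt.
async function withBackoff(
  fn,
  { retries = 3, baseDelayMs = 500, sleep = defaultSleep } = {},
) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      const status = err?.response?.status; // assumption: axios-style error
      // Rethrow anything that is not a rate limit, or once retries run out.
      if (status !== 429 || attempt >= retries) throw err;
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

A request helper would then be wrapped as `await withBackoff(() => makeRequest(opts))`, where `makeRequest` is whatever function performs the HTTP call.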

### Webhook Sources

#### Hooks

[Hooks](/docs/components/contributing/api/#hooks) are methods that are automatically invoked by Pipedream at different stages of the [component lifecycle](/docs/components/contributing/api/#source-lifecycle). Webhook subscriptions are typically created when components are instantiated or activated via the `activate()` hook, and deleted when components are deactivated or deleted via the `deactivate()` hook.

#### Helper Methods

Whenever possible, create methods in the app file to manage [creating and deleting webhook subscriptions](/docs/components/contributing/api/#hooks).

| **Description**                         | **Method Name** |
| --------------------------------------- | --------------- |
| Method to create a webhook subscription | `createHook()`  |
| Method to delete a webhook subscription | `deleteHook()`  |

#### Storing the 3rd Party Webhook ID

After subscribing to a webhook, save the ID for the hook returned by the 3rd party service to the `$.service.db` for a source using the key `hookId`. This ID will be referenced when managing or deleting the webhook. Note: some apps may not return a unique ID for the registered webhook (e.g., Jotform).
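
Putting the hooks, helper methods, and `hookId` convention together, a webhook source might be structured as in the sketch below. `this.app` stands in for the app prop, and the signatures of `createHook()` and `deleteHook()` are assumptions, since each third-party API differs. `this.http.endpoint` is assumed to be the source’s unique URL as exposed by `$.interface.http`.

```javascript
const source = {
  props: {
    // `app` would be the app prop imported from the app file (omitted here).
    http: "$.interface.http",
    db: "$.service.db",
  },
  hooks: {
    async activate() {
      // Subscribe the source's endpoint URL with the third-party service.
      const { id } = await this.app.createHook({ url: this.http.endpoint });
      this._setHookId(id);
    },
    async deactivate() {
      const hookId = this._getHookId();
      // Some services return no ID; only delete when one was stored.
      if (hookId) {
        await this.app.deleteHook(hookId);
      }
    },
  },
  methods: {
    _getHookId() {
      return this.db.get("hookId");
    },
    _setHookId(hookId) {
      this.db.set("hookId", hookId);
    },
  },
};
```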

#### Signature Validation

Subscription webhook components should always validate the incoming event signature if the source app supports it.

#### Shared Secrets

If the source app supports shared secrets, implement support in a way that is transparent to the end user. Generate and use a GUID for the shared secret value, save it to a `$.service.db` key, and use the saved value to validate incoming events.
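
As an illustration of signature validation with a generated shared secret, the sketch below assumes the third-party service signs the raw request body with HMAC-SHA256 and sends the hex digest in an `x-signature` header. Both the scheme and the header name are assumptions, as is the `bodyRaw` field on the incoming event; check the app’s webhook documentation for the real details.

```javascript
// In an `.mjs` component, use: import crypto from "crypto";
const crypto = require("crypto");

const source = {
  props: {
    http: "$.interface.http",
    db: "$.service.db",
  },
  hooks: {
    async activate() {
      // Generate the secret for the user and store it for later validation.
      const secret = crypto.randomUUID();
      this.db.set("secret", secret);
      // ...pass `secret` to the service when creating the subscription...
    },
  },
  methods: {
    isSignatureValid(rawBody, signature) {
      const expected = crypto
        .createHmac("sha256", this.db.get("secret"))
        .update(rawBody)
        .digest("hex");
      const a = Buffer.from(expected);
      const b = Buffer.from(signature ?? "");
      // timingSafeEqual throws on length mismatch, so check length first.
      return a.length === b.length && crypto.timingSafeEqual(a, b);
    },
  },
  async run(event) {
    const signature = event.headers?.["x-signature"]; // assumed header name
    if (!this.isSignatureValid(event.bodyRaw, signature)) {
      console.log("Invalid signature, skipping event");
      return;
    }
    this.$emit(event.body, { summary: "New verified event" });
  },
};
```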

## Action Guidelines

### Action Name

Like [source name](/docs/components/contributing/guidelines/#source-name), action name should be a singular, title-cased name, should not be slugified, and should not include the app name.

As a general pattern, articles are not included in the action name. For example, instead of “Create a Post”, use “Create Post”.

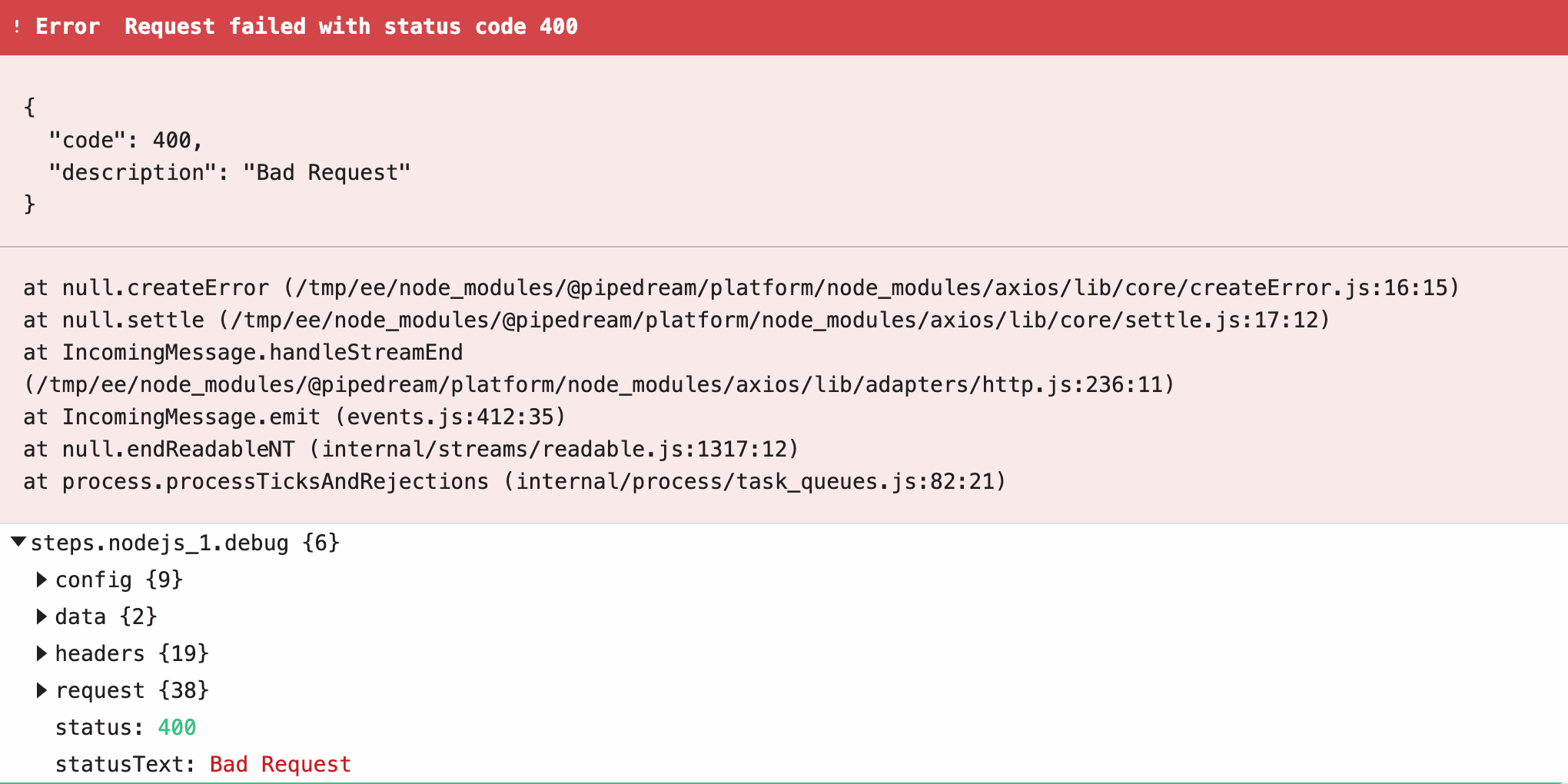

#### Use `@pipedream/platform` axios for all HTTP Requests

By default, the standard `axios` package doesn’t return useful debugging data to the user when it `throw`s errors on HTTP 4XX and 5XX status codes. This makes it hard for the user to troubleshoot the issue.

Instead, [use `@pipedream/platform` axios](/docs/workflows/building-workflows/http/#platform-axios).

#### Return JavaScript Objects

When you `return` data from an action, it’s exposed as a [step export](/docs/workflows/#step-exports) for users to reference in future steps of their workflow. Return JavaScript objects in all cases, unless there’s a specific reason not to.

For example, some APIs return XML responses. If you return XML from the step, it’s harder for users to parse and reference in future steps. Convert the XML to a JavaScript object, and return that, instead.

### “List” Actions

#### Return an Array of Objects

To simplify using results from “list”/“search” actions in future steps of a workflow, return an array of the items being listed rather than an object with a nested array. [See this example for Airtable](https://github.com/PipedreamHQ/pipedream/blob/cb4b830d93e1495d8622b0c7dbd80cd3664e4eb3/components/airtable/actions/common-list.js#L48-L63).

#### Handle Pagination

For actions that return a list of items, the common use case is to retrieve all items. Handle pagination within the action to remove the complexity of needing to paginate from users. We may revisit this in the future and expose the pagination / offset params directly to the user.

In some cases, it may be appropriate to limit the number of API requests made or records returned in an action. For example, some Twitter actions optionally limit the number of API requests that are made per execution (using a [`maxRequests` prop](https://github.com/PipedreamHQ/pipedream/blob/cb4b830d93e1495d8622b0c7dbd80cd3664e4eb3/components/twitter/twitter.app.mjs#L52)) to avoid exceeding Twitter’s rate limits. [See the Airtable components](https://github.com/PipedreamHQ/pipedream/blob/e2bb7b7bea2fdf5869f18e84644f5dc61d9c22f0/components/airtable/airtable.app.js#L14) for an example of using a `maxRecords` prop to optionally limit the maximum number of records to return.
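
A sketch of a “list” action that handles pagination internally, returns a flat array, and honors an optional `maxRecords` limit. `listPage` and the `{ records, offset }` response shape are hypothetical; the linked Airtable and Twitter components show the real implementations.

```javascript
const action = {
  props: {
    maxRecords: {
      type: "integer",
      label: "Max Records",
      description: "Optionally limit the number of records returned",
      optional: true,
    },
  },
  async run() {
    const results = [];
    let offset;
    do {
      // Hypothetical helper: returns one page plus the offset of the next
      // page, or `undefined` when there are no more pages.
      const page = await this.listPage({ offset });
      results.push(...page.records);
      offset = page.offset;
    } while (
      offset !== undefined &&
      (!this.maxRecords || results.length < this.maxRecords)
    );

    // Return the array itself rather than an object wrapping it.
    return this.maxRecords ? results.slice(0, this.maxRecords) : results;
  },
};
```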

### Use `$.summary` to Summarize What Happened

[Describe what happened](/docs/components/contributing/api/#returning-data-from-steps) when an action succeeds by following these guidelines:

* Use plain language and provide helpful and contextually relevant information (especially the count of items)

* Whenever possible, use names and titles instead of IDs

* Basic structure: *Successfully \[action performed (like added, removed, updated)] “\[relevant destination]”*
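
The guidelines above can be sketched as follows, assuming the summary is set with `$.export("$summary", ...)` as described in the linked API docs. The action, its props, and the `addItems` method are invented for illustration.

```javascript
const action = {
  name: "Add Items",
  key: "demo-add-items",
  version: "0.0.1",
  type: "action",
  async run({ $ }) {
    // Hypothetical method that adds items and returns the created records.
    const added = await this.addItems(this.listName, this.items);

    // Plain language, a count, and a name instead of an ID.
    const count = added.length;
    const noun = count === 1 ? "item" : "items";
    $.export("$summary", `Successfully added ${count} ${noun} to "${this.listName}"`);

    return added;
  },
};
```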

### Don’t Export Data You Know Will Be Large

Browsers can crash when users load large exports (many MBs of data). When you know the content being returned is likely to be large (e.g., files), don’t export the full content. Consider writing the data to the `/tmp` directory and exporting a reference to the file.
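
A sketch of writing large content to `/tmp` and returning only a reference to it. `downloadFile` and the `filename` prop are hypothetical; the point is that the step export contains the path and size, not the file contents.

```javascript
// In an `.mjs` component, use: import fs from "fs"; import path from "path";
const fs = require("fs");
const path = require("path");

const action = {
  async run({ $ }) {
    // Hypothetical method that returns the file contents as a Buffer.
    const content = await this.downloadFile();

    const filePath = path.join("/tmp", this.filename);
    await fs.promises.writeFile(filePath, content);

    $.export("$summary", `Successfully saved file to "${filePath}"`);
    // Export a small reference object instead of the full content.
    return { filePath, sizeBytes: content.length };
  },
};
```

Later steps in the same workflow can read the file from the returned `filePath` when they need its contents.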

## Miscellaneous

* Use camelCase for all props, method names, and variables.

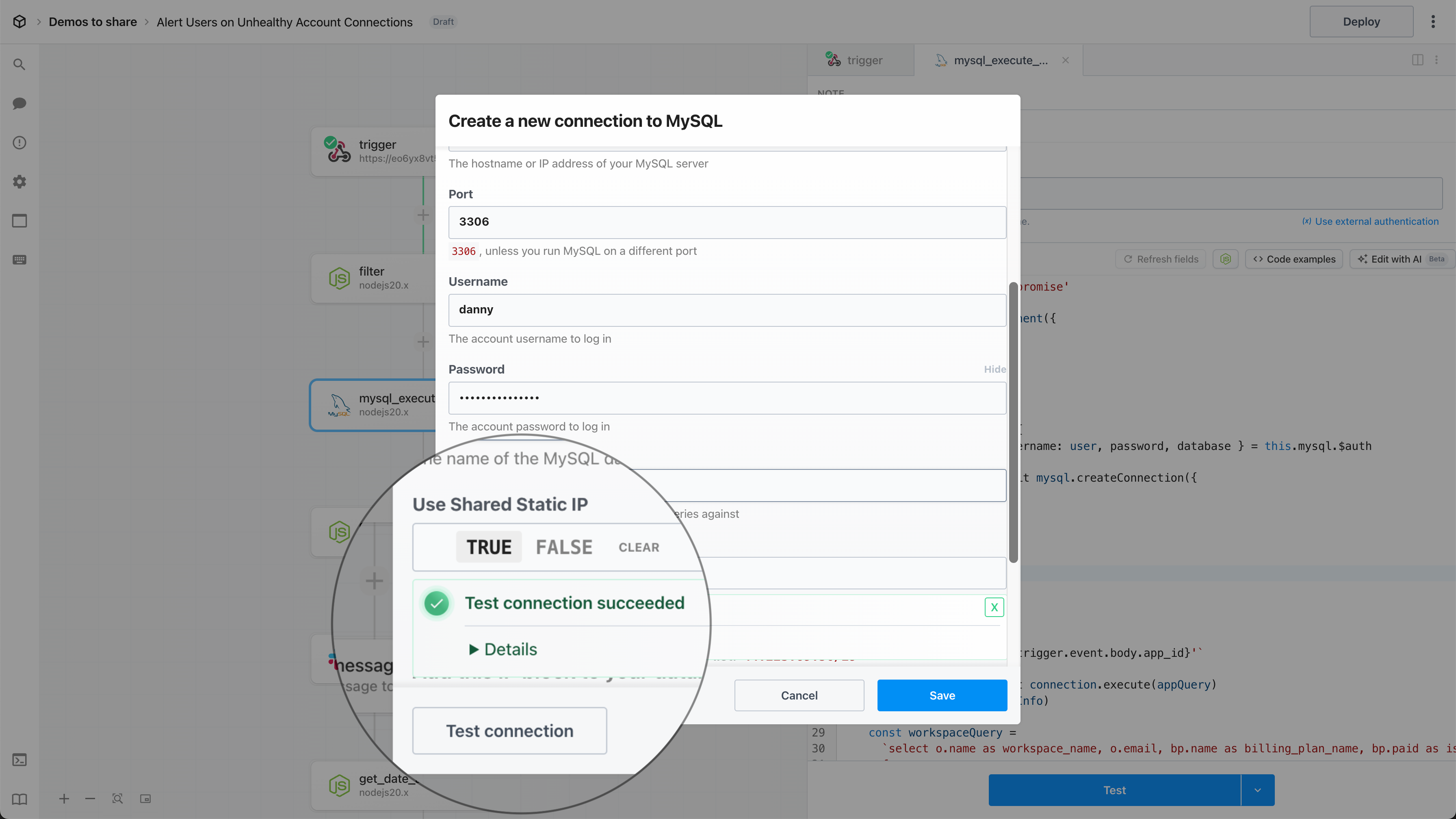

## Database Components

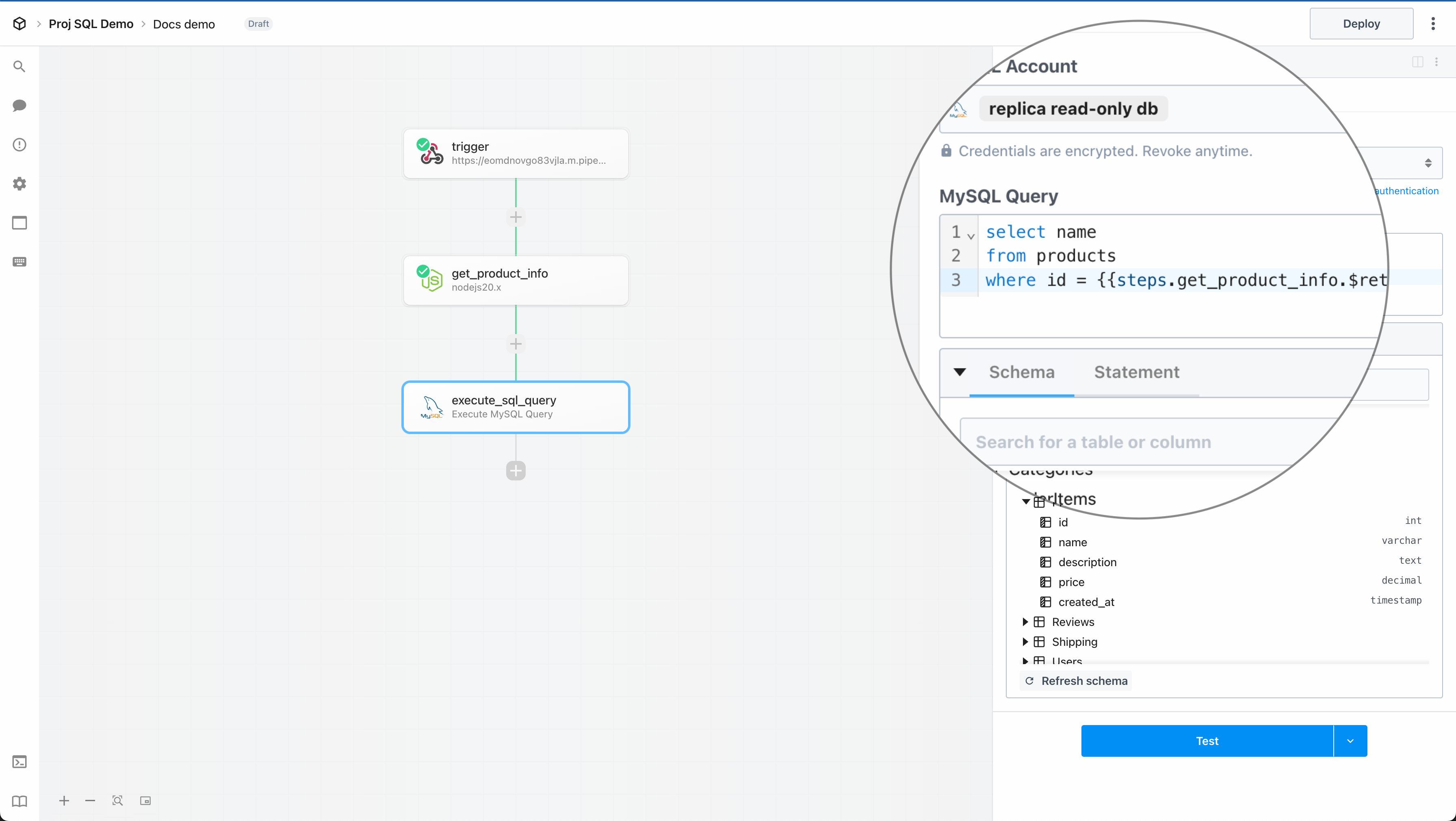

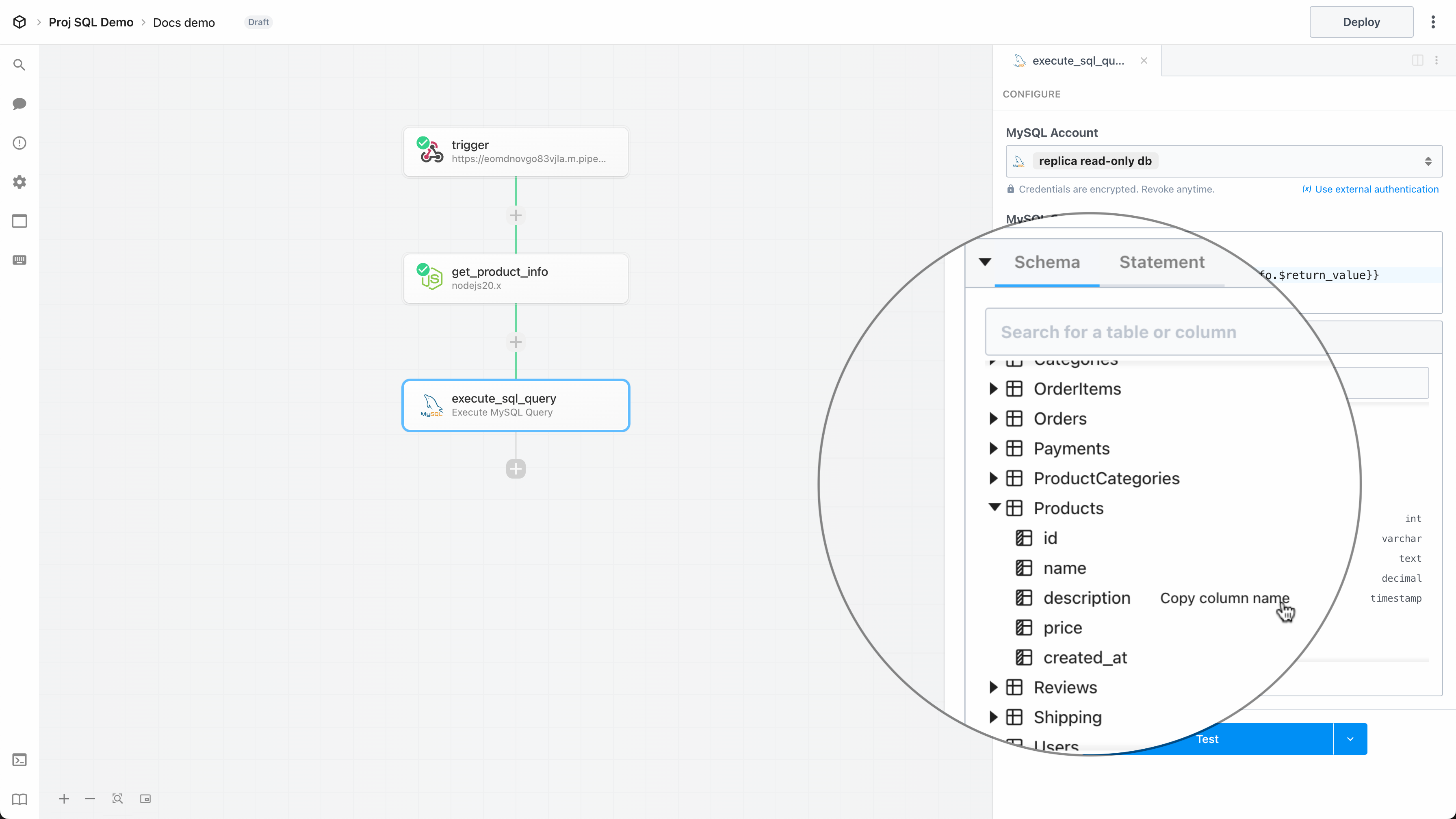

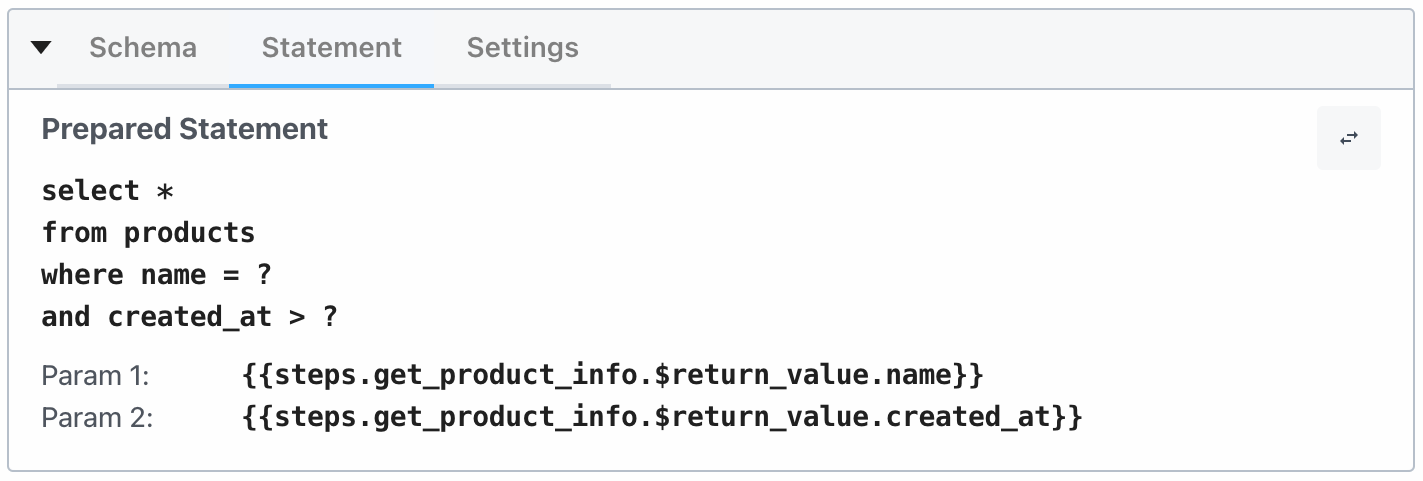

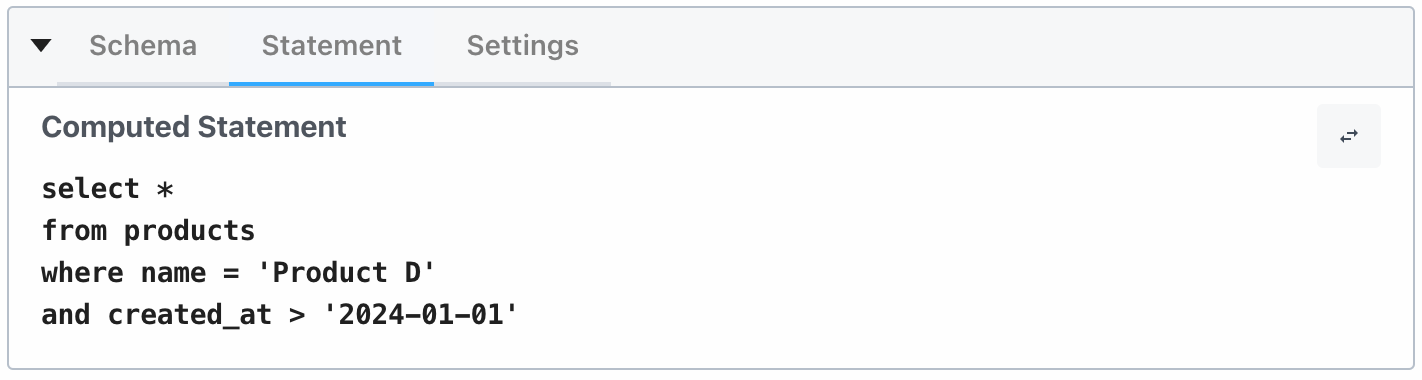

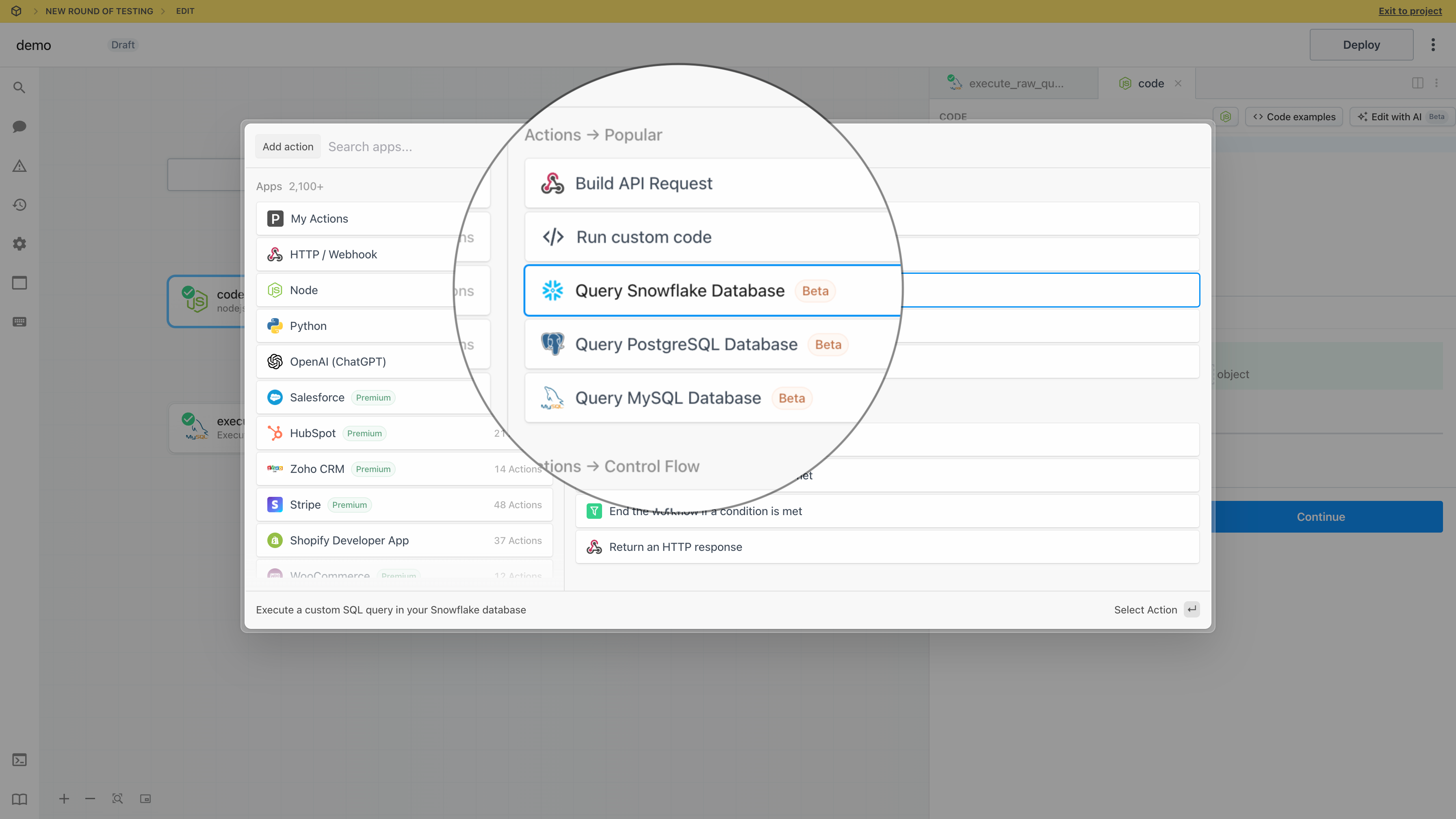

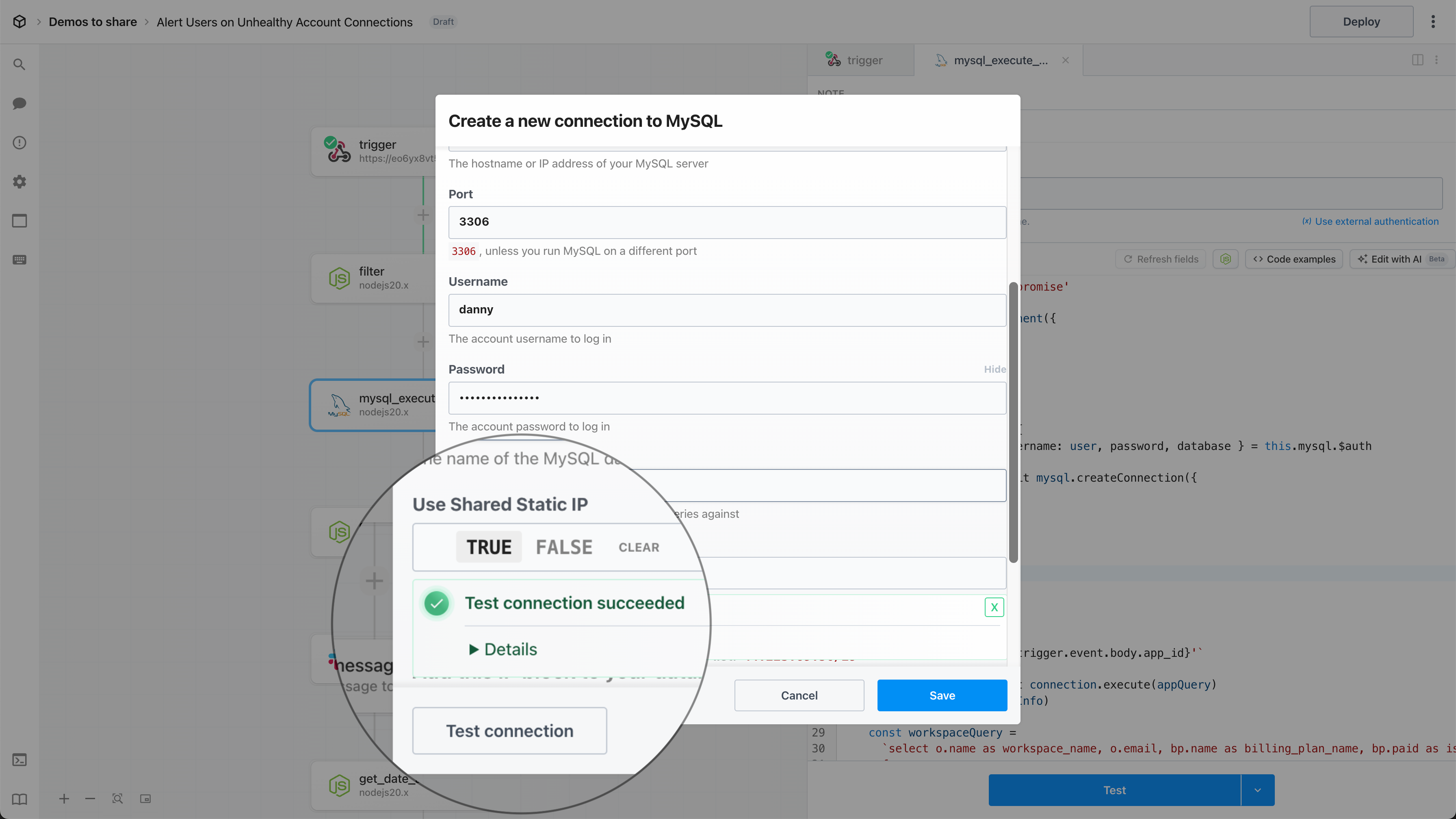

Pipedream supports a special category of apps called [“databases”](/docs/workflows/data-management/databases/), such as [MySQL](https://github.com/PipedreamHQ/pipedream/tree/master/components/mysql), [PostgreSQL](https://github.com/PipedreamHQ/pipedream/tree/master/components/postgresql), [Snowflake](https://github.com/PipedreamHQ/pipedream/tree/master/components/snowflake), etc. Components tied to these apps offer unique features *as long as* they comply with some requirements. The most important features are:

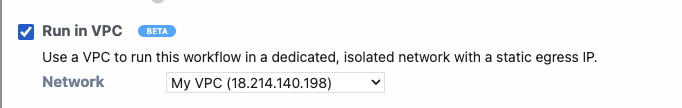

1. A built-in SQL editor that allows users to input a SQL query to be run against their DB

2. Proxied execution of commands against a DB, which guarantees that such requests are always being made from the same range of static IPs (see the [shared static IPs docs](/docs/workflows/data-management/databases/#send-requests-from-a-shared-static-ip))